Pansharpening with a Guided Filter Based on Three-Layer Decomposition

Abstract

1. Introduction

2. Guided Filter

3. Proposed Method

3.1. Overview

- (1) The pixel values of the original MS and PAN images are normalized to the range 0–1 to strengthen the correlation between the MS bands and the PAN image. Then, the PAN image is histogram-matched to the intensity component $I = \sum_{b=1}^{N} w_b \widetilde{MS}_b$, a linear combination of the bicubic-resampled MS bands $\widetilde{MS}_b$ ($b = 1, \dots, N$) whose spectral responses are approximately covered by that of the PAN image [7,30]. The linear combination coefficients $w_b$ are calculated by least-squares regression between the original MS image and the downsampled PAN image [31].

- (2)

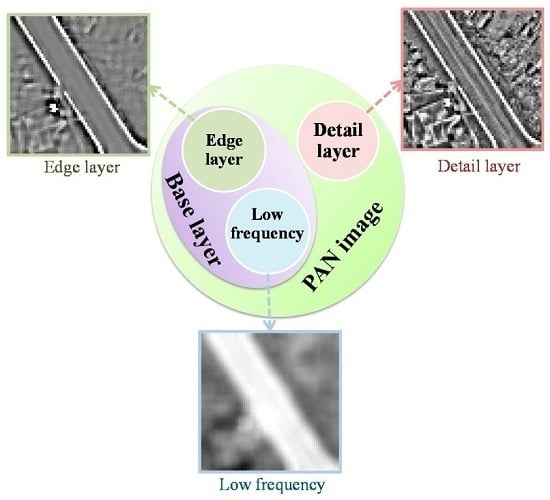

- The histogram-matched PAN image is decomposed into three layers, i.e., a strong edge layer , a detail layer , and a low-frequency layer , based on three layer decomposition technique.

- (3) The edge layer $E$ and the detail layer $D$ are injected into each MS band by a proportional injection model to obtain the fused image:

  $$F_b = \widetilde{MS}^{\mathrm{GF}}_b + W_b \left( \alpha E + \beta D \right),$$

  where $F_b$ denotes the $b$-th band of the fused image, and $\widetilde{MS}^{\mathrm{GF}}_b$ is the $b$-th band of the anti-aliasing bicubic-resampled MS image after guided filtering, which suppresses spatial distortion. In this filtering step, the guidance image is the resampled MS image itself, so that its original spectral information is preserved as much as possible. $W_b$ is the weight of band $b$, which determines the amount of high-frequency information to be injected, and $\alpha$ and $\beta$ are parameters that control the relative contributions of the edge layer and the detail layer, respectively.
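Step (1) can be sketched in Python with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the helper names (`normalize01`, `block_downsample`, `intensity_weights`, `histogram_match`, `prepare_pan`) are hypothetical, the PAN image is degraded to MS resolution by simple block averaging, the weights come from ordinary least squares via `numpy.linalg.lstsq` (reference [31] describes a non-negativity-constrained solver, which this sketch does not reproduce), and histogram matching is implemented as empirical-CDF (quantile) mapping.

```python
import numpy as np


def normalize01(x):
    """Min-max rescale an array to [0, 1]."""
    x = np.asarray(x, dtype=np.float64)
    lo, hi = x.min(), x.max()
    return (x - lo) / (hi - lo) if hi > lo else np.zeros_like(x)


def block_downsample(img, factor):
    """Degrade an image by averaging non-overlapping factor x factor blocks (assumed degradation)."""
    h = img.shape[0] // factor * factor
    w = img.shape[1] // factor * factor
    blocks = img[:h, :w].reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))


def intensity_weights(ms_low, pan_low):
    """Ordinary least-squares weights w with sum_b w[b] * ms_low[b] ~= pan_low (no sign constraint)."""
    a = ms_low.reshape(ms_low.shape[0], -1).T  # one row per pixel, one column per band
    w, *_ = np.linalg.lstsq(a, pan_low.ravel(), rcond=None)
    return w


def histogram_match(src, ref):
    """Quantile mapping: remap src so that its empirical CDF follows that of ref."""
    _, s_inv, s_cnt = np.unique(src.ravel(), return_inverse=True, return_counts=True)
    r_vals, r_cnt = np.unique(ref.ravel(), return_counts=True)
    s_cdf = np.cumsum(s_cnt) / src.size
    r_cdf = np.cumsum(r_cnt) / ref.size
    return np.interp(s_cdf, r_cdf, r_vals)[s_inv].reshape(src.shape)


def prepare_pan(ms_low, ms_up, pan, factor):
    """Intensity I = sum_b w[b] * ms_up[b], then the PAN histogram-matched to I.

    ms_low: (bands, h, w) original MS; ms_up: (bands, H, W) MS resampled to the
    PAN grid; pan: (H, W). All inputs are assumed to be normalized already.
    """
    w = intensity_weights(ms_low, block_downsample(pan, factor))
    intensity = np.tensordot(w, ms_up, axes=1)  # weighted sum over the band axis
    return histogram_match(pan, intensity), intensity, w


# Synthetic check: a PAN image built from known (hypothetical) band weights.
rng = np.random.default_rng(0)
ms_low = rng.random((3, 8, 8))
true_w = np.array([0.2, 0.5, 0.3])
pan = np.kron(np.tensordot(true_w, ms_low, axes=1), np.ones((2, 2)))
ms_up = np.kron(ms_low, np.ones((1, 2, 2)))  # nearest-neighbour stand-in for bicubic
pan_h, intensity, w = prepare_pan(ms_low, ms_up, pan, factor=2)
```

Because the synthetic PAN image is an exact linear combination of the MS bands, `w` should recover `true_w` up to floating-point error, and `pan_h` ends up with the same value distribution as `intensity`.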

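The injection rule of step (3) is sketched below with NumPy. The band weight $W_b$ is not given explicitly here, so the sketch assumes the ratio gain $W_b = \widetilde{MS}^{\mathrm{GF}}_b / I$ (guided-filtered resampled MS band divided by the intensity component), which is a common choice in proportional injection schemes; the function name `inject_details` and the small `eps` guard against division by zero are likewise illustrative assumptions.

```python
import numpy as np


def inject_details(ms_gf, intensity, edge, detail, alpha=1.0, beta=1.0, eps=1e-12):
    """Proportional injection: F_b = MS_b + W_b * (alpha * E + beta * D).

    ms_gf:        (bands, H, W) resampled MS after guided filtering
    intensity:    (H, W) intensity component I
    edge, detail: (H, W) strong-edge layer E and detail layer D of the PAN image
    Assumption (hypothetical): W_b = ms_gf[b] / I, a ratio-type injection gain.
    """
    gain = ms_gf / (intensity[np.newaxis] + eps)  # W_b for every band at once
    high = alpha * edge + beta * detail            # weighted high-frequency content
    return ms_gf + gain * high[np.newaxis]


# Constant toy layers: two bands at 0.2 and 0.6, I = 0.4, E = 0.1, D = 0.05.
ms_gf = np.stack([np.full((2, 2), 0.2), np.full((2, 2), 0.6)])
intensity = np.full((2, 2), 0.4)
edge, detail = np.full((2, 2), 0.1), np.full((2, 2), 0.05)
fused = inject_details(ms_gf, intensity, edge, detail, alpha=1.0, beta=2.0)
```

With $\alpha = \beta = 0$ the function returns the filtered MS unchanged, which is a quick sanity check; increasing $\alpha$ emphasizes strong edges, while increasing $\beta$ emphasizes fine texture.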
3.2. Three-Layer Decomposition

- (1) First, the guided filter is applied to decompose the histogram-matched PAN image into a base layer and a detail layer:

  $$B = GF(P, P),$$

  where $B$ is the base layer, which contains both the low-frequency layer and the strong edge layer, $P$ is the histogram-matched PAN image, and $GF(\cdot, \cdot)$ denotes the guided filter, with the input image as its first argument and the guidance image as its second. Here, the guidance image is the same as the input image, i.e., the histogram-matched PAN image. Once the base layer is obtained, the detail layer follows directly by subtracting the base layer from the histogram-matched PAN image:

  $$D = P - B,$$

  where $D$ denotes the detail layer.

- (2) Although the detail layer has been extracted, strong edges still remain in the base layer $B$, as can be clearly seen in Figure 2. These strong edges are therefore separated from the base layer:

  $$E = B - \mathrm{Gauss}(B), \qquad L = \mathrm{Gauss}(B),$$

  where $E$ is the strong edge layer, $\mathrm{Gauss}(\cdot)$ denotes the Gaussian low-pass filter, and $L$ represents the low-frequency layer of the PAN image.
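The first decomposition step, a self-guided split into base and detail layers, can be sketched with the standard gray-scale guided filter of He et al., whose local means are computed as box means over an integral image. The radius `r`, the regularizer `eps`, the replicate padding at the borders, and the function names (`box_mean`, `guided_filter`, `base_detail`) are illustrative assumptions, not values or code taken from this paper.

```python
import numpy as np


def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window via an integral image, with replicate padding."""
    k = 2 * r + 1
    s = np.cumsum(np.cumsum(np.pad(img, r, mode="edge"), axis=0), axis=1)
    s = np.pad(s, ((1, 0), (1, 0)))  # leading zero row/column: each window sum is 4 lookups
    return (s[k:, k:] - s[:-k, k:] - s[k:, :-k] + s[:-k, :-k]) / (k * k)


def guided_filter(p, guide, r=4, eps=1e-3):
    """Gray-scale guided filter (He et al.): output = mean(a) * guide + mean(b)."""
    mean_i, mean_p = box_mean(guide, r), box_mean(p, r)
    cov_ip = box_mean(guide * p, r) - mean_i * mean_p
    var_i = box_mean(guide * guide, r) - mean_i ** 2
    a = cov_ip / (var_i + eps)  # local linear coefficient
    b = mean_p - a * mean_i     # local offset
    return box_mean(a, r) * guide + box_mean(b, r)


def base_detail(pan_h, r=4, eps=1e-3):
    """Self-guided split of the histogram-matched PAN: B = GF(P, P) and D = P - B."""
    base = guided_filter(pan_h, pan_h, r, eps)
    return base, pan_h - base


rng = np.random.default_rng(1)
pan_h = rng.random((32, 32))  # stand-in for a histogram-matched PAN image
base, detail = base_detail(pan_h)
```

Using the input as its own guidance makes the filter edge-preserving: where the local variance is much larger than `eps` the coefficient `a` approaches 1 and strong structures stay in the base layer, while flat regions are smoothed.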

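The second step, splitting the base layer into low-frequency and strong-edge parts, can be sketched with a separable Gaussian low-pass filter. The standard deviation `sigma`, the kernel radius of about $3\sigma$, the replicate padding, and the function names are assumptions for illustration. Because $L$ is taken from the base layer and $E = B - L$, while $D = P - B$, the three layers add back exactly to the histogram-matched PAN image $P$: $P = L + E + D$.

```python
import numpy as np


def gaussian_kernel(sigma, radius=None):
    """Normalized 1-D Gaussian kernel; the radius defaults to ceil(3 * sigma)."""
    if radius is None:
        radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def gaussian_blur(img, sigma):
    """Separable Gaussian low-pass filter with replicate padding; output has img's shape."""
    k = gaussian_kernel(sigma)
    r = k.size // 2
    padded = np.pad(np.asarray(img, dtype=np.float64), r, mode="edge")

    def conv1d(v):
        # The kernel is symmetric, so convolution equals correlation here.
        return np.convolve(v, k, mode="valid")

    rows = np.apply_along_axis(conv1d, 1, padded)  # filter along each row
    return np.apply_along_axis(conv1d, 0, rows)    # then along each column


def split_base(base, sigma=2.0):
    """Return (E, L): L = Gauss(B) is the low-frequency layer, E = B - L the strong edges."""
    low = gaussian_blur(base, sigma)
    return base - low, low


rng = np.random.default_rng(3)
base = rng.random((20, 24))  # stand-in for the base layer B
edge, low = split_base(base)
```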
4. Experimental Results and Analyses

4.1. Quantitative Evaluation Indices

4.2. Experimental Results

4.3. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Sirguey, P.; Mathieu, R.; Arnaud, Y.; Khan, M.M.; Chanussot, J. Improving MODIS spatial resolution for snow mapping using wavelet fusion and ARSIS concept. IEEE Geosci. Remote Sens. Lett. 2008, 5, 78–82.

- Ulusoy, I.; Yuruk, H. New method for the fusion of complementary information from infrared and visual images for object detection. IET Image Proc. 2011, 5, 36–48.

- Zhang, Y. Understanding image fusion. Photogramm. Eng. Remote Sens. 2004, 70, 657–661.

- Meng, X.; Shen, H.; Zhang, H.; Zhang, L.; Li, H. Maximum a posteriori fusion method based on gradient consistency constraint for multispectral/panchromatic remote sensing images. Spectrosc. Spectr. Anal. 2014, 34, 1332–1337.

- Meng, X.; Shen, H.; Zhang, L.; Yuan, Q.; Li, H. A unified framework for spatio-temporal-spectral fusion of remote sensing images. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 2584–2587.

- Ballester, C.; Caselles, V.; Igual, L.; Verdera, J.; Rougé, B. A variational model for P+XS image fusion. Int. J. Comput. Vis. 2006, 69, 43–58.

- Zhang, L.; Shen, H.; Gong, W.; Zhang, H. Adjustable model-based fusion method for multispectral and panchromatic images. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2012, 42, 1693–1704.

- Gillespie, A.R.; Kahle, A.B.; Walker, R.E. Color enhancement of highly correlated images. II. Channel ratio and “chromaticity” transformation techniques. Remote Sens. Environ. 1987, 22, 343–365.

- Zhang, Y. System and Method for Image Fusion. Patents US20040141659 A1, 22 July 2004.

- Tu, T.-M.; Su, S.-C.; Shyu, H.-C.; Huang, P.S. A new look at IHS-like image fusion methods. Inf. Fusion 2001, 2, 177–186.

- Tu, T.-M.; Huang, P.S.; Hung, C.-L.; Chang, C.-P. A fast intensity-hue-saturation fusion technique with spectral adjustment for IKONOS imagery. IEEE Geosci. Remote Sens. Lett. 2004, 1, 309–312.

- Chien, C.-L.; Tsai, W.-H. Image fusion with no gamut problem by improved nonlinear IHS transforms for remote sensing. IEEE Trans. Geosci. Remote Sens. 2014, 52, 651–663.

- Brower, B.V.; Laben, C.A. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. Patents US6011875 A, 4 January 2000.

- Shettigara, V. A generalized component substitution technique for spatial enhancement of multispectral images using a higher resolution data set. Photogramm. Eng. Remote Sens. 1992, 58, 561–567.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A. Context-driven fusion of high spatial and spectral resolution images based on oversampled multiresolution analysis. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2300–2312.

- Da Cunha, A.L.; Zhou, J.; Do, M.N. The nonsubsampled contourlet transform: Theory, design, and applications. IEEE Trans. Image Proc. 2006, 15, 3089–3101.

- Choi, M.; Kim, R.Y.; Nam, M.-R.; Kim, H.O. Fusion of multispectral and panchromatic satellite images using the curvelet transform. IEEE Geosci. Remote Sens. Lett. 2005, 2, 136–140.

- Otazu, X.; González-Audícana, M.; Fors, O.; Núñez, J. Introduction of sensor spectral response into image fusion methods. Application to wavelet-based methods. IEEE Trans. Geosci. Remote Sens. 2005, 43, 2376–2385.

- Burt, P.J.; Adelson, E.H. The Laplacian pyramid as a compact image code. IEEE Trans. Commun. 1983, 31, 532–540.

- Wang, W.; Chang, F. A multi-focus image fusion method based on Laplacian pyramid. J. Comput. 2011, 6, 2559–2566.

- Jiang, Y.; Wang, M. Image fusion using multiscale edge-preserving decomposition based on weighted least squares filter. IET Image Proc. 2014, 8, 183–190.

- Fattal, R.; Agrawala, M.; Rusinkiewicz, S. Multiscale shape and detail enhancement from multi-light image collections. ACM Trans. Graph. 2007, 26, 51.

- Hu, J.; Li, S. The multiscale directional bilateral filter and its application to multisensor image fusion. Inf. Fusion 2012, 13, 196–206.

- Bennett, E.P.; Mason, J.L.; McMillan, L. Multispectral bilateral video fusion. IEEE Trans. Image Proc. 2007, 16, 1185–1194.

- Li, S.; Kang, X.; Hu, J. Image fusion with guided filtering. IEEE Trans. Image Proc. 2013, 22, 2864–2875.

- Joshi, S.; Upla, K.P.; Joshi, M.V. Multi-resolution image fusion using multistage guided filter. In Proceedings of the Fourth National Conference on Computer Vision, Pattern Recognition, Image Processing and Graphics (NCVPRIPG), Jodhpur, India, 18–21 December 2013; pp. 1–4.

- Padwick, C.; Deskevich, M.; Pacifici, F.; Smallwood, S. WorldView-2 pan-sharpening. In Proceedings of the ASPRS 2010 Annual Conference, San Diego, CA, USA, 26–30 April 2010.

- Kim, Y.; Lee, C.; Han, D.; Kim, Y.; Kim, Y. Improved additive-wavelet image fusion. IEEE Geosci. Remote Sens. Lett. 2011, 8, 263–267.

- Zhang, D.-M.; Zhang, X.-D. Pansharpening through proportional detail injection based on generalized relative spectral response. IEEE Geosci. Remote Sens. Lett. 2011, 8, 978–982.

- Meng, X.; Shen, H.; Li, H.; Yuan, Q.; Zhang, H.; Zhang, L. Improving the spatial resolution of hyperspectral image using panchromatic and multispectral images: An integrated method. In Proceedings of the 7th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Tokyo, Japan, 2–5 June 2015.

- Bro, R.; De Jong, S. A fast non-negativity-constrained least squares algorithm. J. Chemom. 1997, 11, 393–401.

- Wald, L.; Ranchin, T.; Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 1997, 63, 691–699.

- Rahmani, S.; Strait, M.; Merkurjev, D.; Moeller, M.; Wittman, T. An adaptive IHS pan-sharpening method. IEEE Geosci. Remote Sens. Lett. 2010, 7, 746–750.

- He, X.; Condat, L.; Bioucas-Dias, J.; Chanussot, J.; Xia, J. A new pansharpening method based on spatial and spectral sparsity priors. IEEE Trans. Image Proc. 2014, 23, 4160–4174.

- Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Proc. Lett. 2002, 9, 81–84.

- Wald, L. Quality of high resolution synthesised images: Is there a simple criterion? In Proceedings of the Third Conference on Fusion of Earth Data: Merging Point Measurements, Raster Maps and Remotely Sensed Images, Sophia Antipolis, France, 26–28 January 2000; pp. 99–103.

| Evaluation Index | Definition | Meaning |
|---|---|---|
| CC [18,32] | | the bigger the better |
| UIQI [35] | | the bigger the better |
| RMSE [18] | | the smaller the better |
| ERGAS [18,34,36] | | the smaller the better |
| SAM [34] | | the smaller the better |
| Proposed MCC | | the bigger the better |
| Proposed MUIQI | | the bigger the better |
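For reference, the standard indices in this table can be computed as below for a fused image and a reference image, both stored as `(bands, H, W)` arrays. This is a sketch using common textbook forms, not necessarily the exact definitions used in this paper: CC is averaged over bands, UIQI is evaluated over a whole band rather than with the sliding window of Wang and Bovik [35], ERGAS assumes a PAN-to-MS pixel-size ratio of 1/4 (`ratio=0.25`), and SAM is reported in degrees. The proposed MCC and MUIQI are not covered, since their definitions are not reproduced in this section.

```python
import numpy as np


def cc(fused, ref):
    """Band-averaged Pearson correlation coefficient (ideal value 1)."""
    return float(np.mean([np.corrcoef(f.ravel(), r.ravel())[0, 1] for f, r in zip(fused, ref)]))


def rmse(fused, ref):
    """Root-mean-square error over all bands and pixels (ideal value 0)."""
    return float(np.sqrt(np.mean((fused - ref) ** 2)))


def ergas(fused, ref, ratio=0.25):
    """ERGAS = 100 * (h/l) * sqrt(mean_b (RMSE_b / mean_b)^2); ratio = h/l is assumed 1/4."""
    band_rmse = np.sqrt(np.mean((fused - ref) ** 2, axis=(1, 2)))
    band_mean = np.mean(ref, axis=(1, 2))
    return float(100 * ratio * np.sqrt(np.mean((band_rmse / band_mean) ** 2)))


def sam(fused, ref):
    """Mean spectral angle in degrees between per-pixel spectral vectors (ideal value 0)."""
    dot = np.sum(fused * ref, axis=0)
    norms = np.linalg.norm(fused, axis=0) * np.linalg.norm(ref, axis=0)
    safe = np.where(norms > 0, norms, 1.0)  # avoid 0/0 for all-zero spectra
    cos = np.where(norms > 0, dot / safe, 1.0)
    return float(np.degrees(np.mean(np.arccos(np.clip(cos, -1.0, 1.0)))))


def uiqi(x, y):
    """Wang-Bovik universal image quality index over one whole band (ideal value 1)."""
    x, y = x.ravel(), y.ravel()
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(ddof=1), y.var(ddof=1)
    cxy = np.sum((x - mx) * (y - my)) / (x.size - 1)
    return float(4 * cxy * mx * my / ((vx + vy) * (mx ** 2 + my ** 2)))
```

As a quick check of the UIQI form, comparing a band with twice itself gives a luminance term and a contrast term of 4/5 each and a correlation of 1, so the index is 16/25 = 0.64.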

| Quality Index | Ideal Value | GS | PCA | AIHS | AWLP | Proposed |
|---|---|---|---|---|---|---|
| CC | 1 | 0.9370 | 0.8111 | 0.9509 | 0.9451 | 0.9508 |
| RMSE | 0 | 57.8762 | 86.2993 | 50.2334 | 53.6589 | 47.6828 |
| UIQI | 1 | 0.9129 | 0.7982 | 0.9381 | 0.9435 | 0.9500 |
| ERGAS | 0 | 2.7924 | 4.2949 | 2.4145 | 2.5517 | 2.2823 |
| SAM | 0 | 3.9072 | 6.0003 | 3.6110 | 3.5631 | 3.4877 |
| MCC | 1 | 0.9226 | 0.8546 | 0.9299 | 0.9323 | 0.9315 |
| MUIQI | 1 | 0.8869 | 0.8073 | 0.8958 | 0.8960 | 0.8975 |

| Quality Index | Ideal Value | GS | PCA | AIHS | AWLP | Proposed |
|---|---|---|---|---|---|---|
| CC | 1 | 0.9734 | 0.9739 | 0.9649 | 0.9691 | 0.9726 |
| RMSE | 0 | 9.4454 | 9.0217 | 10.5901 | 9.4798 | 8.5592 |
| UIQI | 1 | 0.9665 | 0.9715 | 0.9609 | 0.9688 | 0.9723 |
| ERGAS | 0 | 0.5842 | 0.5649 | 0.6608 | 0.5811 | 0.5163 |
| SAM | 0 | 0.7240 | 0.7766 | 0.7524 | 0.7004 | 0.6851 |
| MCC | 1 | 0.9964 | 0.9962 | 0.9960 | 0.9965 | 0.9962 |
| MUIQI | 1 | 0.9958 | 0.9956 | 0.9954 | 0.9954 | 0.9958 |

| Quality Index | Ideal Value | GS | PCA | AIHS | AWLP | Proposed |
|---|---|---|---|---|---|---|
| CC | 1 | 0.6072 | 0.4019 | 0.9308 | 0.9226 | 0.9326 |
| RMSE | 0 | 63.756 | 80.3908 | 28.3677 | 29.731 | 27.7250 |
| UIQI | 1 | 0.5959 | 0.3974 | 0.9258 | 0.9221 | 0.9317 |
| ERGAS | 0 | 4.9706 | 6.0991 | 2.1309 | 2.1918 | 2.0526 |
| SAM | 0 | 6.9835 | 9.4628 | 2.4487 | 2.4777 | 2.4206 |
| MCC | 1 | 0.7970 | 0.7454 | 0.9336 | 0.9347 | 0.9349 |
| MUIQI | 1 | 0.7159 | 0.6342 | 0.9036 | 0.9042 | 0.9054 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Meng, X.; Li, J.; Shen, H.; Zhang, L.; Zhang, H. Pansharpening with a Guided Filter Based on Three-Layer Decomposition. Sensors 2016, 16, 1068. https://doi.org/10.3390/s16071068

Meng X, Li J, Shen H, Zhang L, Zhang H. Pansharpening with a Guided Filter Based on Three-Layer Decomposition. Sensors. 2016; 16(7):1068. https://doi.org/10.3390/s16071068

Chicago/Turabian Style: Meng, Xiangchao, Jie Li, Huanfeng Shen, Liangpei Zhang, and Hongyan Zhang. 2016. "Pansharpening with a Guided Filter Based on Three-Layer Decomposition" Sensors 16, no. 7: 1068. https://doi.org/10.3390/s16071068