Abstract

Geospatial information science (GI science) is concerned with the development and application of geodetic and information science methods for modeling, acquiring, sharing, managing, exploring, analyzing, synthesizing, visualizing, and evaluating data on spatio-temporal phenomena related to the Earth. As an interdisciplinary scientific discipline, it focuses on developing and adapting information technologies to understand processes on the Earth and human-place interactions, to detect and predict trends and patterns in the observed data, and to support decision making. The authors – members of DGK, the Geoinformatics division, as part of the Committee on Geodesy of the Bavarian Academy of Sciences and Humanities, representing geodetic research and university teaching in Germany – have prepared this paper as a means to point out future research questions and directions in geospatial information science. For the different facets of geospatial information science, the state of art is presented and underlined with mostly own case studies. The paper thus illustrates which contributions the German GI community makes and which research perspectives arise in geospatial information science. The paper further demonstrates that GI science, with its expertise in data acquisition and interpretation, information modeling and management, integration, decision support, visualization, and dissemination, can help solve many of the grand challenges facing society today and in the future.

Zusammenfassung

Geoinformationsforschung: Stand der Technik, Fallstudien und Zukunftsperspektiven. Die Geoinformationswissenschaft (GI-Wissenschaft) befasst sich mit der Entwicklung und Anwendung geodätischer und informationswissenschaftlicher Methoden zur Modellierung, Erfassung, gemeinsamen Nutzung, Verwaltung, Erkundung, Analyse, Synthese, Visualisierung und Bewertung von Daten über raum-zeitliche Phänomene im Zusammenhang mit der Erde. Als interdisziplinäre wissenschaftliche Disziplin konzentriert sie sich auf die Entwicklung und Anpassung von Informationstechnologien, um Prozesse auf der Erde und Interaktionen zwischen Mensch und Raum zu verstehen, Trends und Muster in den beobachteten Daten zu erkennen und vorherzusagen sowie die Entscheidungsfindung zu unterstützen. Die Autoren – Mitglieder der Abteilung Geoinformatik im Ausschuss für Geodäsie der Bayerischen Akademie der Wissenschaften, die die geodätische Forschung und die universitäre Lehre in Deutschland vertreten – haben diesen Beitrag erstellt, um zukünftige Forschungsfragen und -richtungen in der Geoinformationswissenschaft aufzuzeigen. Für die verschiedenen Facetten der GI-Wissenschaft wird der Stand der Technik dargestellt und mit meist eigenen Fallbeispielen untermauert. Der Aufsatz verdeutlicht damit, welche Beiträge die deutsche GI-Community leistet und welche Forschungsperspektiven sich in der GI-Wissenschaft ergeben. Darüber hinaus wird gezeigt, dass die GI-Wissenschaft mit ihrer Expertise in der Datenerfassung und -interpretation, der Informationsmodellierung und dem Informationsmanagement zur Lösung vieler der großen gesellschaftlichen Herausforderungen von heute und morgen beitragen kann.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction and Motivation

Geospatial information science (in German mostly called Geoinformatik) is concerned with the development and application of geodetic and computer science methods for the modeling and acquisition, exchange, management, exploration, analysis, synthesis, visualization, and evaluation of data concerning space–time-variant phenomena related to the Earth. As a scientific discipline, it focuses on developing and adapting information technologies to understand places and processes on the Earth, to support human behavior and decision making, and to detect and predict trends and patterns in the observed data. Geospatial information technologies contribute to the mapping and analysis of the Earth and the grand challenges facing human societies such as climate change, demographic change, sustainable agriculture and forestry, environmental degradation, energy security, resource efficiency, mobility.

Geospatial information processing integrates research issues and information products of many scientific disciplines, such as photogrammetry, remote sensing, cartography, engineering geodesy, and spatial observations in geography and environmental sciences, and transforms them into structured information as well as into maps and other communication media suitable for humans. To solve these tasks, besides concepts from mathematics and physics, various subareas of computer science are adapted and extended in an engineering-oriented manner, such as computational geometry, artificial intelligence and machine learning, semantic technologies, databases, and computer graphics (see also Kutterer et al. 2020).

Advances in sensor and positioning technologies in recent years have facilitated unprecedented growth in the collection of spatially and temporally referenced data. Classical examples of big spatial data sources include aerial and terrestrial laser scanning, remote sensing imagery, and weather data. In addition, gigantic amounts of (in many cases low-cost miniaturized) sensors generate real-time data streams in everyday life in the context of the Internet of Things (IoT) (for geospatial-IoT see Sect. 8). Millions of people voluntarily contribute to the collection of geoinformation (for example in OpenStreetMap (OSM) or in citizen science projects) or share posts with a spatial reference in the social networks and thus create so-called volunteered geographic information (VGI). Tracks of various objects moving by land, sea, and air will become more and more available in the context of digital earth, smart cities, humans as sensors, citizen science, location-based services (LBS),”in-situ” geocomputing, social media, etc. VGI data encompasses many specific types of data, such as geotagged social media (Twitter, Instagram, etc.), geotagged Wikipedia pages, news articles, historical archives, location-focused online reviews, geotagged housing posts, and others that contain links between locations and interpretable information.

Location is always an available and important common property of all of these new types of information. As Goodchild (2009) already stated: “it will be possible to know where everything is, at all times.” This will result in new research topics and challenges, as Andrienko et al. (2017) predicted: “The massive volumes of collected data contain complex, yet implicit spatial, temporal, and semantic interrelations that are waiting to be uncovered and made explicit.” Craglia et al. (2012) described the concept of the “Observation Web” with observations originating from humans, sensors or numerical (environmental) simulations, and others, moving from an essentially static representation of the Earth to one that is dynamic and interactive, and more responsive to the grand challenges (see Sect. 10).

With the advent of the IoT and big data, citizens have increasingly been involved in producing and using a new type of information useful for analyzing spatial problems, and researchers and practitioners increasingly have been confronted with the task of developing methods and approaches for massive data collection, integration, and analysis in spatially explicit formats (Malczewski and Jankowski 2020). The fundamental (i.e. special) properties of spatial data—spatial dependence, spatial heterogeneity, and concepts such as location, distance, direction, connectivity, adjacency, neighborhood, proximity—need to be rethought considering IoT and big data issues. New solutions for data storage and data processing, for example for semi-structured data, or technologies for parallelization and distributed computing are gaining importance. The problem of semantic heterogeneity caused by different meanings of data, terminologies, and models needs to be solved. The analysis of these heterogeneous data and the preparation of decision-supporting statements represent an important task for geoinformatics (Kutterer et al. 2020). The investigation of methods to validate these huge amounts of data against each other as well as against official authoritative data, knowledge bases, and simulation results is necessary. The integration of these geo-observation webs with common earth observation infrastructures, and the dynamic and interactive, as well as automatic exploitation of spatio-temporal mass data variety and flows from sensors and people is a future challenge.

In the context of mobility, data from permanently measuring sensors (e.g. floating car data) plays an increasingly important role alongside 3D city models. In smart cities, measures are to be derived and implemented automatically from sensor data and social and behavioral implications need to be taken into account (see also Huang et al. 2018).

The Committee on Geodesy of the Bavarian Academy of Sciences and Humanities (formerly Deutsche Geodätische Kommission, abbreviated "DGK", https://dgk.badw.de/) represents geodetic research and university teaching in Germany. The DGK division “Geoinformatik” (https://dgk.badw.de/abteilung-geoinformatik.html) initiates and coordinates research projects, discusses future trends and scientific perspectives in geospatial information science, and maintains professional exchange. The division focuses on topics such as the acquisition of geospatial data and the derivation of digital descriptions of environmental objects at different scales and as fully automated as possible. The aim is not only to record geometric properties but also to provide descriptions of a range of different object properties (e.g. building function, terrain shapes, movement patterns, land use types, human behavior) and temporal information by automated merging and integration of geodata from different sources. The development of common data models to create (semantic) reference systems and the establishment of modern geodata infrastructures based on spatial information theory is another topic of DGK. The digital geodata collections that are emerging in large numbers and growing in size with modern sensor technologies require automatic spatial analysis methods, for example, to derive heat and energy losses in construction planning, to recognize movement patterns in mobility, or to determine damages for disaster management.

Members of the DGK division “Geoinformatik” have prepared this paper to assess the current situation and to point out future research questions and directions in geospatial science, building upon previous efforts by ISPRS (International Society for Photogrammetry and Remote Sensing) (Chen et al. 2016), ICA (International Cartographic Association) (Virrantaus et al. 2009; Meng et al. 2020), and others. We identify the scientific research challenges for the next decade and describe how we will contribute our expertise along the whole information processing chain in GI technology to tackle them.

The structure of the article is as follows: Sects. 2, 3, 4, 5, 6, 7, 8 treat research aspects of GI science in information acquisition and geometric processing, information interpretation, information modeling and management, information integration, decision support, geospatial visualization, and information dissemination. Each step along this processing chain is reflected with a brief description of the status quo and underlined by a few selected case studies, mainly from the authors. Then, we look ahead to the most important research trends of the next decade. Section 9 briefly considers relevant infrastructures and research funding to support these research issues. Finally, we address the grand challenges, from the point of view of geospatial information processing, to reach a certain consensus in the scientific community about the contributions of the GI community to these major societal challenges.

2 Geospatial information acquisition and geometric processing

2.1 Developments in data acquisition and new applications

Recent developments in sensor technology have led to a tremendous increase in spatially and temporally referenced data. Examples include aerial, satellite, but also terrestrial images, 3D point clouds from laser scanners and interferometric synthetic aperture radar (InSAR), trajectory data using e.g. portable GNSS receivers, and volunteered geographic information. Sensors are mounted on satellites, airplanes, unmanned aerial vehicles (UAVs), and static as well as mobile terrestrial platforms such as mobile mapping systems. Increasingly, multisensor systems and geosensor networks are being used for cooperative data acquisition.

In this section, we give a short description of developments in geospatial data acquisition. Since our focus is on data handling and not on sensor hardware development, we only point out major trends, without going into detail, primarily to illustrate the fact that geospatial data are big data—with the related challenges of an enormous increase in volume, variety, velocity, and veracity. In addition, while we note the tremendous importance of ubiquitous location and localization (according to Goodchild, 2019 “it will be possible to know where everything is, at all times”) we will not discuss developments of how to determine sensor position for the sakes of positioning and navigation in this section, but only to georeference geospatial data captured by the sensor in question.

Over the years, many national and supranational agencies, e.g., NASA, ESA, CNES, DLR, ISRO, JAXA, CNSA, developed civil satellite remote sensing systems. The US Landsat program, dating back to 1972, was the first source of seamless optical Earth Observation (EO) imagery suitable for certain resource mapping purposes. Since 2014, the European Copernicus program (Sentinel fleet) provides free-of-charge multispectral imagery with a very high revisit frequency and a ground sampling distance (GSD) of up to 10 m; it will continue to do so for the next decades. Other countries such as India and China have similar programs. In addition, constellations like the Pléiades Neo satellites with a GSD of 0,3 m (the first two of which were launched in April and August 2021), and the Planet constellation with more than a hundred operational satellites in orbit with a GSD in the meter range, are currently being launched, partly by private companies. Some of these satellites can capture motion in short high-resolution videos. The meter range is also the resolution of currently established radar constellations, most of which, however, seems to target the defense market (e.g., Iceye, Umbra Lab, Capella Space). For all these constellations the main goal is a high temporal resolution. In addition to the constellations, new high-resolution optical satellites have been announced, e.g., Maxar Legion with a resolution in the range of a quarter of a meter and up to 15 images of the same location per day, which increasingly blurs the separation between satellite and aerial imagery. Novel SAR missions particularly suitable for SAR interferometry like TanDEM-L (https://www.dlr.de/hr/tdml) as well as hyperspectral sensors such as the recently launched EnMAP (https://www.enmap.org/) will produce more data.

In aerial data acquisition, one observes an ongoing integration of nadir-looking sensors with image sizes of up to 400 Megapixel using oblique cameras, and partly also with laser scanners leading to colored 3D point clouds. Current laser scanning systems increasingly employ full-waveform digitization or single-photon counting techniques (Mandlburger et al. 2019), enabling them, for instance, to generate vertical vegetation density profiles in forests as a basis for biomass change quantification and to capture terrain model data at a rate of several million points per second. Aerial radar for civil applications is still a niche market.

While for a long time unmanned aerial vehicles in most cases only carried cameras, recently, laser scanners with sufficient range have become smaller and lighter and, most importantly, have been integrated with sufficiently high-precision lightweight GNSS and INS sensors. In addition, thermal and hyperspectral sensors for UAVs are available, although the geometric resolution is still limited. Concerning the availability of suitable carrier systems, one has to note that the (semi)-professional area is growing rapidly, e.g., for taking video footage of sports events or for surveillance purposes.

For several years there has been a trend towards Computational Photography (CP, Nayar 2007) in terrestrial data acquisition. These cameras often have multiple lenses: images are taken with different settings and are combined computationally. An example is plenoptic cameras, also called light-field cameras, the concept of which has been investigated for some time already (Adelson et al. 1992). Computational photography is particularly being employed in recent mobile phones. High-speed cameras at frequencies, ranging up to 1 MHz and above, are used to acquire dynamic scenes in areas such as sports, traffic monitoring, surveillance, robotics, autonomous driving, crash tests, material testing, and fluid dynamics. For terrestrial sensing the same thermal and hyperspectral sensors can be used as those mounted on UAVs, meaning that the geometric resolution is rather limited. In many cases, sensors are combined into a multisensor platform with various different cameras, laser scanners, and positioning devices carried by humans or cars; mobile mapping systems traveling in normal traffic are a particular example of this development. While mobile mapping has become a standard tool for the detailed acquisition of urban areas, the scope has been considerably widened by the interest in HD (High Definition) maps for autonomous driving. In this context, the automatic acquisition of information about the surroundings of a vehicle using sensors such as (stereo-) cameras and laser scanners is of vital importance.

For moving objects, trajectory data (3D position and 3D rotation as a function of time) are an additional data source. They are typically captured using GNSS receivers and GNSS/IMU systems, but also using cameras or laser scanners. Trajectories can be used to analyze and predict object behavior. They can also serve as an additional information source for the interpretation of scenes in which these objects are depicted, e.g. using a camera or laser scanner.

Given all these developments in data acquisition, one can expect more detail concerning geometry, semantics, extent and time in the future. For instance, city models will be fully 3D and will contain window and door objects for the façades, possibly with mullions and transoms (horizontal and vertical bars), but also objects for stairs, balconies, dormers, chimneys, air conditioners, and more generally, street furniture and vegetation objects. Data from all platforms mentioned before will be fused and used in combined approaches to reach these goals.

Reconstruction for Building Information Models (BIMs) (see Sect. 4) goes further by considering, besides the interior of buildings, the semantics and the geometry of the parts they are constructed of, and possibly, also the construction process. Models of interior spaces will become common for shopping centers, but also for large public buildings such as railway stations, town halls, or museums. Smooth navigation from indoors to outdoors and vice-versa is a particular problem in this regard.

In addition to the more detailed geometry and semantics, scenes to be observed and models to describe these varying scenes will be more and more dynamic, opening up possibilities to also model, observe and understand processes of all kinds, e.g. landslides and other geomorphologic changes on the Earth surface, but also in sports or when observing public places. This pertains to the interior spaces of shopping malls but even more so to information related to traffic and other applications in which image sequences are commonly used.

Land use is another area where temporal dynamics are important. Utilizing the capabilities of the satellite constellations, a much higher acquisition frequency and, thus, a much more detailed analysis of the temporal dynamics is possible, also leading to higher update rates. Such results are required not only by agriculture and forestry but in particular, also for determining indicators to monitor progress in achieving the UN Sustainable Development Goals (SDG, https://sdgs.un.org/goals). Concerning land use, additional radar missions will be helpful, particularly for areas that are often clouded such as rain forests. In addition, advanced radar capabilities will be particularly useful for surveillance applications where all-weather capabilities are essential and higher geometric and temporal resolutions allow for a more detailed analysis.

An emerging field is a perception of autonomous driving. Besides ego-motion, the detection and pose estimation of other road users such as cars, cyclists, and pedestrians are essential for path planning and accident avoidance and support collaborative positioning of vehicles in GNSS-denied areas (Coenen and Rottensteiner 2019). It is also important to generate this information over time by analyzing time series, which involves tracking based on physical models of the movement of these objects over time, potentially considering interactions between different road users in a scene (Leal-Taixé et al. 2017).

2.2 State of the Art and Case Studies in Geometric Processing

Geometric processing comprises calibration and synchronization of single sensors and sensor systems, the determination of sensor orientation (pose), the determination of (potentially highly accurate) 3D point coordinates, the 3D reconstruction of scenes, and object rendering including orthoprojection. In our context, we also consider motion models, both for sensor movement (e.g. when dealing with line sensors) and for temporal changes in object space (e.g., when determining scene flow), as part of geometric processing. Note that the determination of ego-motion, as a core requirement in navigation (e.g., using GNSS/IMU systems as the only sensor), is not treated in this section.

Optical 3D measurement techniques (in the past often referred to as close-range photogrammetry) have found a huge market potential in industrial measurement tasks, where they are applied in manifold design, manufacturing, and quality control processes. Herein, photogrammetry provides, among others, sensor modeling and self-calibration techniques allowing to achieve measurement accuracies beyond 1:100,000 of the object dimensions using off-the-shelf cameras. Image engineering techniques such as structured light approaches allow for real-time 3D measurement systems with high spatial and temporal resolution.

2.2.1 Case Study “3D Surface Reconstruction Using Images from the Ground and UAVs”

Images from different sources (see Fig. 1) such as from the ground and small UAVs allow for a detailed 3D reconstruction of the roof as well as the façades of buildings. Michelini and Mayer (2020) present an approach for the automatic orientation of unsorted images which can deal with the wide baselines occurring in this study. The orientations are the basis for pairwise image matching leading to per-pixel depth maps. These maps are combined based on their estimated accuracy using a probabilistic volumetric approach (Kuhn et al. 2017) leading to a scalable high-quality 3D reconstruction.

2.2.2 Case Study “Uncertainty Estimation of 3D Surfaces”

While dense stereo matching methods have made great progress over the last few years, there is a need for self-diagnosis, i.e. to identify erroneous disparity estimates in the results. Based on probabilistic convolutional neural networks, Mehltretter (2021) presents a new method for the estimation of aleatoric and epistemic uncertainty (corresponding to stochastic and systematic uncertainty). Instead of relying on features learned from disparity maps only, the corresponding 3D cost volumes are employed. For aleatoric uncertainty estimation, a novel convolutional neural network architecture is presented that is trained with different stochastic models that follow the concept of Bayesian deep learning. The quantification of epistemic uncertainty is realized using a Bayesian neural network trained with variational inference. Figure 2 shows the design of the employed network. The results demonstrate that the models used to estimate aleatoric uncertainty outperform state-of-the-art methods. Moreover, the usage of a Bayesian neural network not only allows for epistemic uncertainty estimation but also supports the task of dense stereo matching itself, reducing the number of errors contained in the disparity maps.

Deep learning network for the joint estimation of aleatoric and epistemic uncertainty in dense stereo matching (Mehltretter 2021)

2.2.3 Case Study “Material Testing”

The detection of cracks in probes and the quantitative determination of crack patterns is an important task in material testing. Compared to conventional techniques such as inductive displacement transducers, inclinometers, and strain gauges, which deliver only pointwise measurements, cameras offer the crucial advantage of allowing simultaneous measurements at many locations in an image. Cracks can be detected in monocular image sequences by applying a cascaded image sequence processing chain (Hampel and Maas 2009). Herein, a dense pattern of feature points is tracked by least-squares image matching, yielding subpixel accuracy motion vector fields. These vector fields are analyzed for significant discrepancies, depicting locations of cracks. Metric crack width information can be derived from the discrepancies by a thorough geometric analysis of triangle meshes with the matching points as vertices (Liebold and Maas 2020). The technique can detect cracks with a width in the order of a tenth of a pixel, and it delivers full-field measurements of complete complex crack patterns (see Fig. 3).

Crack pattern of a strain-hardening cement-based composite probe in a tension test, visualized by color-coding and height of the prism cells for better visual interpretability (Liebold and Maas, 2020)

The spatial resolution of the technique is mainly defined by the sensor size of the camera, allowing for the detection of complex crack patterns with ca. 100 cracks when using a high-resolution camera. The temporal resolution may reach 100 kHz and beyond. Liebold et al. (2020) applied the technique for the determination of crack propagation velocities in a concrete probe. Image sequences were taken by a high-speed camera at a frame rate of 160,000 images per second. By applying the cascaded image sequence processing procedure to these datasets, crack propagation velocities of about 800 m/s could be determined with a precision in the order of 50 m/s.

A logical future extension of the crack detection and crack characterization techniques shown here is in a transition from 2D image sequences to multi-temporal 3D micro-tomography data. As soon as suitable instruments are available, tomography voxel data acquisition may be integrated into the material testing process.

2.3 Research Perspectives

This section focuses on problems for which the process of solving a problem and finding a solution is not only difficult but also conceptually unclear. This is true for images produced by computational photography, which are usually generated for visual inspection by black-box algorithms. It is currently not known how and even if they can be used for higher accuracy photogrammetric reconstruction, especially if different viewpoints are used. Another challenge is the combination of low-resolution data such as hyperspectral images with high-resolution data in the form of images or 3D point clouds. This is already done for satellite data, but much more high-resolution data from UAVs or the ground lead to additional complications due to the detailed 3D geometry with the associated occlusions.

While traditional flight planning for aerial acquisition is based on regular structures such as grids, a number of challenges come with automating detailed 3D acquisition by means of UAVs in urban areas: as data are more and more acquired in fully autonomous mode, they can be processed in real-time and on-board, opening up possibilities for a more flexible, yet physically feasible, planning of flight paths considering obstacle avoidance, and for online checking of the completeness of the resulting 3D models despite occlusions, incl. instantaneous acquisition of missing image data. A related topic is that of parallel geometric processing, e.g. for bundle adjustment (Mayer 2019).

A particular challenge arises in the overlap between photogrammetry and robotics, i.e., when results are required in real-time, e.g., for docking maneuvers, in autonomous driving, in traffic monitoring, or surveillance. Here, cooperative approaches (Molina et al. 2017; Schön et al. 2018) and swarm processing are considered promising.

Finally, finding robust solutions from image and sensor orientation, as well as dense 3D reconstruction, also as a function of time, remains difficult in scenes with poor or repetitive texture, critical geometric configurations, and/or large depth discontinuities. Examples for challenges in image orientation include the determination of image overlap, e.g., for image sets downloaded from the internet, images taken with a significant temporal difference, or in different parts of the electromagnetic spectrum (e.g., optical and thermal data). Progress has been made for orientation (Kendall et al. 2015) as well as 3D reconstruction (Kendall et al. 2017) by learning matching functions for points (Jin et al. 2021) and shape priors for surfaces. One can expect that the separation between geometry and interpretation (discussed in the next section) will be slowly eroding and at least implicitly more and more information about the specific situation and, therefore, semantics will be included in the up-to-now purely geometric part of photogrammetric image processing. This could be particularly helpful for dynamic scenes and very different viewing directions, whereas for fast-moving objects it might be difficult to track and/or match individual points or patches due to distortions and (self-)occlusions while the objects stay the same. In this respect, also the introduction of motion models to regularize the solution will be helpful.

3 Geospatial Information Interpretation

3.1 State of the Art and Case Studies

The core task of automating interpretation lies in the mathematical modeling of topographic or, more generally speaking, scene information, as well as its behavior over time, in combination with its appearance in the data. Topographic information comprises objects such as buildings, roads, and vegetation but also broader categories like land use. Considering other applications, all kinds of objects such as cars, bicycles, pipelines in industrial plants, animals, and persons are of concern. Data range from color to thermal and hyperspectral images to LiDAR and Radar acquired by any of the platforms mentioned before.

While object and scene knowledge necessary for automatic interpretation has been directly encoded by humans for a long time, in recent years there is a strong tendency towards employing methods based on learning by examples. It should be noted that in satellite image classification such machine learning approaches have been used from the beginning; the examples to learn from are training data, which encode the main body of object and scene knowledge, albeit in implicit form. The main advantage of this strategy is that by providing new training samples these methods can be transferred to new datasets or new geographic areas relatively easily.

Concerning methodologies and strategies for learning, there is a strong trend from traditional statistical methods, particularly, graphical models such as Markov Random Fields (MRF) and Conditional Random Fields (CRF), which are now often only used for post-processing, towards all kinds of neural networks. Having been initiated by the large success of convolutional neural networks (CNN) for classifying images just showing one object of relevance (Krizhevsky et al. 2012), they have also been demonstrated to outperform other classifiers in remote sensing applications, partly by a large margin, if a sufficient amount of representative training data is available (Zhu et al. 2017b).

For data with a regular topology such as images, different variants of CNN have been devised, leading to strongly improved results for certain areas. Multiple general ways to improve learning and, particularly, to make better use of the training data have been proposed. For instance, one can focus the acquisition of training data with reinforcement learning, making clear where deficits exist in the results. While this can be efficient, the success depends on the availability of the human operator generating additional intended results, and such approaches are hard to benchmark as the performance can always be improved by another round of training.

3.1.1 Case Study “Generation of LoD3 3D Shell Model”

Images from the ground and small UAVs, as well as the derived orientations and 3D mesh (see Fig. 1), allow for a consistent reconstruction of whole buildings on the Level of Detail 3 (LoD3): The roof is reconstructed from images from the UAV and the façades including windows and doors from images from the ground. The shell model (Huang et al. 2019) consists of surfaces with a thickness and integrates the roof including overhang and the façades semantically and geometrically (see Fig. 4). This description is derived from the 3D mesh; windows and doors are added as holes. The former is determined from images from the ground projected on the façade planes using a CNN. While the 3D mesh consists of half a gigabyte of data, the shell model just needs a couple of tens of kilobytes.

Untextured 3D mesh model (left; see also Fig. 1) and derived LoD3 shell model with roof overhang and holes for windows and doors (right)

3.1.2 Case study “Scene Flow”

Scene flow estimation provides valuable information about the dynamic nature of our 3D environment. In particular, the 3D scene flow field comprises all 3D motion vectors of a densely reconstructed 3D surface model, which is moving with respect to the camera. In their method termed object scene flow, Menze et al. (2018) propose a unified random field model which reasons jointly about 3D scene flow as well as the location, shape, and motion of vehicles in the observed scene. The problem is formulated as the task of decomposing the scene into a small number of rigidly moving objects with attached motion parameters. The inference algorithm then estimates the association of image segments and object hypotheses together with their 3D shape and motion. Figure 5 depicts a sample result of the work.

Result for object scene flow (Menze et al. 2018). The reference image is superimposed with optical flow results and the reconstructed objects

3.1.3 Case Study “Domain Adaptation for Image Classification”

Wittich and Rottensteiner (2021) address domain adaptation for the pixel-wise classification of remotely sensed data using CNNs as a strategy to reduce the requirements with respect to the availability of training data. The method is based on adversarial training of an appearance adaptation network (AAN) that transforms images from the source domain such that they look like images from the target domain. A joint training strategy is proposed for the AAN and the classifier, which constrains the AAN to transform the images such that they are correctly classified. A specific regularization term for the discriminator network required for adversarial training helps to prevent the AAN from learning trivial solutions. Using high-resolution digital orthophotos and height data the method on average improved the performance in the target domain by 4.3% in mean F1 score. Sample results of this method are shown in Fig. 6.

Result of deep domain adaptation using the method of (Wittich and Rottensteiner 2021). From left to right: Classification of source domain image, source domain image, source images transformed to the target domain by the AAN, target domain image, classification of target domain image

3.1.4 Case Study “Urban Tree Recognition in Airborne Laser Scanning Point Clouds with Deep 3D Single-Shot Detectors”

To apply CNN for airborne laser scanning (ALS) data processing, often in the first step the irregularly spaced 3D point coordinates and their features are mapped into a voxel grid. However, usually, large parts of the volume are occupied by free space. In addition, due to the sensor principle, ALS data are restricted to capture echoes of those object boundaries which can be reached by the laser signal, whereas the interior volume cannot be accessed. Consequently, such a voxel space is in general only sparsely populated. Therefore, standard convolution schemes are not efficient and lead to a blurring of details, because each 3D point is spread by the filter’s impulse response. Remedy are so-called 3D submanifold sparse convolutional networks that avoid such undesired widening by omitting certain parts of the input (Graham et al. 2018). Schmohl et al. 2021 use such a network as a sparse 3D backbone for feature extraction in a framework tailored to detect single urban trees (see Fig. 7). This vegetation class enjoys steadily rising interest, for example, for purposes like improvement of locale climate or enrichment of biodiversity. Unfortunately, official tree cadasters are often limited to the public ground only, ignoring the large share of urban trees in backyards and private gardens. To detect as many trees as possible, the mentioned 3D backbone is followed by a detection approach, which eventually delivers the height and crown diameter of individual trees.

Deep learning network for scene classification and subsequent single tree detection (Schmohl et al. 2021)

3.2 Research Perspectives

Geospatial data interpretation has recently profited significantly from artificial intelligence methods in general, and deep learning methods in particular. This development has opened up unprecedented possibilities for new applications, e.g. in combining human interaction and computing performance for active learning, in fusing drastically different images such as terrestrial and aerial views and integrating deterministic physical models with neural networks (Tuia et al. 2021). At the same time, several problems which await sound solutions have become apparent.

A general issue is that the internal decision-making process of deep learning methods is not well understood; they behave very much like black boxes, and there is no theoretically sound strategy for designing deep learning methods for specific applications; instead, mostly adhoc design methods are employed today, starting from some existing architecture that has proven to work well for similar problems. How to explain the behavior of deep learning methods is, thus, an important research question (Roscher et al. 2020).

Another question is how to infer causality from correlation (Pérez-Suay and Camps-Valls 2019). The challenge consists of combining advanced learning methodologies and strategies with a good understanding of the objects, spatial and semantic relations as well as interactions over time, to provide a capable but also ideally provably robust system for interpretation. The problem is aggravated in remote sensing applications due to the large variability of sensors that are used.

A major challenge for the approaches based on learning by example is to provide knowledge via training data, which is suitable for the problem. To improve the generalization capabilities, one has to make sure that the major aspects necessary for interpretation are included in the training data, e.g., the complete spectrum of objects to be interpreted. This all means that there is a new basic challenge: How can one provide training data which contains the relevant variation of the objects and their interactions?

There are large databases of annotated terrestrial RGB images such as ImageNet (Russakovsky et al. 2015) which form a good basis for training classifiers that generalize well for a large variety of applications but are restricted to the object types shown in these images and to the type of sensor used for acquiring them. No datasets of a comparable size exist for remote sensing data, let alone for sensors such as airborne laser scanners or hyperspectral cameras. A new ISPRS benchmark sheds some light on this matter (https://ifpwww.ifp.uni-stuttgart.de/benchmark/hessigheim/default.aspx).

Recent research, therefore, focuses on making better use of self-supervising approaches. A particularly promising way is to employ generative adversarial networks (GANs) for the generation of realistic training data to extend the coverage of the natural variations of objects or scenes. GANs can also be used to learn from non-matching input and output. For instance, mappings between maps and images of the same region can be learned without one-to-one relations.

In particular, in topographic applications, one can make use of existing maps to generate the training labels for new images. In this context, the training technique has to cope with erroneous labels (e.g., due to changes in land cover), so-called “label noise”. Kaiser et al. 2017 have shown that using large amounts of such partly wrong training samples can reduce the requirements for high-quality hand-labeled data in training a CNN. For label noise, robust methods for training can provide good results even without any hand-labeled data, which has been shown for random forest classifiers (Maas et al. 2019). These principles are currently being transferred to the domain of deep learning (Voelsen et al. 2021).

Another very promising strategy is multitask learning (Kendall et al. 2018), where several tasks of possibly different complexity can support each other. In transfer learning and domain adaptation, one learns from previous applications, even when these are only weakly related (Tuia et al. 2016); one of the challenges is to understand how similar the different domains must be for a successful application of domain adaptation, and how this similarity can be measured. In this context, GANs can also be applied to learn a representation of the images so that the resultant features have similar distributions for images from different domains (Wittich and Rottensteiner 2019), or to learn how to adapt the appearance of images from one domain so that they look like images from the other domain (Wittich and Rottensteiner 2021; cf. Sect. 3.1).

The availability of satellite data with high revisit times, e.g. Sentinel-2, has triggered interest in the processing of time series. In this context, recurrent neural networks (RNN) have been used, e.g. for building detection (Maggiori et al. 2017). Long short-term memory (LSTM) architectures were developed to avoid common problems in training, e.g. vanishing gradients (Hochreiter and Schmidhuber 1997), and they have been adopted for the classification of remote sensing data from multiple epochs, e.g. for change detection (Mou et al. 2019) or for crop classification based on time series (Rußwurm and Körner 2018). An alternative is to employ self-attention mechanisms, typically embedded in transformer architectures (Vaswani et al. 2017), which is a more general representation than convolutional network layers (Cordonnier et al. 2020). Such architectures can help to suppress irrelevant observations in time series, as was shown by Rußwurm and Körner (2020): They also reach competitive results in other computer vision tasks (Dosovitskiy et al. 2021; Liu et al. 2022). The achievable geometric accuracy and the amount of supervision required for learning such models, in particular if a pixel-wise classification is to be determined for every time step, still seems to be unclear.

An additional basic question consists in how far geometric and physical modeling is still appropriate. In principle, everything could be learned by just linking the acquired input data to the intended result. While this could be helpful in certain areas with a weak understanding of the problem, such as how to model a tree as seen from the ground with different levels of mutually occluding branches and leaves with a very complex reflection function, experience shows that using proven models as priors tends to improve and stabilize results (see Tuia et al. 2021 and the references therein for examples).

It also has to be noted that learning approaches focus on a semantic interpretation of the input data, but in general do not aim at a 3D reconstruction of the geometric shape of the objects. Some recent examples have tried to support 3D reconstruction by machine learning, e.g., for roof reconstruction (Wichmann et al. 2018) or cars (Coenen and Rottensteiner 2019). It is anticipated that this common treatment of semantics and geometry will increasingly be used and will yield better results.

Similar arguments hold true for dynamic scenes. A general question relates to the usefulness of explicit motion models for objects to be detected and tracked, incl. models for object behavior, which are necessary to predict future situations, e.g. in traffic scenarios, and the interaction between different objects. Also, it is unclear, what the benefits are of modeling not only the behavior of individual objects but the whole scene, in general, as a function of time.

In contrast to raster images, 3D point clouds are irregularly distributed spatial data. To extract implicit neighborhood relations for each point, usually, local features are calculated to enable subsequent segmentation or classification (Weinmann et al. 2015). In addition, for point clouds, substantial progress has been achieved based on deep learning (Griffith and Boehm 2019) employing one of the following processing strategies: (i) use of hand-crafted features in a single- or multi-branch 1D CNN, (ii) projection of the point cloud onto planes which are fed into a standard 2D CNN for images, and (iii) discretization of the point cloud to 3D voxel space, where 3D convolutions take place. A general bottleneck is the lack of sufficient labeled ground truth data for training and validation of such approaches. Furthermore, there is also progress for neuronal networks based on general graph structures (Nassar et al. 2020), also for the direct segmentation of 3D point clouds.

Finally, it will always be necessary to optimize functions. While classical least-squares adjustment or Kalman and Particle Filters allow for a high precision, sampling strategies such as RANdom SAmple Consensus (RANSAC) or Markov Chain Monte Carlo (MCMC) and their many variations are more robust but need to be chosen in a way reflecting the error structure of the problem. The training of CNNs requires the minimization of a loss function. For that purpose, variants of stochastic gradient descent are usually applied. The impact of the chosen optimization procedure on the results has hardly been investigated so far. More work has been spent on designing task-specific loss functions to be optimized, but problems remain, for instance, the way to deal with extremely unbalanced class distributions of the training data, which frequently occur in land cover classification or the development and application of appropriate strategies for regularization to avoid overfitting. Finally, the question arises if and how a combination of robust sampling strategies and CNNs could lead to considerably more robust approaches for automatic interpretation.

4 Geospatial information modeling and management

Goodchild (2009) already formulated the goal “imagine the possibility of a world of real-time geographic information”. Today, due to IT and sensor developments during the last decade, we are on the way towards realizing this vision. The challenges presented in the former sections and their consequences to geospatial information modeling and management – driven by expected more data and extremely detailed representations concerning geometry, semantics, extent and time for all kinds of sensors – will lead to new challenges for information modeling and management. In the coming decade, geospatial information modeling and management will have to be adapted to these new requirements.

4.1 State of the Art

A common approach in geographic data science is the integration of multiple data sets characterized by different spatial and temporal references, at multiple scales and resolutions (Andrienko et al. 2017). This means that information integration is a central issue bringing together different “dimensions” such as space dimension (2D, 2.5D, full 3D objects: maps and digital terrain models as well as full 3D geological subsurface models and full 3D city models), spatial scale (resolution), and temporal scale (resolution). We will focus on different levels of information integration in Sect. 5. Before integrating data, however, data has to be modeled and managed in a formalized way. A typical example, showing two different ways of spatial data modeling which have been established for decades, are the domains of geographic information systems and Computer Aided Design (CAD). In the following, these are generally referred to as geospatial information modeling (GIM) and Building Information Modeling.

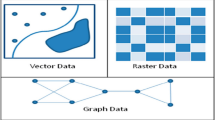

Geospatial information modeling denotes the digital modeling method for space-related phenomena of the real world. It is characterized by multidimensional descriptions of geospatial features by location and orientation in a spatial reference system (SRS), object model, and field model (implemented as vector and raster data formats), and has been extended to spatio-temporal models (Erwig et al. 1999; Güting and Schneider 2005). Seen from a historical point of view, in GIM geometric, topologic, and semantic modeling of objects have always been treated as one unit. GIM is used as digital documentation of real-world states and can be applied to a variety of spatially related questions (Herle et al. 2020). Due to the rapid development of information and communication technology as well as advances in the methods and degrees of automation of data acquisition, it has become possible to capture geodata in their 3D or 4D extent and thus to create a very realistic virtual image of the real world in digital form.

Organizations such as the International Organization of Standardization (ISO), with the ISO 191xx family, and the Open Geospatial Consortium (OGC), promote standards for better interoperability, including data models (from simple feature model to CityGML, IndoorGML, Land Administration Domain Model (LADM), and Land and Infrastructure Conceptual Model (LandInfra)), exchange formats and languages (such as Geography Markup Language GML) and service specifications (the OGC Web Services such as Web Map Service (WMS), Web Feature Service (WFS), Web Processing Service (WPS), etc.). The resource-centric OGC API family of standards are being developed to make it easy, especially for machines as well as for anyone, to provide geospatial data to the web.

GIS research, development, and implementation are largely based on CityGML, first adopted as version 1 in 2008 and recently released as version 3.0 (https://docs.ogc.org/is/20-010/20-010.html) in September 2021. CityGML 3.0 is an OGC international standard for modeling, storing, and exchanging semantic 3D city models. In CityGML, buildings, terrain, vegetation, street furniture, urban object groups, water bodies, roads, tunnels, bridges, and land use can be represented semantically and geometrically, with the construction module being new (see Fig. 8). In addition to these eleven thematic modules, six modules are defined that are applicable to all thematic modules. Besides the CityGMLCore, Appearance and Generics, which were already available in earlier CityGML versions, the modules Dynamizer, Versioning and PointCloud have been added.

CityGML 3.0 module overview. The vertical boxes show the different thematic modules. Horizontal modules specify concepts that are applicable to all thematic modules (Kutzner et al. 2020)

The conceptual schema of CityGML specifies how and into which parts and pieces physical objects of the real world should be decomposed and classified. All objects can be represented with their semantics, 3D geometry, 3D topology, and appearances information. The objects can further be represented using five predefined levels of details (LoD 0–4 with increasing accuracy and structural complexity). The relevant city objects are defined using the Unified Modeling Language (UML); CityGML 3.0 GML Encodings specify an XML schema for the file exchange format. With the construction of large statewide or municipal city models, CityGML has found an important role in the orchestra of OGC standards and is a good base for GI research and development.

BIM and GIM technologies have different origins and come from different domains. In civil engineering and architecture, a shift from purely constructional data handled by computer-aided design software towards BIM is currently taking place. This means that the realization of the joint modeling of geometry and semantics has arrived in BIM two decades later than in GIM, still not considering topology as an independent concept besides geometry. BIM (using Industry Foundation Class (IFC) (ISO 16739 2013) by OpenBIM) supports consistent and integrated modeling of all data for specific construction and during its life cycle (Borrmann et al. 2015). BIM and GIM modeling views are complementary to each other. BIM geometry modeling is based on constructed solid geometry (CSG) (Mäntylä 1988), GIM – for instance, CityGML as one of the most relevant standards of OGC for city models—uses boundary representations (B-Rep). Whereas BIM is applied in planning processes for constructing buildings and other structures, GIM is used to model geospatial features of the real world with an application-dependent accuracy (Fig. 9). GIM usually is applied for applications such as heat spread modeling in cities whereas BIM focuses on applications such as facility or energy (heating/cooling) simulations in single buildings which can be up-scaled to urban scale (Geiger et al. 2019). Both concepts are mature and are applied by various industries. Different approaches are investigated to achieve interoperability between GIM and BIM models (Herle et al. 2020).

Information modeling also deals with the development of ontologies at different levels of generality and formality, tailored to various needs and uses (Kokla and Guilbert 2020). Kuhn (2005) defined geospatial semantics as “understanding GIS contents, and capturing this understanding in formal theories.” Geospatial semantic modeling and ontologies refer to the meaning of things (Hu 2018).

In the realm of spatial data, linked data and GIS are two separate paradigms, representing different approaches for data representation and exchange. Recently, the volume of data with spatial reference in the Linked Open Data (LOD) cloud has been on the rise. Whereas in GIS the main focus is on data for analysis by humans, LOD is structuring information in a format meant for machines. If all datasets were openly published and used the same format for structuring information, it would be possible to interrogate all of these datasets at once. Analyzing huge volumes of data is potentially much more powerful than everyone using their datasets dotted around the web in what is known as information silos. These interoperable datasets are what LOD practitioners are working towards (Iwaniack et al. 2016). Time and space referencing are the simplest methods for structuring such data and providing the context for interpretation. This is one of the reasons for perceiving linked open data as one of the most important approaches for geographic information publication and consumption on the web. It provides new means for sharing, accessing, and integrating geoinformation and holds a promise of changing ways, in which GI developers and analysts solve their problems.

According to Andrienko et al. (2017) “one of the most challenging problems in geographic data science is the need to assess the data quality, suitability, and distribution of the data available for analysis.” The heterogeneity of the real world, different technologies for data acquisition and processing, database management tools and platforms lead to a large amount of duplicated, inconsistent, ambiguous, and incomplete spatial data. Thus, spatial data quality and uncertainty is an increasingly important issue in geographic information science. Uncertainty and data quality modeling is an unavoidable part of spatial data due to an approximation of real-world phenomena. The influence of uncertainty may be visible in the form of original data and measurement, assumptions, or in the model structure (Bielecka and Burek 2019).

To allow persistent use of data and objects modeled according to GIM and BIM, geospatial information is maintained in spatial database management systems (SDBMS). SDBMS are optimized to store and query data that represent objects defined in a geometric space. They define special data types for geometric objects and allow geometric data (usually of a geographic nature) to be stored either in regular database tables or in non-tabular databases. Besides spatial data types, SDBMS provide special functions and indexes for efficient querying (Güting 1994) and manipulating that data using declarative query languages such as the Structured Query Language (SQL) or others. In the beginning, attribute data were stored separately from the geometry in relational database management systems (RDBMS). With the emergence of object-oriented thinking, complex data types and relations between geometry and topology as well as attributes were treated in object-oriented database management systems (OODBMS) (Balovnev et al. 2004). Object-relational database management systems (ORDBMS) combine both approaches, the relational world, and the object world, and are standard today (Thirunavukkarasu and Wadhwa 2016). These spatial database management systems support at a minimum complex data types, spatial data within related tables—feature classes, feature datasets, validation rules—subtypes and domains, spatial metadata, spatial reference systems and transformations, topologies and methods for analyzing spatial relationships, a spatial query language for query and search, and spatial indexing to improve the performance (Jitkajornwanich et al. 2020).

More types of databases are approaching the market for specific purposes, which are also of interest for geospatial problems: NoSQL databases (not only SQL—more than tables, document-oriented, graph databases) and XML databases (semi-structured data described in XML) are used in many disciplines beyond GIM and BIM (Lee et al. 2012). Furthermore, Content Management Systems (non-structured data, e.g. documents, arbitrary texts, graphics) and multimedia databases (imagery/video/mass data etc.), are well suited to manage the context of geospatial data. Finally, in-memory databases (running in the RAM of the computer, Continuous Query Language), data stream management systems (DSMS) (streams of sensor data, video, audio), and array databases (Baumann 1994) are seeing a revival (Baumann et al. 2021) as there is an increasing need of providing analysis-ready data (Baumann et al. 2018).

4.2 Case Study and Research Perspectives

4.2.1 Case Study “Urban Digital Twins”

Based on the most recent ICT and its protocols a huge variety of 3D/4D and real-time data sources need to be integrated to model our environment. These data, collected by means of both, physical and social sensing, together with modern remote sensing technologies, define what is increasingly called the “digital twin” of a city, available for real-time geoinformation processing. Urban situations should be simulated and analyzed with highly sophisticated mathematical methods for different purposes. Huge amounts of different data need to be stored, maintained and processed in a distributed and parallelized manner. Decision makers, government and the public should be involved, which asks for advanced methods of information visualization and dissemination (see Sects. 7 and 8). It facilitates the integration of urban geodata for a variety of applications for smart cities and urban digital twins, including urban and landscape planning, smart energy, transport and mobility, infrastructure, and others. Li et al. (2020) integrate these research issues in an emerging real-time GIS platform for smart cities that is designed for the acquisition, storage, analysis, and visualization of geospatial data in real time (see Fig. 10). This real-time GIS needs to support high throughput and high-speed processing of large GIS data streams, being location-sensitive, of high temporal granularity, and being generated continuously from sensing devices that collectively comprise the IoT.

A smart city enabled by real-time GIS (adapted and extended from Li et al. 2020)

4.2.2 Research perspectives

Picking up the challenges introduced in the former sections, we now outline the consequences for geospatial information modeling and management in detail. Obviously, new challenges arise such as:

-

(i)

Modeling and management of big geospatial and spatio-temporal data from data-intensive sensors incl. a change from images to videos.

-

(ii)

Representations of buildings, cities, and infrastructures in different space and time scales.

-

(iii)

Modeling and management of full 3D spaces for GeoBIM, i.e. the integration of GIM and BIM.

-

(iv)

Real-time dynamic scenes and moving objects.

-

(v)

Topology in 2D, 3D, 4D, and nD space.

-

(vi)

Ontologies and semantics: Modeling spatial and semantic relations and interactions as well as knowledge about objects and scenes.

-

(vii)

Data quality and uncertainty modeling and maintenance in the whole GI processing chain.

-

(viii)

4As (anytime, anywhere, for anyone, and anything).

-

(ix)

Database support for big geospatial data analysis.

-

(x)

Artificial intelligence (AI) supporting spatial information modeling and management.

Challenge (i) opens “a new chapter of the book” in geoinformatics: Real-time and highly dynamic scenarios will arise in remote sensing and other geo-applications with a high demand to retrieve and to mine knowledge out of the objects and the scene in (near) real-time. New data stream management systems and array databases, respectively, should be used and adapted to the special requirements of spatio-temporal data streams.

The challenges (ii) to (v) indicate that future DBMS will have to be extended to enable the management of multi-scale, full 3D, and topologic objects in spatio-temporal database management systems (STDBMS) supporting discrete and continuous time. Full 3D space representations of objects will be also necessary for GeoBIM to combine the data management for GIM and BIM applications e.g. to integrate 3D geological underground models with 3D city models. Besides geometric data types representing point clouds, lines, surfaces, and solids, also topologic data types representing nodes, edges, meshes, and solids should be provided. This means that not only the x, y, z-coordinates of points, lines, and surfaces of solid objects will be represented and ready for export, but also the internal topology of the objects. Furthermore, the semantic properties of these objects can be attached to their geometries and topologies. The topologic representation will make it possible to distinguish between the interior, the boundary, and the exterior of the objects and to navigate through the topologic representations. Furthermore, topologic concepts are well suited to model the relationships between spatial entities such as neighborhoods and intersections. Also, the number of connected components and the number of their holes can be explicitly determined by topologic concepts such as Betty numbers. Concerning time, GIM will require to model not only discrete time (objects only represented in predefined time steps), but also continuous time to model geographic or geoscientific processes such as volcanic eruptions, landslides, or tsunamis, whereas in BIM discrete time steps will be sufficient e.g. to model the progress of a construction site. 4D data representations (3D space plus time) are mandatory to maintain the dynamics of features in the real world. The history and future of objects should be considered equally in future 3D information modeling and management. Doing so, historical data are helpful to model the future by extrapolating the past and simulating the future (Breunig et al. 2020). How important it will be to extend 3D city modeling for archiving the past and planning the future of cities has been portrayed impressively by Matthys et al. (2021).

Big geospatial information, especially the large amount of unstructured text data on the web, and the fast development of natural language processing methods enable new research directions in geospatial semantics (challenge vi). Six major areas are identified and discussed by Hu (2017, 2018), including semantic interoperability by developing ontologies, digital gazetteers as structured dictionaries for named places, geographic information retrieval, geospatial semantic web, linked data, place semantics, and cognitive geographic concepts. Elicitation approaches will involve a set of processes that aim at extracting latent knowledge from unstructured or semi-structured content. Future research will be dealing with semantic-based extraction, enrichment, search, and analysis of big geospatial data, places, regions, events, trajectories, and topics as well as geospatial concepts and relations (Kokla and Guilbert 2020).

GI research approaches will integrate imprecise geospatial data models (challenge vii) such as fuzzy models and rough sets and multiple representations (Virrauntas et al. 2009). Quality models should be able to propagate errors throughout the whole GI processing chain because data imperfections propagated through spatial analysis affect the decision-making process. Thus, error modeling and quality descriptions will be shifted from location uncertainties of geographic features and phenomena, towards fitness for use data evaluation, to uncertainty in decision making (Bielecka and Burek 2019).

Challenge (viii) refers to mobility: “With the integration of information and communication technologies (ICT), especially mobile ICT in every aspect of our daily lives, the 4As (anytime, anywhere, for anyone and anything) ‘services’ are being developed to benefit our human society and environment. This new generation of 4A technologies brings convenience and improves our quality of life, but also leads to surveillance, privacy, and ethical issues, unknown, and unimagined before” (Huang et al. 2018).

The massive use of geospatial raster data requires efficient database support for big geospatial data analysis (Challenge ix). Therefore, array databases (Baumann 1994) such as rasdaman (Baumann et al. 1998) will allow the provision of analysis-ready data as Data Cubes (Baumann et al. 2018; Baumann, 2018) or Earth Cubes (Mahecha et al. 2020).

Artificial intelligence (AI) methods will be included in DSMS, SDBMS, STDBMS, and array databases (challenge x) to support data analysis. Machine learning will likely influence geospatial information modeling and management in a revolutionary way: Future database platforms should be able to deal with the input, models, and output of machine learning approaches to simplify data preprocessing. Furthermore, AI may support data cleansing to detect and correct errors in big data and time series (Breunig et al. 2020). Examples are the tileless spatial and temporal selection of examination areas and the automatic detection of data interpretation errors such as the unwrapping error in Sentinel-1 SAR data (Mazroob Semnani et al. 2020). As a consequence, future scientists will be able to repeat their experiments as often as they like by just retrieving arbitrary spatial and spatio-temporal data from the STDBMS to check and compare the last runs of their experiments or of other researchers who carried out similar data analysis experiments. Combined data analysis and data management tools will thus increase the reproducibility of data analysis results significantly.

Furthermore, the use of parallel data management architectures (MapReduce-based systems) such as SpatialHadoop® (Eldawy and Mokbel 2015), ST-Hadoop® (Alarabi et al. 2018), and Hadoop-GIS® (Aji et al. 2013) and new developments will support fast query processing. New directions in geospatial data management focusing on the interface between different fields of research such as interfacing data management and visualization, data management and data analysis, etc. have been discussed by Breunig et al. (2020).

5 Geospatial Information Integration

Geospatial information integration faces a set of challenges related to the high variety of available data in the geospatial domain. While it has similarities with general data integration, data augmentation, and data cleaning, it differs a lot as different conceptualizations and resolutions lead to fundamentally different representations of the same thing in reality. This can lead to situations, where spatial data integration is simpler as opposed to general data integration: When two different objects are in a very similar location, they likely have a strong relationship and the existence of the relationship can be inferred just from geometry and topology. However, fully correct and plausible representations of the same object can be significantly different in terms of geometry: do we identify a house with its perimeter or its cadastral polygon or maybe just with the entrance of the property? Does the garage belong to the house or is it a separate object?

5.1 State of the Art

Data, models, information, and knowledge are scattered across different communities and disciplines, causing severe limitations to current geosciences research (Gil et al. 2019). Geospatial data is captured for different purposes, with different sensors, based on different data models. This leads to a huge variability of available data sets. This variety not only concerns the semantic contents of the data but also to data modeling, formats and representation. As data sets often relate to the same physical reality—or the same spatial and temporal extent of the reality—there is a need for data and information integration to fully exploit the richness of available data. Examples are topographic data sets and special branch data, e.g. ATKIS and GDF; GML and BIM formats, e.g. CityGML and IFC; structured data sets and raw data, e.g. CityGML buildings and 3D building surface models from point clouds.

The goal of data integration is to

-

provide a consistent view of a set of datasets from different regimes, schemata, and conceptualizations,

-

allow an integrated analysis of data from different sources and

-

enrich data sets with information from another one.

Data integration is typically tackled on different levels and with different mechanisms:

-

Syntactic integration ensures that comparable data formats are used so that the data can be represented in the same system and e.g. visually overlaid. Such a process can be dealt with using standards (e.g. OGC simple feature specification, CityGML, GeoTIFF).

-

Semantic integration relates to the contents of the data and the meaning and aims at identifying corresponding object categories in both data sets. This process presumes that those correspondences are revealed and made explicit. Often, ontologies are used to describe the meaning of objects. An ontology is a shared conceptualization of objects and relations of a specific knowledge domain (Gruber 1995). This means that it is typically a negotiation process among different stakeholders to define and describe what constitutes an object.

-

Instance-based integration: Given two datasets with different representations of possibly identical objects, the construction of correspondence of objects in terms of relations like “identity” or “is-part-of” can be used to derive a joint object catalog combining information from both datasets. In geospatial data, such correspondences are typically derived using semantic and geometric matching, i.e. taking similar object characteristics and similar form, shape, and location into account.

-

Latent Space Integration: Given a family of independent datasets, learn representations of each of the datasets like a feature transformation (Bengio et al. 2013) and align the resulting feature spaces into a joint space in which the joint geometry captures similarity.

Due to these very different schemes of data integration, the results of the integration are manifold: one option is to derive a consistent, homogeneous object catalog, which integrates and consolidates the properties and attributes of the objects it was derived from. This includes steps such as entity resolution, entity matching, geometric fusion—e.g. deriving an intermediate geometry—and harmonization of the attributes. As all of these steps can be challenging, a fundamentally different approach is to first propagate all the objects from the data sources into a large set, maybe adding the source dataset to the name, or otherwise making possibly identical objects from different datasets to different objects. In this intermediate representation, semantic links between objects are computed via data mining. A good introduction on this topic is given by Getoor and Diehl (2005). These links can be semantic relations such as neighboring, “is-part-of” or “belongs to”. In addition, the “identity” can be mined as a relation “is-same-as”. Furthermore, the certainty of such links can be assigned as a property to the link such that clear relations (e.g., “is-part-of” if the geometric footprints overlap) can be distinguished from weak relations (e.g., “is-part-of” with a weight of 0.5 for two different objects, because the geometry intersects both objects). While this approach avoids errors introduced by conflating two datasets into a new one forcing all information to be merged, it introduces a much more complicated topologic data space as the result of the integration, and it becomes more difficult to implement applications on top of such a data space.

The semantic web aims at making the links and relations between data explicit by defining resource description framework (RDF) triples; the field also provides tooling and query languages for the resulting data spaces. However, it is still an area under development and further research is needed to fully unlock the potential of these approaches, especially under the uncertainty inherent to spatial data objects.

In general, data integration in the spatial community is often a process in which at least input and output datasets have a spatial interpretation. An important exception to this rule is the case of latent space methods in which an artificial space (geometric space, topologic space, or differently structured data space) is generated in which a certain problem (e.g., object detection, change detection) can be solved although the original geospatial attributes are (partly) lost in this step. In many such cases, this loss of spatialness as part of the method is compensated by an additional data integration step with the original data. State-of-the-art computer vision algorithms for object detection, like Yolo, are a good illustration of this principle. In these algorithms, the spatial space (e.g., the image) is decomposed into many small patches which are individually processed. For each of those patches, without the location information in the original image, the system assigns a probability that the patch is part of an object to be detected. Then, these partial results are recombined into a spatial object representation like a bounding box. In this case, spatial information is removed in the splitting phase to small patches, and the spatial nature is reintroduced when combining neighboring patches to bounding boxes after classification in a non-spatial image classification setting.

5.2 Case Studies

5.2.1 Case Study “Data-driven schema matching”

An approach to achieve semantic integration is proposed by Kieler (2020). It is a data-driven approach to determine correspondences between two ontologies and starts from the assumption that objects which share the same geometry and spatial footprint also have something in common on a semantic level (see Fig. 11). An example is a forest, which is called „A4107 (Wald, Forst)“ in ATKIS and “G7120 (Land Cover: Forest (Woodland))” in GDF, and which in both data sets is represented at the same location and with a similar geometric delineation. If there are many examples of such correspondences, then it is fair to infer that “A4107” and “G7120” belong to the same semantic class. Based on this principle, Kieler developed an optimization approach using integer programming to determine the best correspondences between two data sets. The challenges are that typically the correspondences between objects are not unique (i.e. not 1:1 but 1:n or n:m) and there are geometric inaccuracies. In addition, when objects are represented in different scales, the identification of correspondences has to be refined and has to go beyond geometric matching, taking the changes into account that result from generalization.

A survey of approaches to automatic schema matching (Kieler 2020)

5.2.2 Case Study “Data Enrichment Based on Information Fusion”

Crowdsourcing based on ubiquitous sensors has a huge potential for collecting dynamic environmental information. An approach exploiting smartphone sensors mounted on bicycles to determine road roughness is described by Wage and Sester (2021). The approach is based on the intuitive principle, that roughness can be measured using the acceleration sensors of a smartphone. In this way, the roughness of a road segment is observed by different bicycles.