Abstract

The standard model (SM) production of four top quarks (\(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)) in proton–proton collisions is studied by the CMS Collaboration. The data sample, collected during the 2016–2018 data taking of the LHC, corresponds to an integrated luminosity of 137\(\,\text {fb}^{-1}\) at a center-of-mass energy of 13\(\,\text {TeV}\). The events are required to contain two same-sign charged leptons (electrons or muons) or at least three leptons, and jets. The observed and expected significances for the \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) signal are respectively 2.6 and 2.7 standard deviations, and the \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) cross section is measured to be \(12.6^{+5.8}_{-5.2}\,\text {fb} \). The results are used to constrain the Yukawa coupling of the top quark to the Higgs boson, \(y_{\text {t}}\), yielding a limit of \(|y_{\text {t}}/y_{\text {t}}^{\mathrm {SM}} | < 1.7\) at \(95\%\) confidence level, where \(y_{\text {t}}^{\mathrm {SM}}\) is the SM value of \(y_{\text {t}}\). They are also used to constrain the oblique parameter of the Higgs boson in an effective field theory framework, \(\hat{H}<0.12\). Limits are set on the production of a heavy scalar or pseudoscalar boson in Type-II two-Higgs-doublet and simplified dark matter models, with exclusion limits reaching 350–470\(\,\text {GeV}\) and 350–550\(\,\text {GeV}\) for scalar and pseudoscalar bosons, respectively. Upper bounds are also set on couplings of the top quark to new light particles.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

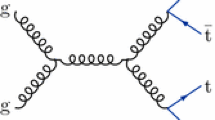

The production of four top quarks (\(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)) is a rare standard model (SM) process, with a predicted cross section of \(\sigma (\hbox {pp} \rightarrow \text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} ) = 12.0^{+2.2}_{-2.5}\,\text {fb} \) in proton–proton (\(\hbox {pp} \)) collisions at a center-of-mass energy of 13\(\,\text {TeV}\), as calculated at next-to-leading-order (NLO) accuracy for both quantum chromodynamics and electroweak interactions [1]. Representative leading-order (LO) Feynman diagrams for SM production of \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) are shown in Fig. 1.

The \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) cross section can be used to constrain the magnitude and CP properties of the Yukawa coupling of the top quark to the Higgs boson [2, 3]. Moreover, \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) production can be significantly enhanced by beyond-the-SM (BSM) particles and interactions. New particles coupled to the top quark, such as heavy scalar and pseudoscalar bosons predicted in Type-II two-Higgs-doublet models (2HDM) [4,5,6] and by simplified models of dark matter (DM) [7, 8], can contribute to \(\sigma (\hbox {pp} \rightarrow \text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)) when their masses are larger than twice the mass of the top quark, with diagrams similar to Fig. 1 (right). Additionally, less massive particles can enhance \(\sigma (\hbox {pp} \rightarrow \text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)) via off-shell contributions [9]. In the model-independent framework of SM effective field theory, four-fermion couplings [10], as well as a modifier to the Higgs boson propagator [11], can be constrained through a measurement of \(\sigma (\hbox {pp} \rightarrow \text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)). Conversely, models with new particles with masses on the order of 1\(\,\text {TeV}\), such as gluino pair production in the framework of supersymmetry [12,13,14,15,16,17,18,19,20,21], are more effectively probed through studies of \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) production in boosted events or by requiring very large imbalances in momentum.

Each top quark primarily decays to a bottom quark and a W boson, and each W boson decays to either leptons or quarks. As a result, the \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) final state contains jets mainly from the hadronization of light (u, d, s, c) quarks (light-flavor jets) and b quarks (b jets), and can also contain isolated charged leptons and missing transverse momentum arising from emitted neutrinos. Final states with either two same-sign leptons or at least three leptons, considering \(\hbox {W} \rightarrow \ell \nu \) (\(\ell = \hbox {e} \) or \(\mu \)) and including leptonic decays of \(\tau \) leptons, correspond to a combined branching fraction of approximately 12% [22]. The relatively low levels of background make these channels the most sensitive to \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) events produced with SM-like kinematic properties [23].

Previous searches for \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) production in 13\(\,\text {TeV}\)\,\(\hbox {pp} \) collisions were performed by the ATLAS [24, 25] and CMS [23, 26, 27] Collaborations. The most sensitive results, based on an integrated luminosity of approximately \(36{\,\text {fb}^{-1}} \) collected by each experiment, led to cross section measurements of \(28.5^{+12}_{-11}\,\text {fb} \) with an observed (expected) significance of 2.8 (1.0) standard deviations by ATLAS [25], and \(13^{+11}_{-9}\,\text {fb} \) with an observed (expected) significance of 1.4 (1.1) standard deviations by CMS [23], both consistent with the SM prediction.

The analysis described in this paper improves upon the CMS search presented in Ref. [27], and supersedes the results, by taking advantage of upgrades to the CMS detector and by optimizing the definitions of the signal regions for the integrated luminosity of 137\(\,\text {fb}^{-1}\). The reference cross section for SM \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \), \(12.0^{+2.2}_{-2.5}\,\text {fb} \), used to determine the expected statistical significance of the search, as well as in interpretations for which SM \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) is a background, includes NLO electroweak effects, in contrast to the \(9.2^{+2.9}_{-2.4}\,\text {fb} \) [28] used in the previous search. In addition to the analysis strategy used in the previous search, a new multivariate classifier is defined to maximize the sensitivity to the SM \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) signal.

2 Background and signal simulation

Monte Carlo (MC) simulated samples at NLO are used to evaluate the signal acceptance for the SM \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) process and to estimate the backgrounds from diboson (\(\hbox {WZ} \), \(\hbox {ZZ} \), \(\hbox {Z} \upgamma \), \(\hbox {W} ^{\pm }\hbox {W} ^{\pm }\)) and triboson (\(\hbox {WWW} \), \(\hbox {WWZ} \), \(\hbox {WZZ} \), \(\hbox {ZZZ} \), \(\hbox {WW} \upgamma \), \(\hbox {WZ} \upgamma \)) processes. Simulated samples generated at NLO are also used to estimate backgrounds from associated production of single top quarks and vector bosons (\(\text {tWZ} \), \(\text {tZq} \), \(\text {t} \upgamma \)), or \(\text {t} {}{\overline{\text {t}}} \) produced in association with a single boson (\(\text {t} {}{\overline{\text {t}}} \hbox {W} , \text {t} {}{\overline{\text {t}}} \hbox {Z} , \text {t} {}{\overline{\text {t}}} \text {H} , \text {t} {}{\overline{\text {t}}} \upgamma \)). Three separate sets of simulated events for each process are used in order to match the different data-taking conditions and algorithms used in 2016, 2017, and 2018. Most samples are generated using the MadGraph 5_amc@nlo 2.2.2 (2.4.2) program [28] at NLO for 2016 samples (2017 and 2018 samples) with at most two additional partons in the matrix element calculations. In particular, the \(\text {t} {}{\overline{\text {t}}} \hbox {W} \) sample is generated with up to one additional parton, and \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \) and \(\text {t} {}{\overline{\text {t}}} \text {H} \) with no additional partons. The \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \) sample, which includes \(\text {t} {}{\overline{\text {t}}} \hbox {Z} /\gamma ^{*}\rightarrow \ell \ell \), is generated with a dilepton invariant mass greater than \(1\,\text {GeV} \). For the \(\hbox {WZ} \) sample used with 2016 conditions, as well as all \(\hbox {ZZ} \) and \(\text {t} {}{\overline{\text {t}}} \text {H} \) samples, the powheg box v2 [29, 30] program is used. The MadGraph 5_amc@nlo generator at LO with up to three additional partons, scaled to NLO cross sections, is used to produce a subset of samples for some of the data taking periods: \(\hbox {W} \upgamma \) (2016), \(\text {t} {}{\overline{\text {t}}} \upgamma \) (2017 and 2018), \(\text {tZq} \) (2018), and \(\text {t} \upgamma \) (2018) [28]. Other rare backgrounds, such as \(\text {t} {}{\overline{\text {t}}} \) production in association with dibosons (\(\text {t} {}{\overline{\text {t}}} \hbox {WW} \), \(\text {t} {}{\overline{\text {t}}} \hbox {WZ} \), \(\text {t} {}{\overline{\text {t}}} \hbox {ZZ} \), \(\text {t} {}{\overline{\text {t}}} \hbox {WH} \), \(\text {t} {}{\overline{\text {t}}} \hbox {ZW} \), \(\text {t} {}{\overline{\text {t}}} \text {HH} \)) and triple top quark production (\(\text {t} {}{\overline{\text {t}}} \text {t} \), \(\text {t} {}{\overline{\text {t}}} \text {tW} \)), are generated using LO MadGraph 5_amc@nlo without additional partons, and scaled to NLO cross sections [31]. The background from radiative top decays, with \(\gamma ^{*}\rightarrow \ell \ell \), was found to be negligible in this analysis.

The top quark associated production modes for a heavy scalar (H) or pseudoscalar (A) in the mass range of [350, 650]\(\,\text {GeV}\), \(\text {t} {}{\overline{\text {t}}} \text {H}/\text {A} \), \(\text {tqH}/\text {A} \), and \(\text {tWH}/\text {A} \), with subsequent decays of \(\text {H}/\text {A} \) into a pair of top quarks, are generated using LO MadGraph 5_amc@nlo, with one additional parton for all but the \(\text {tqH}/\text {A} \) production mode. In the context of type-II 2HDM, these samples are scaled to LO cross sections obtained with MadGraph 5_amc@nlo model, “2HDMtII” [32, 33]. For the choice \(\tan \beta = 1\) in the alignment limit [34], where \(\tan \beta \) represents the ratio of vacuum expectation values of the two Higgs doublets, these cross sections reproduce those of Ref. [6], which were also used in the previous CMS result [27]. In the context of simplified models of dark matter, these samples are scaled to LO cross sections obtained with the model used in Ref. [35], which includes kinematically accessible decays of the mediator into a pair of top quarks. The processes are simulated in the narrow-width approximation, suitable for the parameter space studied here, in which the width of the mediator is 5% of its mass or less. Samples and cross sections used for constraining the modified Higgs boson propagator are generated using MadGraph 5_amc@nlo at LO, matching the prescription of Ref. [11]. Cross sections used for SM \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) enhanced by scalar and vector off-shell diagrams are obtained at LO from Ref. [9].

The NNPDF3.0LO (NNPDF3.0NLO) [36] parton distribution functions (PDFs) are used to generate all LO (NLO) 2016 samples, while NNPDF3.1 next-to-next-to-leading order [37] is used for 2017 and 2018 samples. Parton showering and hadronization, as well as \(\hbox {W} ^{\pm }\hbox {W} ^{\pm }\) production from double-parton scattering, are modeled by the pythia 8.205 [38] program for 2016 samples and pythia 8.230 [39] for 2017 and 2018 samples, while the MLM [40] and FxFx [41] prescriptions are employed in matching additional partons from the matrix element calculations to those from parton showers for the LO and NLO samples, respectively. The underlying event modeling uses the CUETP8M1 tune [42, 43] for 2016, and CP5 [44] for 2017 and 2018 data sets, respectively. The top quark mass in the Monte Carlo programs is set to \(172.5\,\text {GeV} \). The Geant4 package [45] is used to model the response of the CMS detector. Additional \(\hbox {pp} \) interactions (pileup) within the same or nearby bunch crossings are also included in the simulated events.

3 The CMS detector and event reconstruction

The central feature of the CMS detector is a superconducting solenoid of 6\(\,\text {m}\) internal diameter, providing a magnetic field of 3.8\(\,\text {T}\). Within the solenoid volume are a silicon pixel and strip tracker, a lead tungstate crystal electromagnetic calorimeter (ECAL), and a brass and scintillator hadron calorimeter (HCAL), each composed of a barrel and two endcap sections. Forward calorimeters extend the pseudorapidity (\(\eta \)) coverage provided by the barrel and endcap detectors. Muons are detected in gas-ionization chambers embedded in the steel flux-return yoke outside the solenoid. A more detailed description of the CMS detector, together with a definition of the coordinate system used and the relevant variables, can be found in Ref. [46].

Events of interest are selected using a two-tiered trigger system [47]. The first level, composed of custom hardware processors, uses information from the calorimeters and muon detectors to select events at a rate of around 100\(\,\text {kHz}\) within a time interval of less than 4 \(\upmu \)s. The second level, known as the high-level trigger, consists of a farm of processors running a version of the full event reconstruction software optimized for fast processing, and reduces the event rate to around 1\(\,\text {kHz}\) before data storage.

The reconstructed vertex with the largest value of summed physics-object squared-transverse-momentum is taken to be the primary \(\hbox {pp} \) interaction vertex. The physics objects are the jets, clustered using the jet finding algorithm [48, 49] with the tracks assigned to the vertex as inputs, and the associated missing transverse momentum, taken as the negative vector sum of the transverse momentum (\(p_{\mathrm {T}}\)) of those jets.

The particle-flow algorithm [50] aims to reconstruct and identify each individual particle in an event, with an optimized combination of information from the various elements of the CMS detector. The energy of photons is directly obtained from the ECAL measurement. The energy of electrons is determined from a combination of the electron momentum at the primary interaction vertex as determined by the tracker, the energy of the corresponding ECAL cluster, and the energy sum of all bremsstrahlung photons spatially compatible with the electron track [51]. The momentum of muons is obtained from the curvature of the corresponding track, combining information from the silicon tracker and the muon system [52]. The energy of charged hadrons is determined from a combination of their momentum measured in the tracker and the matching ECAL and HCAL energy deposits, corrected for the response function of the calorimeters to hadronic showers. The energy of neutral hadrons is obtained from the corresponding corrected ECAL and HCAL energies.

Hadronic jets are clustered from neutral PF candidates and charged PF candidates associated with the primary vertex, using the anti-\(k_{\mathrm {T}}\) algorithm [48, 49] with a distance parameter of 0.4. The jet momentum is determined as the vectorial sum of all PF candidate momenta in the jet. An offset correction is applied to jet energies to take into account the contribution from pileup [53]. Jet energy corrections are derived from simulation and are improved with in situ measurements of the energy balance in dijet, multijet, \(\gamma \)+jet, and leptonically decaying Z+jet events [54, 55]. Additional selection criteria are applied to each jet to remove jets potentially affected by instrumental effects or reconstruction failures [56]. Jets originating from b quarks are identified as b-tagged jets using a deep neural network algorithm, DeepCSV [57], with a working point chosen such that the efficiency to identify a b jet is 55–70% for a jet \(p_{\mathrm {T}}\) between 20 and 400\(\,\text {GeV}\). The misidentification rate is approximately 1–2% for light-flavor and gluon jets and 10–15% for charm jets, in the same jet \(p_{\mathrm {T}}\) range. The vector \(\vec {p}_{\mathrm {T}}^{\text {miss}}\) is defined as the projection on the plane perpendicular to the beams of the negative vector sum of the momenta of all reconstructed PF candidates in an event [58]. Its magnitude, called missing transverse momentum, is referred to as \(p_{\mathrm {T}} ^\text {miss}\).

4 Event selection and search strategy

The identification, isolation, and impact parameter requirement with respect to the primary vertex, imposed on electrons and muons are the same as those of Ref. [27] when analyzing the 2016 data set, while for the 2017 and 2018 data sets the identification of electrons and the isolation of both electrons and muons are modified to take into account the increased pileup. For electrons, identification is based on a multivariate discriminant using shower shape and track quality variables, while muon identification is based on the quality of the geometrical matching between measurements in the tracker and the muon system. The isolation requirement, introduced in Ref. [59], is designed to distinguish the charged leptons produced in W and Z decays (“prompt leptons”) from the leptons produced in hadron decays or in conversions of photons in jets, as well as hadrons misidentified as leptons (collectively defined as “nonprompt leptons”). The requirements to minimize charge misassignment are the same as in Ref. [27]: muon tracks are required to have a small uncertainty in \(p_{\mathrm {T}}\) and electron tracks are required to have the same charge as that obtained from comparing a linear projection of the pixel detector hits to the position of the calorimeter deposit. The combined efficiency to reconstruct and identify leptons is in the range of 45–80 (70–90)% for electrons (muons), increasing as a function of \(p_{\mathrm {T}}\) and reaching the maximum value for \(p_{\mathrm {T}} >60\,\text {GeV} \).

For the purpose of counting leptons and jets, the following requirements are applied: the number of leptons (\(N_\ell \)) is defined to be the multiplicity of electrons and muons with \(p_{\mathrm {T}} > 20\,\text {GeV} \) and either \(|\eta | < 2.5\) (electrons) or \(|\eta | < 2.4\) (muons), the number of jets (\(N_\text {jets}\)) counts all jets with \(p_{\mathrm {T}} > 40\,\text {GeV} \) and \(|\eta | < 2.4\), and the number of b-tagged jets (\(N_\text {b} \)) counts b-tagged jets with \(p_{\mathrm {T}} > 25\,\text {GeV} \) and \(|\eta | < 2.4\). In order to be included in \(N_\text {jets}\), \(N_\text {b} \), and the \(H_{\mathrm {T}}\) variable, which is defined as the scalar \(p_{\mathrm {T}}\) sum of all jets in an event, jets and b-tagged jets must have an angular separation \(\varDelta R > 0.4\) with respect to all selected leptons. This angular separation is defined as \(\varDelta R = \sqrt{\smash [b]{(\varDelta \eta )^2+(\varDelta \phi )^2}}\), where \(\varDelta \eta \) and \(\varDelta \phi \) are the differences in pseudorapidity and azimuthal angle, respectively, between the directions of the lepton and the jet.

Events were recorded using either a dilepton\(+\) \(H_{\mathrm {T}}\) (2016) or a set of dilepton triggers (2017 and 2018). The dilepton\(+\) \(H_{\mathrm {T}}\) trigger requires two leptons with \(p_{\mathrm {T}} > 8\,\text {GeV} \) and a minimum \(H_{\mathrm {T}} \) requirement that is fully efficient with respect to the offline requirement of \(300\,\text {GeV} \). The dilepton triggers require either two muons with \(p_{\mathrm {T}} > 17\) and \(8\,\text {GeV} \), two electrons with \(p_{\mathrm {T}} > 23\) and \(12\,\text {GeV} \), or an \(\hbox {e} {\upmu } \) pair with \(p_{\mathrm {T}} > 23\,\text {GeV} \) for the higher-\(p_{\mathrm {T}} \) (leading) lepton and \(p_{\mathrm {T}} > 12\ (8)\,\text {GeV} \) for the lower-\(p_{\mathrm {T}} \) (trailing) electron (muon). The trigger efficiency within the detector acceptance is measured in data to be greater than \(90\%\) for \(\hbox {ee} \), \(\hbox {e} {\upmu } \), and \({\upmu } {\upmu } \) events, and nearly \(100\%\) for events with at least three leptons.

We define a baseline selection that requires \(H_{\mathrm {T}} > 300\,\text {GeV} \) and \(p_{\mathrm {T}} ^\text {miss} >50\,\text {GeV} \), two or more jets (\(N_\text {jets} \ge 2\)) and b-tagged jets (\(N_\text {b} \ge 2\)), a leading lepton with \(p_{\mathrm {T}} > 25\,\text {GeV} \), and a trailing lepton of the same charge with \(p_{\mathrm {T}} > 20\,\text {GeV} \). Events with same-sign electron pairs with an invariant mass below 12\(\,\text {GeV}\) are rejected to reduce the background from production of low-mass resonances with a charge-misidentified electron. Events where a third lepton with \(p_{\mathrm {T}} >7\) (5)\(\,\text {GeV}\) for electrons (muons) forms an opposite-sign (OS) same-flavor pair with an invariant mass below 12\(\,\text {GeV}\) or between 76 and 106\(\,\text {GeV}\) are also rejected. Inverting this resonance veto, the latter events are used to populate a \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \) background control region (CRZ) if the invariant mass is between 76 and 106\(\,\text {GeV}\) and the third lepton has \(p_{\mathrm {T}} > 20\,\text {GeV} \). After this baseline selection, the signal acceptance is approximately 1.5%, including branching fractions.

Events passing the baseline selection are split into several signal and control regions, following two independent approaches. In the first analysis, similarly to Ref. [27] and referred to as “cut-based”, the variables \(N_\text {jets}\), \(N_\text {b} \), and \(N_\ell \) are used to subdivide events into 14 mutually exclusive signal regions (SRs) and a control region (CR) enriched in \(\text {t} {}{\overline{\text {t}}} \hbox {W} \) background (CRW), to complement the CRZ defined above, as detailed in Table 1. In the boosted decision tree (BDT) analysis, the CRZ is the only control region, and the remaining events are subdivided into 17 SRs by discretizing the discriminant output of a BDT trained to separate \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) events from the sum of the SM backgrounds.

The BDT classifier utilizes a gradient boosting algorithm to train 500 trees with a depth of 4 using simulation, and is based on the following 19 variables: \(N_\text {jets}\), \(N_\text {b} \), \(N_\ell \), \(p_{\mathrm {T}} ^\text {miss}\), \(H_{\mathrm {T}}\), two alternative definitions of \(N_\text {b} \) based on b tagging working points tighter or looser than the default one, the scalar \(p_{\mathrm {T}}\) sum of b-tagged jets, the \(p_{\mathrm {T}}\) of the three leading leptons, of the leading jet and of the sixth, seventh, and eighth jets, the azimuthal angle between the two leading leptons, the invariant mass formed by the leading lepton and the leading jet, the charge of the leading lepton, and the highest ratio of the jet mass to the jet \(p_{\mathrm {T}}\) in the event (to provide sensitivity to boosted, hadronically-decaying top quarks and W bosons). Three of the most performant input variables, \(N_\text {jets}\), \(N_\text {b} \), and \(N_\ell \), correspond to the variables used for the cut-based analysis. Top quark tagging algorithms to identify hadronically decaying top quarks based on invariant masses of jet combinations, similarly to Ref. [23], were also tested, but did not improve the expected sensitivity. Such algorithms could only contribute in the handful of events where all the top quark decay products were found, and these events already have very small background yields. In each analysis, the observed and predicted yields in the CRs and SRs are used in a maximum likelihood fit with nuisance parameters to measure \(\sigma (\hbox {pp} \rightarrow \text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)), following the procedure described in Sect. 7.

5 Backgrounds

In addition to the \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) signal, several other SM processes result in final states with same-sign dileptons or at least three leptons, and several jets and b jets. These backgrounds primarily consist of processes where \(\text {t} {}{\overline{\text {t}}} \) is produced in association with additional bosons that decay to leptons, such as \(\text {t} {}{\overline{\text {t}}} \hbox {W} \), \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \), and \(\text {t} {}{\overline{\text {t}}} \text {H} \) (mainly in the \(\text {H} \rightarrow \hbox {WW} \) channel), as well as dilepton \(\text {t} {}{\overline{\text {t}}} \) events with a charge-misidentified prompt-lepton and single-lepton \(\text {t} {}{\overline{\text {t}}} \) events with an additional nonprompt lepton.

The prompt-lepton backgrounds, dominated by \(\text {t} {}{\overline{\text {t}}} \hbox {W} \), \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \), and \(\text {t} {}{\overline{\text {t}}} \text {H} \), are estimated using simulated events. Dedicated CRs are used to constrain the normalization for \(\text {t} {}{\overline{\text {t}}} \hbox {W} \) (cut-based analysis) and \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \) (cut-based and BDT analyses), while for other processes described in the next paragraph, the normalization is based on the NLO cross sections referenced in Sect. 2.

Processes with minor contributions are grouped into three categories. The “\(\text {t} {}{\overline{\text {t}}} \hbox {VV} \)” category includes the associated production of \(\text {t} {}{\overline{\text {t}}} \) with a pair of bosons (\(\hbox {W} \), \(\hbox {Z} \), \(\text {H} \)), dominated by \(\text {t} {}{\overline{\text {t}}} \hbox {WW} \). The “X\(\upgamma \) ” category includes processes where a photon accompanies a vector boson, a top quark, or a top-antitop quark pair. The photon undergoes a conversion, resulting in the identification of an electron in the final state. The category is dominated by \(\text {t} {}{\overline{\text {t}}} \upgamma \), with smaller contributions from \(\hbox {W} \upgamma \), \(\hbox {Z} \upgamma \), and \(\text {t} \upgamma \). Finally, the “Rare” category includes all residual processes with top quarks (\(\text {tZq} \), \(\text {tWZ} \), \(\text {t} {}{\overline{\text {t}}} \text {t} \), and \(\text {t} {}{\overline{\text {t}}} \text {tW} \)) or without them (\(\hbox {WZ} \), \(\hbox {ZZ} \), \(\hbox {W} ^{\pm }\hbox {W} ^{\pm }\) from single- and double-parton scattering, and triboson production).

Since the \(\text {t} {}{\overline{\text {t}}} \hbox {W} \), \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \), and \(\text {t} {}{\overline{\text {t}}} \text {H} \) processes constitute the largest backgrounds to \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) production, their simulated samples are corrected wherever possible to account for discrepancies observed between data and MC simulation. To improve the MC modeling of the additional jet multiplicity from initial-state radiation (ISR) and final-state radiation (FSR), simulated \(\text {t} {}{\overline{\text {t}}} \hbox {W} \) and \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \) events are reweighted based on the number of ISR or FSR jets (\(N_{\text {jets}}^{\mathrm {ISR/FSR}}\)). The reweighting is based on a comparison of the light-flavor jet multiplicity in dilepton \(\text {t} {}{\overline{\text {t}}} \) events in data and simulation, where the simulation is performed with the same generator settings as those of the \(\text {t} {}{\overline{\text {t}}} \hbox {W} \) and \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \) samples. The method requires exactly two jets identified as originating from b quarks in the event and assumes that all other jets are from ISR or FSR. The \(N_{\text {jets}}^{\mathrm {ISR/FSR}}\) reweighting factors vary within the range of [0.77, 1.46] for \(N_{\text {jets}}^{\mathrm {ISR/FSR}}\) between 1 and 4. This correction is not applied to \(\text {t} {}{\overline{\text {t}}} \text {H} \) (\(\text {H} \rightarrow \hbox {WW} \)) events, which already have additional jets from the decay of the additional \(\hbox {W} \) bosons. In addition to the ISR or FSR correction, the \(\text {t} {}{\overline{\text {t}}} \hbox {W} \), \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \), and \(\text {t} {}{\overline{\text {t}}} \text {H} \) simulation is corrected to improve the modeling of the flavor of additional jets, based on the measured ratio of the \(\text {t} {}{\overline{\text {t}}} \,\hbox {b}{\bar{\text {b}}}\) and \(\text {t} {}{\overline{\text {t}}} \,\text {jj}\) cross sections, \(1.7\pm 0.6\) , reported in Ref. [60], where j represents a generic jet. This correction results in a 70% increase of events produced in association with a pair of additional b jets. Other topologies, such as those including c quarks, are negligible by comparison, and no dedicated correction is performed.

The nonprompt lepton backgrounds are estimated using the “tight-to-loose” ratio method [59]. The tight identification (for electrons) and isolation (for both electrons and muons) requirements of the SRs are relaxed to define a loose lepton selection, enriched in nonprompt leptons. The efficiency, \(\epsilon _{\mathrm {TL}}\), for nonprompt leptons that satisfy the loose selection to also satisfy the tight selection is measured in a control sample of single-lepton events, as a function of lepton flavor, \(p_{\mathrm {T}}\), and \(|\eta |\), after subtracting the prompt-lepton contamination based on simulation. The loose selection is chosen to ensure that \(\epsilon _{\mathrm {TL}}\) remains stable across the main categories of nonprompt leptons specified in Sect. 4, allowing the same \(\epsilon _{\mathrm {TL}}\) to be applied to samples with different nonprompt lepton composition. For leptons failing the tight selection, the \(p_{\mathrm {T}}\) variable is redefined as the sum of the lepton \(p_{\mathrm {T}}\) and the energy in the isolation cone exceeding the isolation threshold value. This parametrization accounts for the momentum spectrum of the parent parton (the parton that produced the nonprompt lepton), allowing the same \(\epsilon _{\mathrm {TL}}\) to be applied to samples with different parent parton momenta with reduced bias. To estimate the number of nonprompt leptons in each SR, a dedicated set of application regions is defined, requiring at least one lepton to fail the tight selection while satisfying the loose one (loose-not-tight). Events in these regions are then weighted by a factor of \(\epsilon _{\mathrm {TL}} / (1-\epsilon _{\mathrm {TL}})\) for each loose-not-tight lepton. To avoid double counting the contribution of events with multiple nonprompt leptons, events with two loose-not-tight leptons are subtracted, and the resulting total weight is used as a prediction of the nonprompt lepton yield.

The background resulting from charge-misidentified leptons is estimated using the charge-misidentification probability measured in simulation as a function of electron \(p_{\mathrm {T}}\) and \(|\eta |\). This probability ranges between \(10^{-5}\) and \(10^{-3}\) for electrons and is at least an order of magnitude smaller for muons. Charge-misidentified muons are therefore considered negligible, while for electrons this probability is applied to a CR of OS dilepton events defined for each same-sign dilepton SR. A single correction factor, inclusive in \(p_{\mathrm {T}}\) and \(|\eta |\), is applied to the resulting estimate to account for differences between data and simulation in this probability. A correction factor, derived from a control sample enriched in \(\hbox {Z} \rightarrow {{\hbox {e}}^{+}} {{\hbox {e}}^{-}} \) events with one electron or positron having a misidentified charge, is very close to unity for the 2016 simulation, while it is approximately 1.4 for the 2017 and 2018 simulation. Even with the larger correction factors, the charge-misidentification probability is smaller in 2017 and 2018 than in 2016, due to the upgraded pixel detector [61].

6 Uncertainties

Several sources of experimental and theoretical uncertainty related to signal and background processes are considered in this analysis. They are summarized, along with their estimated correlation treatment across the 2016, 2017, and 2018 data sets, in Table 2. Most sources of uncertainties affect simulated samples, while the backgrounds obtained using control samples in data (charge-misidentified and nonprompt leptons) have individual uncertainties described at the end of this section.

The uncertainties in the integrated luminosity are 2.5, 2.3, and 2.5% for the 2016, 2017, and 2018 data collection periods, respectively [62,63,64]. Simulated events are reweighted to match the distribution of the number of pileup collisions per event in data. This distribution is derived from the instantaneous luminosity and the inelastic cross section [65], and uncertainties in the latter are propagated to the final yields, resulting in yield variations of at most 5%.

The efficiency of the trigger requirements is measured in an independent data sample selected using single-lepton triggers, with an uncertainty of 2%. The lepton reconstruction and identification efficiency is measured using a data sample enriched in \(\hbox {Z} \rightarrow \ell \ell \) events [51, 52], with uncertainties of up to 5 (3)% per electron (muon). The tagging efficiencies for b jets and light-flavor jets are measured in dedicated data samples [57], and their uncertainties result in variations between 1 and 15% of the signal region yields. In all cases, simulated events are reweighted to match the efficiencies measured in data. The uncertainty associated with jet energy corrections results in yield variations of 1–15% across SRs. Uncertainties in the jet energy resolution result in 1–10% variations [54].

As discussed in Sect. 5, we correct the distribution of the number of additional jets in \(\text {t} {}{\overline{\text {t}}} \hbox {W} \) and \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \) samples, with reweighting factors varying within the range of [0.77, 1.46]. We take one half of the differences from unity as the systematic uncertainties in these factors, since they are measured in a \(\text {t} {}{\overline{\text {t}}} \) sample, but are applied to different processes. These uncertainties result in yield variations up to 8% across SRs. Similarly, events with additional b quarks in \(\text {t} {}{\overline{\text {t}}} \hbox {W} \), \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \), and \(\text {t} {}{\overline{\text {t}}} \text {H} \) are scaled by a factor of \(1.7\pm 0.6\), based on the CMS measurement of the ratio of cross sections \(\sigma ({\text {t} {}{\overline{\text {t}}} \,\hbox {b}{\bar{\text {b}}}})/\sigma ({\text {t} {}{\overline{\text {t}}} \,\mathrm {jj}})\) [60]. The resulting uncertainty in the yields for SRs with \(N_\text {b} \ge 4\), where the effect is dominant, is up to 15%.

For background processes, uncertainties in the normalization (number of events passing the baseline selection) and shape (distribution of events across SRs) are considered, while for signal processes, the normalization is unconstrained, and instead, we consider the uncertainty in the acceptance (fraction of events passing the baseline selection) and shape. For each of the Rare, X\(\upgamma \), and \(\text {t} {}{\overline{\text {t}}} \hbox {VV} \) categories, normalization uncertainties are taken from the largest theoretical cross section uncertainty in any constituent physics process, resulting in uncertainties of 20%, 11%, and 11%, respectively. For the \(\text {t} {}{\overline{\text {t}}} \hbox {W} \) and \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \) processes, we set an initial normalization uncertainty of 40%, but then allow the maximum-likelihood fit to constrain these backgrounds further using control samples in data. For \(\text {t} {}{\overline{\text {t}}} \text {H} \), we assign a 25% normalization uncertainty to reflect the signal strength, which is the ratio between the measured cross section of \(\text {t} {}{\overline{\text {t}}} \text {H} \) and its SM expectation, of \(1.26^{+0.31}_{-0.26}\) measured by CMS [66].

The shape uncertainty resulting from variations of the renormalization and factorization scales in the event generators is smaller than 15% for backgrounds, and 10% for the \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) and 2HDM signals, while the effect of the PDFs is only 1%. For the \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) and 2HDM signals, the uncertainty in the acceptance from variations of the scales is 2%. The uncertainty in the scales that determine ISR and FSR, derived from \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) samples, results in up to 6 and 10% uncertainties in signal acceptance and shape, respectively. When considering \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) as a background in BSM interpretations, a cross section uncertainty of 20% (based on the prediction of \(12.0^{+2.2}_{-2.5}\,\text {fb} \) [1]) is additionally applied to the \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) process.

The charge-misidentified and nonprompt-lepton backgrounds are assigned an uncertainty of 20 and 30%, respectively, where the latter is increased to 60% for nonprompt electrons with \(p_{\mathrm {T}} > 50\,\text {GeV} \). For the charge-misidentified lepton background, the uncertainty is based on the agreement observed between the prediction and data as a function of kinematic distributions, in a data sample enriched in \(\hbox {Z} \rightarrow {{\hbox {e}}^{+}} {{\hbox {e}}^{-}} \) events with one electron or positron having a misidentified charge. For the nonprompt-lepton background, the uncertainty is based on the agreement observed in simulation closure tests of the “tight-to-loose” method using multijet, \(\text {t} {}{\overline{\text {t}}} \), and W \(+\!\) jets samples. The contamination of prompt leptons, which is subtracted based on simulation, is below 1% in the application region, but it can be significant in the control sample where \(\epsilon _{\mathrm {TL}}\) is measured, resulting in an uncertainty up to 50% in \(\epsilon _{\mathrm {TL}}\). The statistical uncertainty in the estimate based on control samples in data is taken into account for both backgrounds. It is negligible for the charge-misidentified lepton background, while for the nonprompt-lepton background it can be comparable or larger than the systematic uncertainty.

Experimental uncertainties in normalization and shape are treated as fully correlated among the SRs for all signal and background processes. Two choices of correlation across years (uncorrelated or fully correlated) were tested for each experimental uncertainty, and their impact on the measurement of \(\sigma (\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} )\) was found to be smaller than 1%. For simplicity, these uncertainties are then treated as uncorrelated. Systematic uncertainties in the background estimates based on control samples in data and theoretical uncertainties in the normalization of each background process are treated as uncorrelated between processes but fully correlated among the SRs and across the 3 years. Scale and PDF uncertainties, as well as uncertainties in the number of additional b quarks, are correlated between processes, signal regions, and years. Statistical uncertainties due to the finite number of simulated events or control region events are considered uncorrelated.

7 Results

Distributions of the main kinematic variables (\(N_\text {jets}\), \(N_\text {b} \), \(H_{\mathrm {T}}\), and \(p_{\mathrm {T}} ^\text {miss}\)) for events in the baseline region, as defined in Sect. 4, are shown in Fig. 2 and compared to the SM background predictions. The \(N_\text {jets}\) and \(N_\text {b} \) distributions for the CRW and CRZ are shown in Fig. 3. The expected SM \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) signal, normalized to its predicted cross section, is shown in both figures. The SM predictions are statistically consistent with the observations.

Distributions of \(N_\text {jets}\) (upper left), \(N_\text {b} \) (upper right), \(H_{\mathrm {T}}\) (lower left), and \(p_{\mathrm {T}} ^\text {miss}\) (lower right) in the summed SRs (1–14), before fitting to data, where the last bins include the overflows. The hatched areas represent the total uncertainties in the SM signal and background\,predictions. The \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) signal assumes the SM cross section from Ref. [1]. The lower panels show the ratios of the observed event yield to the total prediction of signal plus background

Distributions of \(N_\text {jets}\) (left) and \(N_\text {b} \) (right) in the \(\text {t} {}{\overline{\text {t}}} \hbox {W} \) (upper) and \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \) (lower) CRs, before fitting to data. The hatched areas represent the uncertainties in the SM signal and background predictions. The \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) signal assumes the SM cross section from Ref. [1]. The lower panels show the ratios of the observed event yield to the total prediction of signal plus background

A binned likelihood is constructed using the yields from the signal regions, the CRZ, as well as the CRW for the cut-based analysis only, incorporating the experimental and theoretical uncertainties described in Sect. 6 as “nuisance” parameters. The measured cross section for \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) and the significance of the observation relative to the background-only hypothesis are obtained from a profile maximum-likelihood fit, in which the parameter of interest is \(\sigma (\hbox {pp} \rightarrow \text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)) and all nuisance parameters are profiled, following the procedures described in Refs. [22, 67]. In addition, an upper limit at 95% confidence level (\(\text {CL}\)) is set on \(\sigma (\hbox {pp} \rightarrow \text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)) using the modified frequentist \(\text {CL}_\text {s}\) criterion [68, 69], with the profile likelihood ratio test statistic and asymptotic approximation [70]. We verified the consistency between the asymptotic and fully toy-based methods. Alternatively, by considering the SM, including the \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) process with the SM cross section and uncertainty [1], as the null hypothesis, the fit provides cross section upper limits on BSM processes with new scalar and pseudoscalar particles, as discussed in Sect. 8.

The values and uncertainties of most nuisance parameters are unchanged by the fit, but the ones significantly affected include those corresponding to the \(\text {t} {}{\overline{\text {t}}} \hbox {W} \) and \(\text {t} {}{\overline{\text {t}}} \hbox {Z} \) normalizations, which are both scaled by \(1.3\pm 0.2\) by the fit, in agreement with the ATLAS and CMS measurements of these processes [71,72,73]. The predicted yields after the maximum-likelihood fit (post-fit) are compared to data in Fig. 4 for the cut-based (upper) and BDT (lower) analyses, where the fitted \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) signal contribution is added to the background predictions. The corresponding yields are shown in Tables 3 and 4 for the cut-based and BDT analysis, respectively.

The \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) cross section and the 68% \(\text {CL}\) interval is measured to be \(9.4^{+6.2}_{-5.6}\,\text {fb} \) in the cut-based analysis, and \(12.6^{+5.8}_{-5.2}\,\text {fb} \) in the BDT analysis. Relative to the background-only hypothesis, the observed and expected significances are 1.7 and 2.5 standard deviations, respectively, for the cut-based analysis, and 2.6 and 2.7 standard deviations for the BDT analysis. The observed 95% \(\text {CL}\) upper limits on the cross section are \(20.0\,\text {fb} \) in the cut-based and \(22.5\,\text {fb} \) in the BDT analyses. The corresponding expected upper limits on the \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) cross section, assuming no SM \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) contribution to the data, are \(9.4^{+4.3}_{-2.9}\,\text {fb} \) (cut-based) and \(8.5^{+3.9}_{-2.6}\,\text {fb} \) (BDT), a significant improvement relative to the value of \(20.8^{+11.2}_{-6.9}\,\text {fb} \) of Ref. [27]. The BDT and cut-based observed results were found to be statistically compatible by using correlated toy pseudo-data sets. We consider the BDT analysis as the primary result of this paper, as it provides a higher expected measurement precision, and use the results from it for further interpretations in the following section.

Observed yields in the control and signal regions for the cut-based (upper) and BDT (lower) analyses, compared to the post-fit predictions for signal and background processes. The hatched areas represent the total post-fit uncertainties in the signal and background predictions. The lower panels show the ratios of the observed event yield to the total prediction of signal plus background

8 Interpretations

This analysis is used to constrain SM parameters, as well as production of BSM particles and operators that can affect the \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) production rate. The existence of \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) Feynman diagrams with virtual Higgs bosons allows interpreting the upper limit on \(\sigma (\hbox {pp} \rightarrow \text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)) as a constraint on the Yukawa coupling, \(y_{\text {t}}\), between the top quark and the Higgs boson [2, 3]. Similarly, the measurement can be interpreted as a constraint on the Higgs boson oblique parameter \(\hat{H}\), defined as the Wilson coefficient of the dimension-six BSM operator modifying the Higgs boson propagator [11]. More generically, Feynman diagrams where the virtual Higgs boson is replaced by a virtual BSM scalar (\(\phi \)) or vector (\({\hbox {Z}}^{\prime } \)) particle with mass smaller than twice the top quark mass (\(m < 2m_\text {t} \)), are used to interpret the result as a constraint on the couplings of such new particles [9]. In addition, new particles with \(m > 2m_\text {t} \), such as a heavy scalar (H) or pseudoscalar (A), can be produced on-shell in association with top quarks. They can subsequently decay into top quark pairs, generating final states with three or four top quarks. Constraints on the production of such heavy particles can be interpreted in terms of 2HDM parameters [4,5,6], or in the framework of simplified models of dark matter [7, 8].

When using our \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) to determine a constraint on \(y_{\text {t}}\), we verified using a LO simulation that the signal acceptance is not affected by the relative contribution of the virtual Higgs boson Feynman diagrams. We take into account the dependence of the backgrounds on \(y_{\text {t}}\) by scaling the \(\text {t} {}{\overline{\text {t}}} \text {H} \) cross section by \(|y_{\text {t}}/y_{\text {t}}^{\mathrm {SM}} |^2\) prior to the fit, where \(y_{\text {t}}^{\mathrm {SM}}\) represents the SM value of the top quark Yukawa coupling. As a result of the \(\text {t} {}{\overline{\text {t}}} \text {H} \) background rescaling, the measured \(\sigma (\hbox {pp} \rightarrow \text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)) depends on \(|y_{\text {t}}/y_{\text {t}}^{\mathrm {SM}} |\), as shown in Fig. 5. The measurement is compared to the theoretical prediction obtained from the LO calculation of Ref. [2], scaled to the \(12.0^{+2.2}_{-2.5}\,\text {fb} \) cross section obtained in Ref. [1], and including the uncertainty associated with doubling and halving the renormalization and factorization scales. Comparing the observed limit on \(\sigma (\hbox {pp} \rightarrow \text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)) with the central, upper, and lower values of its theoretical prediction, we obtain 95% \(\text {CL}\) limits of \(|y_{\text {t}}/y_{\text {t}}^{\mathrm {SM}} | < 1.7\), 1.4, and 2.0, respectively, an improvement over the previous CMS result [27]. Alternatively, assuming that the on-shell Yukawa coupling is equal to that of the SM, we do not rescale the \(\text {t} {}{\overline{\text {t}}} \text {H} \) background with respect to its SM prediction, and obtain corresponding limits on the off-shell Yukawa coupling of \(|y_{\text {t}}/y_{\text {t}}^{\mathrm {SM}} | < 1.8\), 1.5, and 2.1. Since \(y_{\text {t}}\) affects the Higgs boson production cross section in both the gluon fusion and \(\text {t} {}{\overline{\text {t}}} \text {H} \) modes, constraints on \(y_{\text {t}}\) can also be obtained from a combination of Higgs boson measurements [74]. However, these constraints require assumptions about the total width of the Higgs boson, while the \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)-based limit does not. For the \(\hat{H}\) interpretation, the BDT analysis is repeated using simulated samples of \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) signal events with different values of \(\hat{H}\) to account for small acceptance and kinematic differences, as described in Sect. 2. We rescale the \(\text {t} {}{\overline{\text {t}}} \text {H} \) cross section by \((1-\hat{H})^2\) to account for its \(\hat{H}\) dependency [11]. This results in the 95% \(\text {CL}\) upper limit of \(\hat{H} < 0.12\). For reference, the authors of Ref. [11] used recent LHC on-shell Higgs boson measurements to set a constraint of \(\hat{H} < 0.16\) at 95% \(\text {CL}\).

To study the off-shell effect of new particles with \(m < 2m_\text {t} \), we first consider neutral scalar (\(\phi \)) and neutral vector (\({\hbox {Z}}^{\prime } \)) particles that couple to top quarks. Such particles are at present only weakly constrained, while they can give significant contributions to the \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) cross section [9]. Having verified in LO simulation that these new particles affect the signal acceptance by less than 10%, we recalculate the \(\sigma (\hbox {pp} \rightarrow \text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)) upper limit of the BDT analysis including an additional 10% uncertainty in the acceptance, and obtain the 95% \(\text {CL}\) upper limit of 23.0\(\,\text {fb}\) on the total \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) cross section, slightly weaker than the 22.5\(\,\text {fb}\) limit obtained in Sect. 7. Comparing this upper limit to the predicted cross section in models where \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) production includes a \(\phi \) or a \({\hbox {Z}}^{\prime } \) in addition to SM contributions and associated interference, we set limits on the masses and couplings of these new particles, shown in Fig. 6. These limits exclude couplings larger than 1.2 for \(m_{\phi }\) in the 25–340\(\,\text {GeV}\) range and larger than 0.1 (0.9) for \(m_\mathrm{Z^{\prime }} = 25\) (300)\(\,\text {GeV}\).

We consider on-shell effects from new scalar and pseudoscalar particles with \(m > 2m_\text {t} \). At such masses, the production rate of these particles in association with a single top quark (\(\text {tq} \text {H}/\text {A} \), \(\text {tW} \text {H}/\text {A} \)) becomes significant, so we include these processes in addition to \(\text {t} \overline{\text {t}}\text {H}/\text {A} \). As pointed out in Ref. [6], these processes do not suffer significant interference with the SM \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) process. To obtain upper limits on the sum of these processes followed by the decay \(\text {H}/\text {A} \rightarrow \text {t} {}{\overline{\text {t}}} \), we use the BDT analysis and treat the SM \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) process as a background. Figure 7 shows the excluded cross section as a function of the mass of the scalar (left) and pseudoscalar (right). Comparing these limits with the Type-II 2HDM cross sections with \(\tan \beta = 1\) in the alignment limit, we exclude scalar (pseudoscalar) masses up to 470 (550)\(\,\text {GeV}\), improving by more than 100\(\,\text {GeV}\) with respect to the previous CMS limits [26]. Alternatively, we consider the simplified model of dark matter defined in Ref. [35], which includes a Dirac fermion dark matter candidate, \(\chi \), in addition to \(\text {H}/\text {A} \), and where the couplings of \(\text {H}/\text {A} \) to SM fermions and \(\chi \) are determined by parameters \(g_\mathrm {SM}\) and \(g_\mathrm {DM}\), respectively. In this model, exclusions similar to those from 2HDM are reached by assuming \(g_\mathrm {SM} = 1\) and \(g_\mathrm {DM} = 1\), and taking \(m_{\text {H}/\text {A}} < 2 m_\chi \). Relaxing the 2HDM assumption of \(\tan \beta = 1\), Fig. 8 shows the 2HDM limit as a function of \(\text {H}/\text {A} \) mass and \(\tan \beta \), considering one new particle at a time and also including a scenario with \(m_\text {H} = m_\text {A} \) inspired by a special case of Type-II 2HDM, the hMSSM [75]. Values of \(\tan \beta \) up to 0.8–1.6 are excluded, depending on the assumptions made. These exclusions are comparable to those of a recent CMS search for the resonant production of \(\text {H}/\text {A} \) in the \(\hbox {p} \rightarrow \text {H}/\text {A} \rightarrow \text {t} {}{\overline{\text {t}}} \) channel [76]. Relaxing the \(m_{\text {H}/\text {A}} < 2 m_\chi \) assumption in the dark matter model, Fig. 9 shows the limit in this model as a function of the masses of both \(\text {H}/\text {A} \) and \(\chi \), for \(g_\mathrm {DM} = 1\) and for two different assumptions of \(g_\mathrm {SM}\). Large sections of the phase space of simplified dark matter models are excluded, and the reach of this analysis is complementary to that of analyses considering decays of \(\text {H}/\text {A} \) into invisible dark matter candidates, such as those of Refs. [35, 77].

The observed \(\sigma (\hbox {pp} \rightarrow \text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)) (solid line) and 95% \(\text {CL}\) upper limit (hatched line) are shown as a function of \(|y_{\text {t}}/y_{\text {t}}^{\mathrm {SM}} |\). The predicted value (dashed line) [2], calculated at LO and scaled to the calculation from Ref. [1], is also plotted. The shaded band around the measured value gives the total uncertainty, while the shaded band around the predicted curve shows the theoretical uncertainty associated with the renormalization and factorization scales

The observed (points) and expected (dashed line) 95% \(\text {CL}\) upper limits on the cross section times branching fraction to \(\text {t} {}{\overline{\text {t}}} \) for the production of a new heavy scalar H (left) and pseudoscalar A (right), as a function of mass. The inner and outer bands around the expected limits indicate the regions containing 68 and 95%, respectively, of the distribution of limits under the background-only hypothesis. Theoretical values are shown for Type-II 2HDM in the alignment limit (solid line) and simplified dark matter (dot-dashed line) models

The observed (solid curve) and expected (long-dashed curve) 95% \(\text {CL}\) exclusion regions in the \(\tan \beta \) versus mass plane for Type-II 2HDM models in the alignment limit for a new scalar H (upper left), pseudoscalar A (upper right), and both (lower) particles. The short-dashed curves around the expected limits indicate the region containing 68% of the distribution of limits expected under the background-only hypothesis. The excluded regions are below the curves

Exclusion regions at 95% \(\text {CL}\) in the plane of \(m_\chi \) vs. \(m_{\text {H}}\) (left) or \(m_{\text {A}}\) (right). The outer lighter and inner darker solid curves show the expected and observed limits, respectively, assuming \(g_\mathrm {SM} = g_\mathrm {DM} = 1\). The excluded regions, shaded, are above the limit curves. The dashed lines show the limits assuming a weaker coupling between \(\text {H}/\text {A} \) and \(\chi \), \(g_\mathrm {DM} = 0.5\)

9 Summary

The standard model (SM) production of \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) has been studied in data from \(\sqrt{s} = 13\,\text {TeV}\) proton–proton collisions collected using the CMS detector during the LHC 2016–2018 data-taking period, corresponding to an integrated luminosity of 137\(\,\text {fb}^{-1}\). The final state with either two same-sign leptons or at least three leptons is analyzed using two strategies, the first relying on a cut-based categorization in lepton and jet multiplicity and jet flavor, the second taking advantage of a multivariate approach to distinguish the \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) signal from its many backgrounds. The more precise multivariate strategy yields an observed (expected) significance of 2.6 (2.7) standard deviations relative to the background-only hypothesis, and a measured value for the \(\text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \) cross section of \(12.6^{+5.8}_{-5.2}\,\text {fb} \). The results based on the two strategies are in agreement with each other and with the SM prediction of \(12.0^{+2.2}_{-2.5}\,\text {fb} \) [1].

The results of the boosted decision tree (BDT) analysis are also used to constrain the top quark Yukawa coupling \(y_{\text {t}}\) relative to its SM value, based on the \(|y_{\text {t}} |\) dependence of \(\sigma (\hbox {pp} \rightarrow \text {t} {}{\overline{\text {t}}} \text {t} {}{\overline{\text {t}}} \)) calculated at leading order in Ref. [2], resulting in the 95% confidence level (\(\text {CL}\)) limit of \(|y_{\text {t}}/y_{\text {t}}^{\mathrm {SM}} | < 1.7\). The Higgs boson oblique parameter in the effective field theory framework [11] is similarly constrained to \(\hat{H} < 0.12\) at 95% \(\text {CL}\). Upper limits ranging from 0.1 to 1.2 are also set on the coupling between the top quark and a new scalar (\(\phi \)) or vector (\({\hbox {Z}}^{\prime } \)) particle with mass less than twice that of the top quark (\(m_\text {t} \)) [9]. For new scalar (\(\text {H} \)) or pseudoscalar (\(\text {A} \)) particles with \(m > 2m_\text {t} \), and decaying to \(\text {t} {}{\overline{\text {t}}} \), their production in association with a single top quark or a top quark pair is probed. The resulting cross section upper limit, between 15 and 35\(\,\text {fb}\) at 95% \(\text {CL}\), is interpreted in the context of Type-II two-Higgs-doublet models [4,5,6, 75] as a function of \(\tan \beta \) and \(m_\mathrm {\text {H}/\text {A}}\), and in the context of simplified dark matter models [7, 8], as a function of \(m_\mathrm {\text {H}/\text {A}}\) and the mass of the dark matter candidate.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: Release and preservation of data used by the CMS Collaboration as the basis for publications is guided by the CMS policy as written in its document "MS data preservation, re-use and open access policy" (https://cmsdocdb.cern.ch/cgi-bin/PublicDocDB/RetrieveFile?docid=6032&filename=CMSDataPolicyV1.2.pdf&version=2).]

References

R. Frederix, D. Pagani, M. Zaro, Large NLO corrections in \(\mathrm{t\overline{t}}{\rm W}^{\pm }\) and \(\mathrm{t\overline{t}}\mathrm{t\overline{t}}\) hadroproduction from supposedly subleading EW contributions. JHEP 02, 031 (2018). https://doi.org/10.1007/JHEP02(2018)031. arXiv:1711.02116

Q.-H. Cao, S.-L. Chen, Y. Liu, Probing Higgs width and top quark Yukawa coupling from \(\mathrm{t\overline{t}}{\rm H}\) and \(\mathrm{t\overline{t}}\mathrm{t\overline{t}}\) productions. Phys. Rev. D 95, 053004 (2017). https://doi.org/10.1103/PhysRevD.95.053004. arXiv:1602.01934

Q.-H. Cao et al., Limiting top–Higgs interaction and Higgs–boson width from multi-top productions. (2019). arXiv:1901.04567

D. Dicus, A. Stange, S. Willenbrock, Higgs decay to top quarks at hadron colliders. Phys. Lett. B 333, 126 (1994). https://doi.org/10.1016/0370-2693(94)91017-0. arXiv:hep-ph/9404359

N. Craig et al., The hunt for the rest of the Higgs bosons. JHEP 06, 137 (2015). https://doi.org/10.1007/JHEP06(2015)137. arXiv:1504.04630

N. Craig et al., Heavy Higgs bosons at low tan \(\beta \): from the LHC to 100 TeV. JHEP 01, 018 (2017). https://doi.org/10.1007/JHEP01(2017)018. arXiv:1605.08744

G. Busoni et al., Recommendations on presenting LHC searches for missing transverse energy signals using simplified \(s\)-channel models of dark matter (2016). arXiv:1603.04156

A. Albert et al., Recommendations of the LHC Dark Matter Working Group: comparing LHC searches for heavy mediators of dark matter production in visible and invisible decay channels (2017). arXiv:1703.05703

E. Alvarez et al., Four tops for LHC. Nucl. Phys. B 915, 19 (2017). https://doi.org/10.1016/j.nuclphysb.2016.11.024. arXiv:1611.05032

N.P. Hartland et al., A Monte Carlo global analysis of the standard model effective field theory: the top quark sector. JHEP 04, 100 (2019). https://doi.org/10.1007/JHEP04(2019)100. arXiv:1901.05965

C. Englert, G. F. Giudice, A. Greljo, M. Mccullough, The \(\hat{H}\)-parameter: an oblique Higgs view (2019). arXiv:1903.07725

P. Ramond, Dual theory for free fermions. Phys. Rev. D 3, 2415 (1971). https://doi.org/10.1103/PhysRevD.3.2415

Y.A. Gol’fand, E.P. Likhtman, Extension of the algebra of Poincaré group generators and violation of P invariance. JETP Lett. 13, 323 (1971)

A. Neveu, J.H. Schwarz, Factorizable dual model of pions. Nucl. Phys. B 31, 86 (1971). https://doi.org/10.1016/0550-3213(71)90448-2

D.V. Volkov, V.P. Akulov, Possible universal neutrino interaction. JETP Lett. 16, 438 (1972)

J. Wess, B. Zumino, A Lagrangian model invariant under supergauge transformations. Phys. Lett. B 49, 52 (1974). https://doi.org/10.1016/0370-2693(74)90578-4

J. Wess, B. Zumino, Supergauge transformations in four dimensions. Nucl. Phys. B 70, 39 (1974). https://doi.org/10.1016/0550-3213(74)90355-1

P. Fayet, Supergauge invariant extension of the Higgs mechanism and a model for the electron and its neutrino. Nucl. Phys. B 90, 104 (1975). https://doi.org/10.1016/0550-3213(75)90636-7

H.P. Nilles, Supersymmetry, supergravity and particle physics. Phys. Rept. 110, 1 (1984). https://doi.org/10.1016/0370-1573(84)90008-5

S.P. Martin, A supersymmetry primer. In: Perspectives on Supersymmetry II, G. L. Kane, ed., p. 1. World Scientific, 2010. Adv. Ser. Direct. High Energy Phys., vol. 21. https://doi.org/10.1142/9789814307505_0001

G.R. Farrar, P. Fayet, Phenomenology of the production, decay, and detection of new hadronic states associated with supersymmetry. Phys. Lett. B 76, 575 (1978). https://doi.org/10.1016/0370-2693(78)90858-4

Particle Data Group, C. Patrignani et al., Review of particle physics. Chin. Phys. C 40, 100001 (2016). https://doi.org/10.1088/1674-1137/40/10/100001

CMS Collaboration, Search for the production of four top quarks in the single-lepton and opposite-sign dilepton final states in proton–proton collisions at \(\sqrt{s}=\) 13 TeV. JHEP (2019). arXiv:1906.02805

ATLAS Collaboration, Search for new phenomena in events with same-charge leptons and b-jets in pp collisions at \(\sqrt{s}= 13\) TeV with the ATLAS detector. JHEP 12, 039 (2018). https://doi.org/10.1007/JHEP12(2018)039. arXiv:1807.11883

ATLAS Collaboration, Search for four-top-quark production in the single-lepton and opposite-sign dilepton final states in pp collisions at \(\sqrt{s}\) = 13 TeV with the ATLAS detector. Phys. Rev. D 99, 052009 (2019). https://doi.org/10.1103/PhysRevD.99.052009. arXiv:1811.02305

C.M.S. Collaboration, Search for physics beyond the standard model in events with two leptons of same sign, missing transverse momentum, and jets in proton–proton collisions at \(\sqrt{s}=13\) TeV. Eur. Phys. J. C 77, 578 (2017). https://doi.org/10.1140/epjc/s10052-017-5079-z. arXiv:1704.07323

CMS Collaboration, Search for standard model production of four top quarks with same-sign and multilepton final states in proton–proton collisions at \(\sqrt{s} = 13\,\text{TeV} \). Eur. Phys. J. C 78, 140 (2018). https://doi.org/10.1140/epjc/s10052-018-5607-5. arXiv:1710.10614

J. Alwall et al., The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. JHEP 07, 079 (2014). https://doi.org/10.1007/JHEP07(2014)079. arXiv:1405.0301

T. Melia, P. Nason, R. Röntsch, G. Zanderighi, W\(^+\)W\(^-\), WZ and ZZ production in the POWHEG BOX. JHEP 11, 078 (2011). https://doi.org/10.1007/JHEP11(2011)078. arXiv:1107.5051

P. Nason, G. Zanderighi, \(\text{ W }^+ \text{ W }^-\), \(\text{ W } \text{ Z }\) and \(\text{ Z } \text{ Z }\) production in the POWHEG BOX V2. Eur. Phys. J. C 74, 2702 (2014). https://doi.org/10.1140/epjc/s10052-013-2702-5. arXiv:1311.1365

D. de Florian et al., Handbook of LHC Higgs cross sections: 4. deciphering the nature of the Higgs sector. CERN Report CERN-2017-002-M (2016). https://doi.org/10.23731/CYRM-2017-002. arXiv:1610.07922

C. Degrande, Automatic evaluation of UV and \({\rm R}_{2}\) terms for beyond the Standard Model Lagrangians: a proof-of-principle. Comput. Phys. Commun. 197, 239 (2015). https://doi.org/10.1016/j.cpc.2015.08.015. arXiv:1406.3030

N.D. Christensen et al., A comprehensive approach to new physics simulations. Eur. Phys. J. C 71, 1541 (2011). https://doi.org/10.1140/epjc/s10052-011-1541-5. arXiv:0906.2474

P.S. Bhupal Dev, A. Pilaftsis, Maximally symmetric two Higgs doublet model with natural standard model alignment. JHEP 12, 024 (2014). https://doi.org/10.1007/JHEP12(2014)024. arXiv:1408.3405. [Erratum: https://doi.org/10.1007/JHEP11(2015)147]

CMS Collaboration, Search for dark matter produced in association with a single top quark or a top quark pair in proton–proton collisions at \( \sqrt{s}=13 \) TeV. JHEP 03, 141 (2019). https://doi.org/10.1007/JHEP03(2019)141. arXiv:1901.01553

NNPDF Collaboration, Parton distributions for the LHC Run II. JHEP 04, 040 (2015). https://doi.org/10.1007/JHEP04(2015)040. arXiv:1410.8849

NNPDF Collaboration, Parton distributions from high-precision collider data. Eur. Phys. J. C 77, 663 (2017). https://doi.org/10.1140/epjc/s10052-017-5199-5. arXiv:1706.00428

T. Sjöstrand, S. Mrenna, P.Z. Skands, A brief introduction to PYTHIA 8.1. Comput. Phys. Commun. 178, 852 (2008). https://doi.org/10.1016/j.cpc.2008.01.036. arXiv:0710.3820

T. Sjöstrand et al., An introduction to PYTHIA 8.2. Comput. Phys. Commun. 191, 159 (2015). https://doi.org/10.1016/j.cpc.2015.01.024. arXiv:1410.3012

J. Alwall et al., Comparative study of various algorithms for the merging of parton showers and matrix elements in hadronic collisions. Eur. Phys. J. C 53, 473 (2008). https://doi.org/10.1140/epjc/s10052-007-0490-5. arXiv:0706.2569

R. Frederix, S. Frixione, Merging meets matching in MC@NLO. JHEP 12, 061 (2012). https://doi.org/10.1007/JHEP12(2012)061. arXiv:1209.6215

P. Skands, S. Carrazza, J. Rojo, Tuning PYTHIA 8.1: the Monash, tune. Eur. Phys. J. C 74(2014), 3024 (2013). https://doi.org/10.1140/epjc/s10052-014-3024-y. arXiv:1404.5630

CMS Collaboration, Event generator tunes obtained from underlying event and multiparton scattering measurements. Eur. Phys. J. C 76, 155 (2016). https://doi.org/10.1140/epjc/s10052-016-3988-x. arXiv:1512.00815

CMS Collaboration, Extraction and validation of a new set of CMS PYTHIA8 tunes from underlying-event measurements. Eur. Phys. J. C (2019). arXiv:1903.12179

GEANT4 Collaboration, Geant4—a simulation toolkit. Nucl. Instrum. Methods A 506, 250 (2003). https://doi.org/10.1016/S0168-9002(03)01368-8

CMS Collaboration, The CMS experiment at the CERN LHC. JINST 3, S08004 (2008). https://doi.org/10.1088/1748-0221/3/08/S08004

CMS Collaboration, The CMS trigger system. JINST 12, P01020 (2017). https://doi.org/10.1088/1748-0221/12/01/P01020. arXiv:1609.02366

M. Cacciari, G.P. Salam, G. Soyez, The anti-\(k_{T}\) jet clustering algorithm. JHEP 04, 063 (2008). https://doi.org/10.1088/1126-6708/2008/04/063. arXiv:0802.1189

M. Cacciari, G.P. Salam, G. Soyez, FastJet user manual. Eur. Phys. J. C 72, 1896 (2012). https://doi.org/10.1140/epjc/s10052-012-1896-2. arXiv:1111.6097

C.M.S. Collaboration, Particle-flow reconstruction and global event description with the CMS detector. JINST 12, P10003 (2017). https://doi.org/10.1088/1748-0221/12/10/P10003. arXiv:1706.04965

C.M.S. Collaboration, Performance of electron reconstruction and selection with the CMS detector in proton–proton collisions at \(\sqrt{s}\) = 8 TeV. JINST 10, P06005 (2015). https://doi.org/10.1088/1748-0221/10/06/P06005. arXiv:1502.02701

C.M.S. Collaboration, Performance of CMS muon reconstruction in pp collision events at \(\sqrt{s}=7\) TeV. JINST 7, P10002 (2012). https://doi.org/10.1088/1748-0221/7/10/P10002. arXiv:1206.4071

M. Cacciari, G.P. Salam, Pileup subtraction using jet areas. Phys. Lett. B 659, 119 (2008). https://doi.org/10.1016/j.physletb.2007.09.077. arXiv:0707.1378

CMS Collaboration, Jet energy scale and resolution in the CMS experiment in pp collisions at 8 TeV. JINST 12, P02014 (2016). https://doi.org/10.1088/1748-0221/12/02/P02014. arXiv:1607.03663

CMS Collaboration, Jet algorithms performance in 13 TeV data. CMS Physics Analysis Summary CMS-PAS-JME-16-003 (2017)

CMS Collaboration, Performance of missing energy reconstruction in 13 TeV pp collision data using the CMS detector. CMS Physics Analysis Summary CMS-PAS-JME-16-004 (2016)

CMS Collaboration, Identification of heavy-flavour jets with the CMS detector in pp collisions at 13 TeV. JINST 13, P05011 (2018). https://doi.org/10.1088/1748-0221/13/05/P05011. arXiv:1712.07158

CMS Collaboration, Performance of missing transverse momentum reconstruction in proton–proton collisions at \(\sqrt{s} = 13\) TeV using the CMS detector. JINST 14, P07004 (2019). https://doi.org/10.1088/1748-0221/14/07/P07004. arXiv:1903.06078

CMS Collaboration, Search for new physics in same-sign dilepton events in proton–proton collisions at \(\sqrt{s} = 13\,\text{ TeV } \). Eur. Phys. J. C 76, 439 (2016). https://doi.org/10.1140/epjc/s10052-016-4261-z. arXiv:1605.03171

CMS Collaboration, Measurements of \(\mathrm{t\overline{t}}\) cross sections in association with b jets and inclusive jets and their ratio using dilepton final states in pp collisions at \(\sqrt{s}\) = 13 TeV. Phys. Lett. B 776, 355 (2018). https://doi.org/10.1016/j.physletb.2017.11.043. arXiv:1705.10141

CMS Collaboration, CMS technical design report for the pixel detector upgrade. Technical Report CERN-LHCC-2012-016 (2012)

CMS Collaboration, CMS luminosity measurements for the 2016 data taking period. CMS Physics Analysis Summary CMS-PAS-LUM-17-001 (2017)

CMS Collaboration, CMS luminosity measurement for the 2017 data-taking period at \(\sqrt{s} = 13~\rm TeV\). CMS Physics Analysis Summary CMS-PAS-LUM-17-004 (2018)

CMS Collaboration, CMS luminosity measurement for the 2018 data-taking period at \(\sqrt{s} = 13~\)TeV. CMS Physics Analysis Summary CMS-PAS-LUM-18-002 (2019)

CMS Collaboration, Measurement of the inelastic proton–proton cross section at \( \sqrt{s}=13 \) TeV. JHEP 07, 161 (2018). https://doi.org/10.1007/JHEP07(2018)161. arXiv:1802.02613

CMS Collaboration, Observation of \(\rm t\overline{\text{ t }}\)H production. Phys. Rev. Lett. 120, 231801 (2018). https://doi.org/10.1103/PhysRevLett.120.231801. arXiv:1804.02610

ATLAS and CMS Collaborations, Procedure for the LHC Higgs boson search combination in summer 2011. ATL-PHYS-PUB-2011-011, CMS NOTE-2011/005 (2011)

T. Junk, Confidence level computation for combining searches with small statistics. Nucl. Instrum. Methods A 434, 435 (1999). https://doi.org/10.1016/S0168-9002(99)00498-2. arXiv:hep-ex/9902006

A.L. Read, Presentation of search results: the \(\text{ CL }_\text{ s }\) technique. J. Phys. G 28, 2693 (2002). https://doi.org/10.1088/0954-3899/28/10/313

G. Cowan, K. Cranmer, E. Gross, O. Vitells, Asymptotic formulae for likelihood-based tests of new physics. Eur. Phys. J. C 71, 1554 (2011). https://doi.org/10.1140/epjc/s10052-011-1554-0. arXiv:1007.1727. [Erratum: 10.1140/epjc/s10052-013-2501-z]

ATLAS Collaboration, Measurement of the \(\mathrm{t\overline{t}}{\rm Z}\) and \(\mathrm{t\overline{t}}{\rm W}\) cross sections in proton–proton collisions at \(\sqrt{s}=13\) TeV with the ATLAS detector. Phys. Rev. D 99, 072009 (2019). https://doi.org/10.1103/PhysRevD.99.072009. arXiv:1901.03584

CMS Collaboration, Measurement of the cross section for top quark pair production in association with a W or Z boson in proton–proton collisions at \(\sqrt{s} =\) 13 TeV. JHEP 08, 011 (2018). https://doi.org/10.1007/JHEP08(2018)011. arXiv:1711.02547

CMS Collaboration, Measurement of top quark pair production in association with a Z boson in proton–proton collisions at \(\sqrt{s}=\) 13 TeV. JHEP (2019). arXiv:1907.11270

ATLAS and CMS Collaborations, Measurements of the Higgs boson production and decay rates and constraints on its couplings from a combined ATLAS and CMS analysis of the LHC pp collision data at \( \sqrt{s}=7 \) and 8 TeV. JHEP 08, 045 (2016). https://doi.org/10.1007/JHEP08(2016)045. arXiv:1606.02266

A. Djouadi et al., The post-Higgs MSSM scenario: Habemus MSSM? Eur. Phys. J. C 73, 2650 (2013). https://doi.org/10.1140/epjc/s10052-013-2650-0. arXiv:1307.5205

CMS Collaboration, Search for heavy Higgs bosons decaying to a top quark pair in proton–proton collisions at \(\sqrt{s} =\) 13 TeV (2019). JHEP arXiv:1908.01115

ATLAS Collaboration, Search for dark matter produced in association with bottom or top quarks in \(\sqrt{s}=13\) TeV pp collisions with the ATLAS detector. Eur. Phys. J. C 78, 18 (2018). https://doi.org/10.1140/epjc/s10052-017-5486-1. arXiv:1710.11412

Acknowledgements

We congratulate our colleagues in the CERN accelerator departments for the excellent performance of the LHC and thank the technical and administrative staffs at CERN and at other CMS institutes for their contributions to the success of the CMS effort. In addition, we gratefully acknowledge the computing centers and personnel of the Worldwide LHC Computing Grid for delivering so effectively the computing infrastructure essential to our analyses. Finally, we acknowledge the enduring support for the construction and operation of the LHC and the CMS detector provided by the following funding agencies: BMBWF and FWF (Austria); FNRS and FWO (Belgium); CNPq, CAPES, FAPERJ, FAPERGS, and FAPESP (Brazil); MES (Bulgaria); CERN; CAS, MoST, and NSFC (China); COLCIENCIAS (Colombia); MSES and CSF (Croatia); RPF (Cyprus); SENESCYT (Ecuador); MoER, ERC IUT, PUT and ERDF (Estonia); Academy of Finland, MEC, and HIP (Finland); CEA and CNRS/IN2P3 (France); BMBF, DFG, and HGF (Germany); GSRT (Greece); NKFIA (Hungary); DAE and DST (India); IPM (Iran); SFI (Ireland); INFN (Italy); MSIP and NRF (Republic of Korea); MES (Latvia); LAS (Lithuania); MOE and UM (Malaysia); BUAP, CINVESTAV, CONACYT, LNS, SEP, and UASLP-FAI (Mexico); MOS (Montenegro); MBIE (New Zealand); PAEC (Pakistan); MSHE and NSC (Poland); FCT (Portugal); JINR (Dubna); MON, RosAtom, RAS, RFBR, and NRC KI (Russia); MESTD (Serbia); SEIDI, CPAN, PCTI, and FEDER (Spain); MOSTR (Sri Lanka); Swiss Funding Agencies (Switzerland); MST (Taipei); ThEPCenter, IPST, STAR, and NSTDA (Thailand); TUBITAK and TAEK (Turkey); NASU and SFFR (Ukraine); STFC (UK); DOE and NSF (USA). Individuals have received support from the Marie-Curie program and the European Research Council and Horizon 2020 Grant, contract Nos. 675440, 752730, and 765710 (European Union); the Leventis Foundation; the A.P. Sloan Foundation; the Alexander von Humboldt Foundation; the Belgian Federal Science Policy Office; the Fonds pour la Formation à la Recherche dans l’Industrie et dans l’Agriculture (FRIA-Belgium); the Agentschap voor Innovatie door Wetenschap en Technologie (IWT-Belgium); the F.R.S.-FNRS and FWO (Belgium) under the “Excellence of Science – EOS” – be.h project n. 30820817; the Beijing Municipal Science & Technology Commission, No. Z181100004218003; the Ministry of Education, Youth and Sports (MEYS) of the Czech Republic; the Lendület (“Momentum”) Program and the János Bolyai Research Scholarship of the Hungarian Academy of Sciences, the New National Excellence Program ÚNKP, the NKFIA research Grants 123842, 123959, 124845, 124850, 125105, 128713, 128786, and 129058 (Hungary); the Council of Science and Industrial Research, India; the HOMING PLUS program of the Foundation for Polish Science, cofinanced from European Union, Regional Development Fund, the Mobility Plus program of the Ministry of Science and Higher Education, the National Science Center (Poland), contracts Harmonia 2014/14/M/ST2/00428, Opus 2014/13/B/ST2/02543, 2014/15/B/ST2/03998, and 2015/19/B/ST2/02861, Sonata-bis 2012/07/E/ST2/01406; the National Priorities Research Program by Qatar National Research Fund; the Ministry of Science and Education, Grant No. 3.2989.2017 (Russia); the Programa Estatal de Fomento de la Investigación Científica y Técnica de Excelencia María de Maeztu, Grant MDM-2015-0509 and the Programa Severo Ochoa del Principado de Asturias; the Thalis and Aristeia programs cofinanced by EU-ESF and the Greek NSRF; the Rachadapisek Sompot Fund for Postdoctoral Fellowship, Chulalongkorn University and the Chulalongkorn Academic into Its 2nd Century Project Advancement Project (Thailand); the Welch Foundation, contract C-1845; and the Weston Havens Foundation (USA).

Author information

Authors and Affiliations

Consortia

Rights and permissions