Abstract

In this study, the relation between two approaches to assess the ocean predictability on interannual to decadal time scales is investigated. The first pragmatic approach consists of sampling the initial condition uncertainty and assess the predictability through the divergence of this ensemble in time. The second approach is provided by a theoretical framework to determine error growth by estimating optimal linear growing modes. In this paper, it is shown that under the assumption of linearized dynamics and normal distributions of the uncertainty, the exact quantitative spread of ensemble can be determined from the theoretical framework. This spread is at least an order of magnitude less expensive to compute than the approximate solution given by the pragmatic approach. This result is applied to a state-of-the-art Ocean General Circulation Model to assess the predictability in the North Atlantic of four typical oceanic metrics: the strength of the Atlantic Meridional Overturning Circulation (AMOC), the intensity of its heat transport, the two-dimensional spatially-averaged Sea Surface Temperature (SST) over the North Atlantic, and the three-dimensional spatially-averaged temperature in the North Atlantic. For all tested metrics, except for SST, \(\sim\) 75% of the total uncertainty on interannual time scales can be attributed to oceanic initial condition uncertainty rather than atmospheric stochastic forcing. The theoretical method also provide the sensitivity pattern to the initial condition uncertainty, allowing for targeted measurements to improve the skill of the prediction. It is suggested that a relatively small fleet of several autonomous underwater vehicles can reduce the uncertainty in AMOC strength prediction by 70% for 1–5 years lead times.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Anthropogenic global warming has changed Earth’s climate over the last century (IPCC 2007, 2013) In this context, there is an ever increasing societal pressure to predict (as accurately as possible) climate changes from local to global scales and from seasonal to centennial time scales. Previous studies have suggested that on interannual to decadal time scales internal variability dominates the uncertainty in global temperature changes, whereas on multidecadal to centennial time scales the emission scenarios are more important (Hawkins and Sutton 2009; Branstator and Teng 2012; IPCC 2013). Because of its slow variability and its large heat content, the ocean can control climate variations on interannual to decadal time scales. For example, variability of the Atlantic Meridional Overturning Circulation (AMOC) can suppress anthropogenically forced warming in the North Atlantic (Drijfhout 2015). Hence predicting AMOC variations, and more generally the North Atlantic Ocean state is crucial for accurate climate predictions on interannual to decadal time scales. Moreover, because of its robust quasi-harmonic multi-decadal variation (Kushnir 1994; Frankcombe and Dijkstra 2009; Chylek et al. 2011), the North Atlantic is a perfect candidate for successful interannual to decadal climate prediction (Griffies and Bryan 1997).

Considering the chaotic nature of several components of the climate system, in particular the atmosphere (Lorenz 1963), a pragmatic approach has been widely used to determine climate predictability on different time scales (Hurrell et al. 2006; Meehl et al. 2009). This approach consists of acknowledging the uncertainty in initial conditions by taking an ensemble of nearby initial conditions and evaluating the predictability by computing the time evolution of the spread of this ensemble (e.g., Boer 2011). There are various strategies to accurately sample the initial condition uncertainty and to accurately define the ensemble (e.g., Persechino et al. 2013). However, except for a few dedicated studies (e.g., Du et al. 2012; Baehr and Piontek 2014; Germe et al. 2017b), the role of the oceanic uncertainties has often been overlooked in these ensemble design strategies, despite being a possible source of predictability (Hawkins et al. 2016).

Since the early work of Griffies and Bryan (1997), there has been a large body of work suggesting the predictability on interannual to decadal time scales of climatically relevant metrics of the North Atlantic Ocean, such as the AMOC (Collins and Sinha 2003; Pohlmann et al. 2004; Collins et al. 2006; Latif et al. 2006; Keenlyside et al. 2008; Msadek et al. 2010; Teng et al. 2011). For example, Branstator and Teng (2014) suggested predictability of up to 20 years for one AMOC mode of variability, whereas Hermanson and Sutton (2010) suggested an average predictability of 5 years for the annual AMOC. Although using different methods to assess predictability, these two examples illustrate the difficulty of a robust quantitative estimation of AMOC predictability. Hence, despite good qualitative progress in assessing the interannual to decadal predictability of the North Atlantic climate variability, the quantitative results still substantially differ between studies. This disagreements might be caused by the model uncertainty that has been showed to dominate on decadal time scale (Hawkins and Sutton 2009). Beyond this, one other hypothesis to explain the lack of quantitative agreement is the rather small number of members (\(\sim\) 10) used to build the ensembles (Sévellec and Sinha 2017). This number is far from being enough to systematically sample the initial condition uncertainty of the climate system (Deser et al. 2012). Hence another methodology is highly desirable to assess predictability on interannual to decadal time scales.

Generalized Stability Analysis has been developed to estimate the transient growth of small perturbations (GSA; Farrell and Ioannou 1996a, b) rather than their asymptotic growth, as used in classical Linear Stability Analysis (Strogatz 1994) This generalization to finite time development makes GSA particularly well suited to study predictability (Palmer 1999). Hence, in the context of the North Atlantic there has been a wide range of applications with this method, from idealized ocean models to fully coupled ones (Tziperman and Ioannou 2002; Zanna and Tziperman 2008; Alexander and Monahan 2009; Hawkins and Sutton 2011) and even observations (Zanna 2012). Sévellec et al. (2007) suggested a subtle but fundamental modification of the GSA: instead of estimating the maximum growth of perturbations through a quadratic norm, one can estimate the maximum change in any linear combination of the state-variables.

This modification simplifies the solution going from an eigenvalues problem (i.e., singular value decomposition, hard to tackle in a state-of-the-art climate model) to an explicit solution (i.e., Linear Optimal Perturbation—LOP, Sévellec et al. 2007). This allowed the study of a wide range of climatically relevant problems of the North Atlantic from idealized ocean models (Sévellec et al. 2007) to state-of-the-art ocean general circulation models (Sévellec and Fedorov 2017). Also, through this new formulation of GSA, changes are not restricted to non-normal growths and are easily comparable to the pragmatic ensemble approach based on perturbing initial conditions.

These methods are particularly useful to determine regions of sensitivities to ocean initial conditions (Montani et al. 1999; Leutbecher et al. 2002). They have been shown to be extremely efficient in improving typhoon forecasts (Qin and Mu 2011; Zhou and Mu 2011), for instance. Hence, in the context of the AMOC, Wunsch (2010) and Heimbach et al. (2011) suggested the use of these methods to assess regions where enhanced sampling strategy can improve prediction. Alternative methods to assess the effect of enhanced sampling strategies have also been proposed, such as initializing different layers of the ocean (Dunstone and Smith 2010) but these methods are not yet able to determine the most sensitive region with the same level of details.

In this study, the relation between the two approaches is discussed and it is shown that under two assumptions (linearized dynamics and normal distribution of uncertainties), the spread of an ensemble can be retrieved from a theoretical framework. Unlike the ensemble simulation strategy, the theoretical framework provides an exact quantitative estimate of the predictability (measured through the Predictive Power), as it does not depend on the arbitrary number of members like the ensemble method. In Sect. 2, we develop the method for a quantitative assessment of the predictability and illustrate the solution using an idealized stochastic model. In Sect. 3, we apply the solution to a state-of-the-art ocean general circulation model (GCM) for four ocean metrics related to the AMOC. Applications to the design of efficient monitoring systems for climate prediction are given in Sect. 4. A discussion on the limits of the methods, conclusions and directions for future work are included in Sect. 5.

2 Theory

2.1 Propagating errors in a linear framework

Here we discuss the mathematical framework of our method. Readers more interested in its application to the North Atlantic Ocean circulation can skip this section.

The prognostic equations of the full nonlinear ocean model can, after discretization, be written as a general non-autonomous finite-dimensional dynamical system:

where \(\mathcal {N}\) are time-dependent nonlinear terms and the forcing of the model, \(\left| {{\varvec{U}}} \right\rangle\) is a state vector consisting of all prognostic variables, and t is time. The state vector is comprised of the three dimensional fields of temperature, salinity, and zonal and meridional velocities, together with the two dimensional field of barotropic stream function. Since we study a finite-dimensional vector space, we can also define a dual vector \(\left\langle {{\varvec{U}}} \right|\) through the Euclidian scalar product \(\left\langle {{\varvec{U}}|{\varvec{U}}} \right\rangle\).

We decompose the state vector as \(\left| {{\varvec{U}}} \right\rangle\) = \(\left| {{{\bar{\varvec{U}}}}} \right\rangle\) + \(\left| {{\varvec{u}}} \right\rangle\), where \(\left| {{{\bar{\varvec{U}}}}} \right\rangle\) is the nonlinear annually varying trajectory (i.e., the climatological background state) and \(\left| {{\varvec{u}}} \right\rangle\) is a perturbation. Under the assumptions of small perturbations and following Farrell and Ioannou (1996b), the temporal evolution of the perturbation follows a linear equation:

where \({{\mathsf {{A}}}}(t)\) is a Jacobian matrix (a function of the trajectory \(\left| {{{\bar{\varvec{U}}}}} \right\rangle\)) and \(\left| {{\varvec{f}}^\mathrm{sto}\left( t\right) }\right\rangle\) is an anomalous stochastic forcing imposed by the atmospheric synoptic noise, now made explicit. We also define an adjoint to the Jacobian matrix \({{\mathsf {{A}}}}^\dagger\) as \(\langle {{\varvec{a}}|{{\mathsf {{A}}}}|{\varvec{b}}} \rangle\) = \(\langle {{\varvec{b}}|{{\mathsf {{A}}}}^\dagger |{\varvec{a}}} \rangle\), where \(\left| {{\varvec{a}}} \right\rangle\) and \(\left| {{\varvec{b}}} \right\rangle\) are two anomalous state vectors.

We can integrate (2) to obtain an explicit expression for the perturbation as a function of time (Farrell and Ioannou 1996b)

where \(\left| {{\varvec{u}}^\mathrm{ini}(t)} \right\rangle\) is the perturbation at time t induced by the initial disturbance at time \(t_i\), such that \(\left| {{\varvec{u}}(t_i)} \right\rangle\) = \(\left| {{\varvec{u}}^\mathrm{ini}(t_i)} \right\rangle ;\) \(\left| {{\varvec{u}}^\mathrm{sto}(t)} \right\rangle\) is the perturbation induced by the stochastic forcing; and \({{\mathsf {{M}}}}(t,t_i)\) is called the propagator of the linearized dynamics from the initial time \(t_i\) to a time t. In general the propagator does not commute with its adjoint, \({{\mathsf {{M}}}}^\dagger (t_i,t){{\mathsf {{M}}}}(t,t_i) \ne {{\mathsf {{M}}}}(t,t_i){{\mathsf {{M}}}}^\dagger (t_i,t)\), in which case the dynamics is said to be non-normal.

2.2 Ensemble spread and predictability

To evaluate the ocean state predictability we will focus on physical metrics of the form \(\left\langle {{\varvec{F}}|{\varvec{u}}} \right\rangle\), where \(\left| {{\varvec{F}}} \right\rangle\) is a vector defining the cost function. The derivation below is adapted from the theoretical framework set by Chang et al. (2004) to fit the specific goals of our analysis.

Hence, according to (3), we can also follow the evolution of any metrics through the expression:

We use the variance to evaluate the spread of the ensemble. The independence of initial oceanic disturbances and atmospheric stochastic disturbances suggests that their covariance is weak and can be neglected. Hence, we obtain:

where \(\sigma ^2_\text {ini}\) and \(\sigma ^2_\text {sto}\) are the variances induced by a large sets of N initial oceanic disturbances (\(\left| {{\varvec{u}}^\mathrm{ini}_k(t_i)} \right\rangle\)) and of corresponding surface atmospheric disturbances (\(\left| {{\varvec{f}}^\mathrm{sto}_k(s)} \right\rangle\)), respectively (k being the index of the random realization or of the individual member in the ensemble).

These two variances can be expressed as:

since \(\lim _{N\rightarrow \infty }\sum _{k=1}^N\left\langle {{\varvec{F}}|{\varvec{u}}^\mathrm{ini}_k(t)} \right\rangle\) = 0 and \(\lim _{N\rightarrow \infty }\sum _{k=1}^N\left\langle {{\varvec{F}}|{\varvec{u}}^\mathrm{sto}_k(t)} \right\rangle\)=0 by definition of the linear framework. Hence the spread of the ensemble is

The Eqs. (7) suggest that to measure the spread of initial and stochastic disturbances we can either propagate all the N initial and stochastic disturbances and project them onto the cost function or propagate (with the adjoint) the cost function only once and project it on the N initial and stochastic disturbances. Obviously, the latter is a lot more efficient and will even allow to rebuild the entire probability density function (PDF). Indeed, the application of a moment (here the second moment or variance) is applied as a post-computation diagnostic. Hence, this demonstration can be reproduced for any statistical moment (not only the variance), so that the statistical properties of the oceanic initial condition and atmospheric stochastic forcing uncertainties can be propagated in the same way. Applying this procedure on any statistical moment of the PDF (\(\mu _n^\mathrm{ini}\) and \(\mu _n^\mathrm{sto}\)), we obtain:

Coming back to the special case of the variance, and acknowledging that \(\left\langle {{\varvec{F}}|{\varvec{v}}} \right\rangle ^2\)=\(\left\langle {{\varvec{F}}|{\varvec{v}}} \right\rangle \left\langle {{\varvec{v}}|{\varvec{F}}} \right\rangle\) (where \(\left| {{\varvec{v}}} \right\rangle\) is any disturbance), (7) can be even more efficiently expressed as:

where \({\mathsf {\varvec{\Sigma }}}_\text {ini}\) and \({\mathsf {\varvec{\Sigma }}}_\text {sto}\) are the variance matrices for initial and stochastic disturbances, respectively. Here we have used the properties of the white noise, both for oceanic initial condition and atmospheric stochastic disturbances: \(\left\langle {{\varvec{u}}^\mathrm{ini}_k(t_i)|{\varvec{u}}^\mathrm{ini}_l(t_i)} \right\rangle\) = \(\sigma ^2_\text {ini}\delta _{k,l}\) and \(\left\langle {{\varvec{f}}^\mathrm{sto}_k(s)|{\varvec{f}}^\mathrm{sto}_l(s')} \right\rangle\) = \(\sigma ^2_\text {sto}\delta _{k,l}\delta (s-s')\) where \(\delta _{k,l}\) is the Kronecker delta and \(\delta (s-s')\) is the Dirac delta function. The \({\mathsf {\varvec{\Sigma }}}_\text {ini}\) and \({\mathsf {\varvec{\Sigma }}}_\text {sto}\) matrices are diagonal where the terms on the diagonal are the variances of the uncertainty at each particular locations. It is important to note that these variance matrices do not provide a norm but a pseudo norm, since local variances can be zero, leading to singularities in the operators. These Eqs. (9) demonstrate that the knowledge of the oceanic initial condition and atmospheric stochastic forcing variances, together with the propagation of the cost function by the adjoint is enough to diagnose at all times the evolution of the system variance measured through the cost function.

2.3 Application to an idealized stochastic model

To illustrate our ability to compute the ensemble spread without running an ensemble we apply our method to an idealized stochastic model of anomalous sea surface temperature (SST), where both oceanic initial condition uncertainty (white noise with \(\sigma _\text {SST}\) = 5 \(\times\) 10\(^{-2}\) K) and atmospheric stochastic forcing (white noise with \(\sigma _\text {SAT}\) = 0.3 K) are imposed. The stochastic idealized model is derived from Hasselmann (1976) and Frankignoul and Hasselmann (1977), and reads:

where \(\text {SST}_k\) and \(\text {SAT}_k\) are random realizations of anomalous sea surface temperature and surface atmospheric temperature, respectively; k is the index of the random realization or of the individual member of the ensemble, and \(\gamma\) is the inverse of an oceanic damping time scale set to 10 years.

Using this model we compute the ensemble spread through (9). Indeed, the solution of this idealized model of a Langevin equation is an Ornstein–Uhlenbeck process which can be determined analytically (see for example section 3.4 in Dijkstra 2013). Hence, we obtain the evolution of the oceanic uncertainties in the context of the idealized model as:

To validate the analytical result we also diagnose the total uncertainty from a large ensemble of 1000 members (differing in their random realization of both oceanic initial condition uncertainty—\(\text {SST}_k(0)\)—and atmospheric stochastic forcing—\(\text {SAT}_k(t)\), Fig. 1a). As expected these two diagnostics are identical (Fig. 1b), although the former is far more quantitatively accurate and computationally efficient (a single simulation vs 1000 ones). Also, (9) allows us to attribute at each lead time the relative contribution of the two uncertainties in the total variance (Fig. 1c), which cannot be disentangled robustly with a classical forward integration of a large ensemble.

a Increase in anomalous sea surface temperature uncertainty for the idealized stochastic model descibed in Eq. (10). Each grey lines correspond to individual members— \(\text {SST}_k(t)\). Thick black line is the ensemble mean, and thin black lines together with the grey shaded region represent plus/minus one ensemble standard deviation. b The ensemble spread (thick black line) is equivalent to the theoretical result (red dashed line) following the sum of Eqs. (11a) and (11b), whereas the perfect case (thin black line) represents the spread when initial condition uncertainty is ignored, uncertainty being restricted to the stochastic forcing following Eq. (11b). c Fraction of the variance between the two sources of uncertainty: (red) the atmospheric stochastic forcing following Eq. (11b) and (blue) the oceanic initial condition following Eq. (11a)

3 Application to a detailed ocean GCM

3.1 Experimental set-up

To apply the exact same procedure as in Sect. 2.3, but now in a more realistic setting, we use a forced ocean GCM (NEMO-OPA 8.2, Madec et al. 1998) in a global realistic configurations (with a horizontal resolution of 2\(^\circ \times\) 2 \(^\circ\) and 31 vertical levels with a level distance ranging from 10 to 500 m, Madec and Imbard 1996). We also use its tangent and adjoint components (OPATAM, Weaver et al. 2003). The combined model configuration follows Sévellec and Fedorov (2017) under flux boundary condition. We refer the reader to this study for further details of the model configuration and the climatological background state used here.

Despite being the typical configuration used in current climate prediction systems and in the last Coupled Model Intercomparison Project (CMIP5, Taylor et al. 2012), the use of non-eddy-resolving model is not trivial. Indeed the ocean chaotic behaviour is absent from this low resolution model. However, the oceanic solutions of these type of models remain irregular through ocean–atmosphere interactions and the propagation of the atmospheric uncertainty in the (almost-laminar) ocean (Germe et al. 2017b). As a result, in this study we assume that the oceanic uncertainty is forced by the chaotic nature of the atmosphere.

We select four typical ocean metrics of the North Atlantic. The intensity of the AMOC (MVT, measured as the meridional volume transport above 1500 m at 50\(^\circ\)N), the meridional heat transport (MHT, measured at 25\(^\circ\)N), the spatially-mean SST (SST, average from 30\(^\circ\)N to 70\(^\circ\)N) and the oceanic heat content (OHC, measured as the mean temperature from 30\(^\circ\)N to 70\(^\circ\)N and from the surface to the bottom of the ocean).

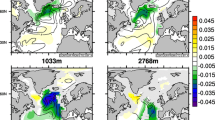

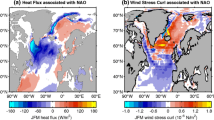

The two sources of uncertainty are assumed to be represented by white noise. For the oceanic initial condition uncertainty, given our relative poor knowledge of its true nature (Germe et al. 2017a) we choose a spatially-uniform white noise. The intensity of this spatially-uniform white noise was determined from typical uncertainties in ocean observations. To this end we used errors estimate from Argo float measurements in the North Atlantic (Debruyères et al. 2016). This dataset provides 10-day mean uncertainties after objective interpolation on a 2\(^\circ\) by 2\(^\circ\) and 20 dB uniform grid over the 2004–2015 period. From this dataset we have built a distribution (regardless of the time or of the horizontal and vertical locations of the uncertainties) for the mean ORCA2-grid volume after assuming the independence of the uncertainties. To estimate a typical uncertainty we compute the mode (most frequent value), the median and the mean of this error distribution. We obtained 0.001, 0.05 and 0.13 K for these measures. (Note that typical error values for salinity are roughly equivalent after rescaling them in term of temperature, using a linear equation of state for seawater: 0.005, 0.04, and 0.12 K.) Hence, to cover the full range of uncertainties, we use three intensities: low with a standard deviation of 0.025 K, medium with 0.05 K and high with 0.1 K. These values allow us to test the role of this parameter and explore different plausible values. For the atmospheric stochastic forcing, we also assume a white noise representation. The uncertainty intensity follows the standard deviation of SST and Sea Surface Salinity (SSS) diagnosed from a long control simulation of the IPSL coupled model (Fig. 2), which used NEMO as its oceanic component (Mignot et al. 2013). To convert this temperature and salinity variations into tendency variations we scale them with a typical atmospheric synoptic timescale of 7 days.

a, b Standard deviation of SST and SSS, respectively, from a long simulation of the IPSL model (Mignot et al. 2013). This standard deviations are used has the intensity of the atmospheric stochastic forcing (note that the atmospheric stochastic forcing is applied globally, despite being represented here only in the North Atlantic, region of interest of the study)

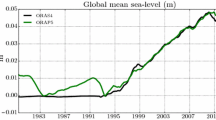

3.2 Error growth attribution

Using this experimental set-up together with (9), we are able to diagnose the exact theoretical growth of the uncertainty (Fig. 3a1–4) and can attribute the contribution of oceanic initial condition and atmospheric stochastic forcing to the total uncertainty (Fig. 3b1–4). To compare our results with previous studies we also diagnose the Predictive Power such as PP = \(1-\sigma ^2(t)/\sigma ^2_\infty\) (Schneider and Griffies 1999), where \(\sigma ^2_\infty\) is diagnosed as the variance of the ensemble spread in (5) evaluated at t = 40 year for a given metric (Fig. 3c1–4). This diagnostic is 0 if the uncertainty reaches its asymptotic value and 1 if negligible (negative values suggest that the uncertainty exceed asymptotic value).

a1–4 Growth in the uncertainty using NEMO for four metrics of the North Atlantic ocean: a–c1 meridional volume transport at 50\(^\circ\)N and 1500 m depth (MVT), a–c2 meridional heat transport at 25\(^\circ\)N (MHT), a–c3 sea surface temperature averaged in the Atlantic from 30\(^\circ\)N to 70\(^\circ\)N (SST), and a–c4 temperature averaged in the Atlantic from 30\(^\circ\)N to 70\(^\circ\)N and from surface to bottom (OHC). The thick solid lines correspond to a uniform uncertainty in the oceanic initial condition of (blue—low) 0.025 K, (purple—medium) 0.05 K, and (red—high) 0.1 K of standard deviation. The black thin line represents the perfect case where the oceanic initial condition uncertainties are neglected. b1–4 Attribution of uncertainty growth between atmospheric stochastic forcing and oceanic initial condition, using the medium uncertainty for the latter (0.05 K). c1–4 as a1–4 but for predictive power [PP = \(1-\sigma ^2(t)/\sigma ^2_\infty\), where \(\sigma ^2_\infty\) is evaluated at 40 years]

Our analysis shows that the uncertainty for the SST metric is almost equal to its asymptotic value after a few years (Fig. 3a3). Also, the oceanic initial condition uncertainty does not seem to play an important role for SST (Fig. 3b3). This suggests that the atmospheric synoptic noise is the main driver of the error growth. This leads to a rather weak Predictive Power over the 40 years tested (Fig. 3c3), suggesting that our ability to predict (i.e., potential prediction skill of) SST is restricted to values of less than 20% of its long-term variance and to interannual time scales. We emphasize, however, that this metric is not well represented in a forced ocean context. Hence conclusions from this experimental set-up might not be directly applicable to the coupled climate system.

For the three other metrics (MVT, MHT, and OHC) the uncertainty reaches its asymptotic value on much slower, multidecadal time scales (Fig. 3a1, 2, and 4). For OHC, however, the standard deviation is still slightly increasing at 40 years. This might be problematic since \(\sigma ^2(40~years)<\sigma ^2_\infty\), which potentially lead to an underestimation of the predictive power. Unlike SST, the OHC metric is also sensitive to the oceanic initial condition uncertainty. In the perfect case (absence of oceanic initial condition uncertainty), the error growth increases almost monotonically with time (Fig. 3a4), leading to an almost linear decrease of the Predictive Power from 1 to 0 over the 40 years tested (Fig. 3c4). This suggests that, in the absence of oceanic initial condition uncertainty, OHC has a predictive skill that remains above 80% up to a decade, above 50% up to 20 years, and below 20% after 3 decades. When not neglected the impact of oceanic initial condition uncertainty are mainly occurring over the first two decades (Fig. 3a4). Depending on the intensity of this error it can significantly impact the Predictive Power (Fig. 3c4). In the most extreme case it suggests the absence of potential prediction skill after only 5 years. In this case it induces a slight overshoot (a variance bigger than the asymptotic value) of the OHC variance peaking between 10 and 20 years. This implies that an initial error of such an intensity has huge repercussion on prediction systems by pushing them beyond their natural attractor. However, using the average value for the oceanic uncertainty, we find that it dominates the error growth on time scales up to 15 years, with a maximum impact of 75% of the error growth on interannual time scales (Fig. 3b4). This suggests that accurate oceanic initial condition can improve significantly the potential prediction skill of OHC on interannual to decadal time scales.

The two last metrics (MVT and MHT) show the same overall behaviours, but differ from OHC. In the absence of oceanic initial condition uncertainty, the two error growths increase with time until a saturation value is reached around 20–30 years (Fig. 3a1–2). This reflects on the predictive powers as an almost monotonous decrease in the exception of a plateau over the first \(\sim\) 5 years (Fig. 3c1–2). When an oceanic initial condition uncertainty is applied the instantaneous error growth of the two metrics becomes huge, regardless of the applied intensity (Fig. 3a1–2). This suggests that initial error on the oceanic field can lead to an overshoot of MVT and MHT variability, even for relatively weak intensity (0.025 K). This is of importance for prediction systems since it suggests that such an error can push the system beyond its natural attractor. This result is consistent with the analysis of Sévellec and Fedorov (2017). They demonstrated that small spatial-scale disturbances of the density field have important impacts on both MVT and MHT, because MVT and MHT are controlled by local East-West density differences (this result is summarized in the “Appendix”). Consequently, oceanic initial condition uncertainty removes any predictability on short interannual time scales, regardless of its intensity (Fig. 3c1–2). However, these small spatial-scale disturbances disappear quickly because of the relatively fast effect of horizontal diffusion on them (Sévellec and Fedorov 2017). This leads to a “sweet spot” for prediction around 5–10 years for meridional volume and heat transport. This originates from the sharp decrease of the oceanic uncertainty and the relatively slow increase of the atmospheric forcing uncertainty. Hence on interannual to decadal time scales the oceanic initial condition uncertainty dominates the error growth, whereas on decadal to multidecadal time scales the error growth is dominated by the atmospheric stochastic forcing for both MVT and MHT (Fig. 3b1–2). This suggests that MVT and MHT interannual to decadal predictions can be improved by a more accurate oceanic initialization.

It should be noted, however, that in a chaotic turbulent ocean, the sharp decrease in oceanic uncertainty might probably not take place. While the role of a small change in initial condition would still decrease on longer time-scales, stochastic internal noise from ocean turbulence should enhance oceanic uncertainty when time progresses. Nevertheless, this “sweet spot” for prediction that arises in laminar ocean models, suggests that in the real ocean predictions 5–10 years ahead are still probably the most valuables in terms of signal to noise ratio.

4 An optimal monitoring system

4.1 Method

As mentioned earlier, to decrease the overall uncertainty, and so to increase potential predictability, we can reduce the oceanic initial condition uncertainty (unlike the stochastic atmospheric forcing uncertainty that will remain). This is particularly true for MVT, MHT and OHC, where oceanic initial condition uncertainty dominates the variance growth over interannual time scales. This reduction can be accomplished by a better monitoring system of the ocean state (i.e., accurate measurement of temperature and salinity). Here we show a way to design such an efficient monitoring system.

For this purpose we use the linear optimal perturbation framework. The formulation of LOP is summarized in the "Appendix" and we refer the reader to Sévellec et al. (2007) and Sévellec and Fedorov (2017) for further details. The LOP framework comprises the computation of the pattern of sensitivity to initial conditions for a given linear metric (such as MVT, MHT, SST and OHC). The LOP depends on the lag between the initial condition perturbation and the metric response (examples of LOPs for different lags and for the four cost functions are shown in Figs. 7, 8, 9, 10). As we will demonstrate, the LOPs are directly relevant for the design of monitoring system.

We first define the optimal perturbation for a given cost function (i.e., the pattern of temperature and salinity anomalies that leads to the biggest change in the given cost function). Following the "Appendix", we obtain:

where \({{\mathsf {{S}}}}\) and \(\epsilon\) are a norm and a parameter setting a global normalization constraint on the LOP at time \(t_i\), such as \(\left\langle {{\varvec{u}}^\mathrm{opt}(t_i)|{{\mathsf {{S}}}}|{\varvec{u}}^\mathrm{opt}(t_i)} \right\rangle\) = \(\epsilon ^2\). The system’s response to the optimal perturbation gives the optimal variance and reads:

Combining (9a) and (12), one can rewrite the variance growth for any set of initial conditions as:

One can easily check this expression by substituting (12) into the numerator of the second term on the right hand side of (14) and using (13) and (9a). We define a re-normalization factor, \(\delta\), as:

where \(\delta\) has the same unit as \(\left| {{\varvec{u}}} \right\rangle\) and \(\epsilon\). Using (13), we can then express the error growth linked to oceanic initial conditions as:

This suggests that the variance of a set of initial conditions can be inferred from the optimal variance after applying a re-normalization factor (based on the local intensity of the initial condition uncertainty and the projection of it onto the optimal perturbation). This re-normalization factor is thus a way to go from a global initial uncertainty to the local one.

This last expression allows us to write the predictive power as:

where \(\sigma _\infty ^2\) = \(\sigma ^2(\infty )\) = \(\sigma _\text {sto}^2(\infty )\), since \(\lim _{t\rightarrow \infty }\sigma _\text {ini}^2(t)\) = 0 (i.e., the system is asymptotically stable, Sévellec and Fedorov 2013). This leads to the property that

This gives a lower and upper theoretical bounds to the predictive power.

This result has important consequences for the design of efficient monitoring systems. Indeed, increasing measurements in regions of high values of the LOP will decrease the initial condition uncertainty in those regions, hence reducing the re-normalization factor, since the latter is the norm of the LOP to the initial condition uncertainty. This will naturally increase the Predictive Power to its ideal value: \(\text {PP}_\text {Perfect}\) = \(1-\sigma _\text {sto}^2(t)/\sigma _\infty ^2\), since \(\lim _{\delta ^4\rightarrow 0}\sigma _\text {ini}^2(t)\) = 0. This result also demonstrates the usefulness of data-targeting: one should decrease the uncertainty in regions of high intensity of the LOP.

We have tested this approach in the ocean GCM and found that data-targeting is indeed particularly efficient at decreasing the error growth. To define the optimal monitoring system we have normalized the time integral from 0- to 40-years delay of absolute temperature and salinity patterns obtained from the LOPs. This gives us a relative sensitivity to initial condition in terms of temperature and salinity at all time scales that can be mathematically expressed as:

where T and S are the optimal pattern of the LOP in terms of temperature and salinity from \(\left| {{\varvec{u}}^\mathrm{opt}(t_i)} \right\rangle\) and OOD is the Optimal Observation Density (Fig. 4).

a1–4 Error growth and b1–4 optimal monitoring system following Eq. (19) in the upper (0–2056 m) and c1–4 deep ocean (2056–4001 m) for a–c1 MVT, a–c2 MHT, a–c3 SST, and a–c4 OHC metrics. Note that the definition of the deep ocean is below typical Argo floats coverage. a1–4 The error growth decreases when uncertainty in the oceanic initial condition is decreased, from a uniform uncertainty of (thick black lines) 0.05 K of standard deviation, by removing uncertainty in region of high optimal observation density: no uncertainty for (green thin lines) OOD > 0.5, (brown thin lines) OOD > 0.25, and (red thick lines) OOD > 0.1. The decrease converges to the perfect case, where no uncertainty in the oceanic initial conditions is assumed (black thin lines)

Hence assuming no uncertainty in oceanic initial conditions in regions of high OOD (and modifying \({\mathsf {\varvec{\Sigma }}}_\text {ini}\) accordingly) we see a decrease in error growth, which converges to the perfect case (where oceanic initial condition uncertainty is neglected). This applies to all prediction time scales (Fig. 4a). Significant improvement is especially possible for the MVT with an optimal monitoring system that is quite narrow and mainly located in the Labrador Sea (Fig. 4b1, c1). SST does not show any improvement (Fig. 4a3). This is expected given the strong control of atmospheric stochastic forcing over error growth in SST (Fig. 3b3). MHT and OHC improvement remains possible (Fig. 4a2, a4) but the rather large scale spread of their OOD (Fig. 4b–c2, b–c4) suggests technical difficulty in monitoring accurately such large regions of the ocean.

4.2 Applicability to in situ measurements

The knowledge of OOD is extremely useful for fundamental understanding of the sensitivity regions of the ocean. However, it remains the question of the feasibility of its development, even as a guide to future monitoring systems. Hence it is fundamental to relate it to current in situ observational systems and in particular to their technological limitations.

In this context, it is interesting to note the high intensity of OOD below 2000 m (Fig. 4c1–4, especially for OHC metric) which is currently the typical maximum depth of Argo float temperature and salinity measurements. This result, which has already been suggested in a wide range of studies (Wunsch 2010; Dunstone and Smith 2010; Heimbach et al. 2011; Germe et al. 2017b), implies that the maximum improvement in prediction skill can only be gained by also accurately monitoring the deep ocean, as soon possible with the development of Deep Argo floats.

Alternatively, monitoring arrays or specific scientific cruise transects can also act to reduce the uncertainty. To diagnose the best latitudinal location in the North Atlantic, we have computed the zonal and depth averages of the OOD for each metrics (Fig. 5). Our study suggests that, to be the most efficient to reduce prediction uncertainty, transects should be located around 55\(^\circ\)N for MVT (northward to the MVT measurement at 50\(^\circ\)N), whereas 35\(^\circ\)N–45\(^\circ\)N is the most efficient latitudinal band for MHT, SST and OHC. This suggests that the most efficient latitudes are always in the subpolar region rather than in the subtropical one (even for MHT despite being estimated at 25\(^\circ\)N). In particular, our study suggests the usefulness of the OSNAP array (located between 53\(^\circ\)N and 60\(^\circ\)N, Lozier et al. 2016) compared to the RAPID array (located at 26.5\(^\circ\)N, McCarthy et al. 2012) to reduce prediction uncertainties of the MVT metrics.

Beyond these more classical ocean monitoring systems, our method can also provide guidance for more innovative in situ ocean measurements, such as glider journeys. To this purpose we estimate the area covered by a single glider over a month. Following Wood (2010) and Gafurov and Klochkov (2015) typical travel speed for Deepglider is 0.25 m s\(^{-1}\) and assuming a 160 km autocorrelation length scale for oceanic observations (Purkey and Johnson 2010) we find: \(\sim\)100,000 km\(^2\). On the other hand, from ODD we can compute the decrease of uncertainty per area monitored assuming that all depth are monitored as now possible with Deepgliders. Comparing these two estimates gives us the reduction for a glider fleet size for the MVT error gowth (Fig. 6). Hence our estimation suggests that for a fleet of 70 gliders the uncertainty due to oceanic initial condition can be reduced by 90% for the first 5 years and by 80% for 10 years. Even with only 15 gliders the error is already reduced by more than 70% on time scales from 1 to 5 years.

Relative reduction of the MVT error growth (due to oceanic initial condition uncertainty) as a function of monitored area following the optimal monitoring system (vertical average of Fig. 4b1, c1). For comparison we have computed the glider fleet number required to cover the monitored area in a month, assuming a 0.25 m per second travel speed (Wood 2010; Gafurov and Klochkov 2015) and a 160 km spatial correlation of observations (Purkey and Johnson 2010). (Gliders are assumed to reach the bottom of the ocean, as now possible with Deepgliders.) Thick solid line is 50%, and thin dashed and solid lines are for lower and higher values, respectively; contour interval is 10%

5 Discussion and conclusion

In this study, we have focused on the North Atlantic to assess the predictability of four ocean metrics: the AMOC intensity (MVT at 50\(^\circ\)N and above 1500 m depth), the intensity of its heat transport (MHT at 25\(^\circ\)N), the spatially-averaged SST over the North Atlantic (from 30\(^\circ\)N to 70\(^\circ\)N), and the spatial and depth averaged North Atlantic ocean temperature (from 30\(^\circ\)N to 70\(^\circ\)N). Here we propose a theoretical framework to quantitatively assess the growth of small perturbations.

Following the study of Chang et al. (2004), we have developed an exact expression of the ocean predictability for given metrics under 3 main assumptions. (1) The uncertainty remains small (linear assumption); (2) the uncertainty follows a normal distribution (independence of uncertainties); and (3) the ocean dynamics can be treated in a forced context (absence of explicit ocean–atmosphere feedback). In addition, this theoretical result allows us to separate the sources of uncertainty. We are thus able to attribute on a dynamical ground the relative role of internal oceanic initial condition uncertainty and of external atmospheric synoptic noise on ocean prediction uncertainty. After illustrating the method in an idealized model where analytic solutions are known (Hasselmann 1976) the method has been applied to a state-of-the-art GCM (NEMO, Madec et al. 1998) in its 2\(^\circ\) realistic configuration (ORCA2, Madec and Imbard 1996). Given the importance of the model uncertainty on the time scales studied (Hawkins and Sutton 2009), it is worth noting that the single model approach used in this study limits the generalization of our results. Hence our developed framework needs to be applied to other ocean GCMs as well. In particular the location and intensity of deep convection, that is crucial for meridional volume transport (Sévellec and Fedorov 2015) might be strongly model-dependent, potentially modulating the optimal monitoring system.

Our analysis suggests that spatially-averaged SST uncertainty is strongly dominated by the atmospheric synoptic noise (Fig. 3b3), with a strong impact at all time scales, suggesting the limited predictability of this metric. The three other metrics (MVT, MHT and OHC, Fig. 3b1, 2 and 4) are dominated (\(\sim\) 80%) by oceanic initial condition uncertainty on interannual time scales (< 5 years). Whereas the Predictive Power of OHC is monotonically decreasing suggesting the higher predictability of shorter time scales, MVT and MHT predictive power features a “sweet spot” at interannual time scales (Fig. 3c). This means that MVT and MHT are especially predictable on 5–10 years time scales where the signal to noise ratio peaks.

These results were obtained by focusing on the propagation of the error measured through the variance of its probability density function. We have shown that we can also compute solutions for other statistical moments, and so potentially reconstruct the entire probability density function. However, we are limited by two assumptions that restrict the generalization of our solutions. The first assumption is on the structure of the atmospheric stochastic forcing and of oceanic initial condition uncertainty. Assuming a Gaussian white noise leads to simplification in the mathematical treatment of the problem and allows an analytic solution. Hence, by construction our theoretical solution and its numerical application with the ocean GCM consider only random spatially-uncorrelated errors for the oceanic initial condition uncertainties. However initial conditions uncertainties, which are often derived from ocean reanalysis for operational prediction systems, might not have such a useful property. They are often spatially correlated. This might change our results. In particular, this might eliminate the important interannual uncertainties for MHT and MVT due to rapid unbalanced errors. Hence, it would be ideal to generalize our theoretical result to a more general uncertainty. This will be a direction for future investigation. The other assumption is on the linearity of the dynamics which limits the behaviour that can be represented. For instance, the possible occurrence of qualitative changes in the probabilistic distribution (e.g., P-bifurcation), such as the change from an unimodal to a bi-modal distribution, cannot be captured. In this context other methods should be used such as the pullback attractor (e.g., Ghil et al. 2008) or transfer operator (e.g., Tantet et al. 2015) techniques. However they appear to be still computationally expensive and are currently only applicable for idealized models.

The dominance of oceanic initial condition uncertainty in the overall MVT, MHT and OHC uncertainty strongly suggests the possible improvement of predicting these metrics. This source of uncertainty can be reduced by an accurate monitoring of the oceanic state. By using LOPs to design an optimal monitoring system, reduction of such uncertainty is accomplished. Such monitoring systems can be done by the deployment of a suited mooring array or repeated transects at 35\(^\circ\)N and 55\(^\circ\)N. Alternatively, a fleet of gliders might be able to efficiently sample the important regions. We estimate that a fleet of 15 gliders can reduced the uncertainty due to oceanic initial condition by more than 70% on time scales from 1 to 5 years. Also, since gliders are a type of autonomous robotic vehicle, they can both monitor the suggested region and be re-oriented on the fly as the optimal monitoring system evolves. At an operational level our method coupled to a glider fleet would provide a self-adaptative network specifically designed for improving a prediction system.

The spatial resolution of the ocean model used here restricts the study of ocean dynamics to non-eddying regimes. Hence testing the role of oceanic mesoscale eddies is a direction for future work, that would require the extension of the current method to a fully nonlinear framework (following conditional nonlinear optimal peturbations by Mu and Zhang 2006; Li et al. 2014, for instance). The ocean-only forced context of our analysis neglects the impact of large scale atmospheric feedbacks. However, Sévellec and Fedorov (2017) showed that the LOPs are only marginally modified by the ocean surface boundary conditions and Germe et al. (2017a) demonstrated that the overall expected behaviour of LOPs is conserved in a coupled context, despite a reduction of impact. We anticipate that both air-sea interaction and ocean turbulence impact the error growth. The “sweet spot” around 5–10 years for MVT and MHT, showing a minimum in uncertainty in the laminar ocean model, suggests that predicting these time scales in a fully coupled and eddy-resolving climate model is probably most valuable in terms of signal-to-noise ratio and that further development of the method outlined here to more complex Earth System Models is a promising route for improving climate predictions.

References

Alexander J, Monahan AH (2009) Nonnormal perturbation growth of pure Thermohaline Circulation using a 2D zonally averaged model. J Phys Oceanogr 39:369–386

Baehr J, Piontek R (2014) Ensemble initialization of the oceanic component of a coupled model through bred vectors at seasonal- to-interannual timescales. Geosci Model Dev 7:453–461

Boer GJ (2011) Decadal potential predictability of twenty-first century climate. Clim Dyn 36:1119–1133

Branstator G, Teng H (2012) Two limits of initial-value decadal predictability in a CGCM. J Clim 23:6292–6311

Branstator G, Teng H (2014) Is AMOC more predictable than north Atlantic heat content? J Clim 27:3537–3550

Chang J et al (2004) Predictability of linear coupled systems. Part I: theoretical analyse. J Clim 17:1474–1486

Chylek P et al (2011) Ice-core data evidence for a prominent near 20 year time-scale of the Atlantic Multidecadal Oscillation. Geophys Res Lett 38(L13):704

Collins M, Sinha B (2003) Predictability of decadal variations in the thermohaline circulation and climate. Geophys Res Lett 30:1306

Collins M et al (2006) Interannual to decadal climate predictability in the North Atlantic: a multimodel-ensemble study. J Clim 19:1195–1203

Debruyères DG et al (2016) Global and full-depth ocean temperature trends during the early 21st century from argo and repeat hydrography. J Clim 30:1985–1997

Deser C et al (2012) Uncertainty in climate change projections: the role of internal variability. Clim Dyn 38:527–546

Dijkstra HA (2013) Nonlinear climate dynamics. Cambridge University Press, Cambridge, p 357

Drijfhout S (2015) Competition between global warming and an abrupt collapse of the AMOC in Earth’s energy imbalance. Sci Rep 5(14):877

Du H et al (2012) Sensitivity of decadal predictions to the initial atmospheric and oceanic perturbations. Clim Dyn 39:2013–2023

Dunstone NJ, Smith DM (2010) Impact of atmosphere and sub-surface ocean data on decadal climate prediction. Geophys Res Lett 37(L02):709

Farrell BF, Ioannou PJ (1996a) Generalized stability theory. Part I: autonomous operators. J Atmos Sci 35:2025–2040

Farrell BF, Ioannou PJ (1996b) Generalized stability theory. Part II: nonautonomous operators. J Atmos Sci 53:2041–2053

Frankcombe LM, Dijkstra HA (2009) Coherent multidecadal variability in North Atlantic sea level. Geophys Res Lett 36(L15):604

Frankignoul C, Hasselmann K (1977) Stochastic climate models, Part II. Application to sea-surface temperature anomalies and thermocline variability. Tellus 29:289–305

Gafurov SA, Klochkov EV (2015) Autonomous unmanned underwater vehicles development tendencies. Procedia Eng 106:141–148

Germe A et al (2017a) Impacts of the North Atlantic deep temperature perturbations on decadal climate variability and predictability. Clim Dyn (submitted)

Germe A et al (2017b) On the robustness of near term climate predictability regarding initial state uncertainties. Clim Dyn 48:353–366

Ghil M, Chekroun M, Simonnet E (2008) Climate dynamics and fluid mechanics: natural variability and related uncertainties. Phys D 237:2111–2126

Griffies SM, Bryan K (1997) A predictability study of simulated North Atlantic multidecadal variability. Clim Dyn 13:459–487

Hasselmann K (1976) Stochastic climate models. Part I: Theory. Tellus 28:473–485

Hawkins E, Sutton R (2009) The potential to narrow uncertainty in regional climate predictions. Bull Am Meteorol Soc 90:1095–1107

Hawkins E, Sutton R (2011) Estimating climatically relevant singular vectors for decadal predictions of the Atlantic Ocean. J Clim 24:109–123

Hawkins E et al (2016) Irreducible uncertainty in near-term climate projections. Clim Dyn 46:3807–3819

Heimbach P et al (2011) Timescales and regions of the sensitivity of atlantic meridional volume and heat transport: toward observing system design. Deep Sea Res Part II 58:1858–1879

Hermanson L, Sutton L (2010) Case studies in interannual to decadal climate predictability. Clim Dyn 35:1169–1189

Hurrell J et al (2006) Atlantic climate variability and predictability: a CLIVAR perspective. J Clim 19:5100–5121

IPCC (2007) Climate change 2007—the physical science basis: contribution of Working Group I to the Fourth Assessment Report of the IPCC. Cambridge University Press, Cambridge

IPCC (2013) Climate change 2013—the physical science basis: contribution of Working Group I to the Fifth Assessment Report of the IPCC. Cambridge University Press, Cambridge

Keenlyside NS et al (2008) Advancing decadal-scale climate prediction in the North Atlantic sector. Nature 453:84–88

Kushnir Y (1994) Interdecadal variations in North Atlantic sea surface temperature and associated atmospheric conditions. J Clim 7:141–157

Latif M et al (2006) A review of predictability studies of Atlantic sector climate on decadal time scales. J Clim 19:5971–5986

Leutbecher M et al (2002) Potential improvement to forecasts of two severe storms using targeted observations. Q J R Meteorol Soc 128:1641–1670

Li Y, Peng S, Liu D (2014) Adaptive observation in the South China Sea using CNOP approach based on a 3-D ocean circulation model and its adjoint model. J Geophys Res. doi:10.1002/2014JC010 220

Lorenz EN (1963) Deterministic non-periodic flow. J Atmos Sci 20:130–141

Lozier MS et al (2016) Overturning in the Subpolar North Atlantic Program: a new international ocean observing system. Bull Am Meteorol Soc 98:737–752

Madec G, Imbard M (1996) A global ocean mesh to overcome the North Pole singularity. Clim Dyn 12:381–388

Madec G, et al (1998) OPA 8.1 ocean general circulation model reference manual. Tech. Rep., Institut Pierre-Simon Laplace (IPSL), France, No. 11, p 91

McCarthy G et al (2012) Observed interannual variability of the Atlantic meridional overturning circulation at 26.5\(^\circ\)N. Geophys Res Lett 39(L19):609

Meehl GA et al (2009) Decadal prediction: can it be skillful? Bull Am Meteorol Soc 90:1467–1485

Mignot J et al (2013) On the evolution of the oceanic component of the IPSL climate models from CMIP3 to CMIP5: a mean state comparison. Ocean Modell 72:167–184

Montani A et al (1999) Forecast skill of the ECMWF model using targeted observations during FASTEX. Q J R Meteorol Soc 125:3219–3240

Msadek R et al (2010) Assessing the predictability of the Atlantic meridional overturning circulation and associated fingerprints. Geophys Res Lett 37(L19):608

Mu M, Zhang Z (2006) Conditional nonlinear optimal perturbations of a two-dimensional quasigeostrophic model. J Atmos Sci 63:1587–1604

Palmer TN (1999) A nonlinear dynamical perspective on climate prediction. J Clim 12:575–591

Persechino A et al (2013) Decadal predictability of the Atlantic meridional overturning circulation and climate in the IPSL-CM5A-LR model. Clim Dyn 40:2359–2380

Pohlmann H et al (2004) Estimating the decadal predictability of coupled AOGCM. J Clim 17:4463–4472

Purkey SG, Johnson GC (2010) Warming of global abyssal and deep southern ocean waters between the 1990s and 2000s: contributions to global heat and sea level rise budgets. J Clim 23:6336–6351

Qin X, Mu M (2011) Influence of conditional nonlinear optimal perturbations sensitivity on typhoon track forecasts. R Meteorol Soc Q J. doi:10.1002/qj.902

Schneider T, Griffies SM (1999) A conceptual framework for predictability studies. J Clim 12:3133–3155

Sévellec F, Ben Jelloul M, Huck T (2007) Optimal surface salinity perturbations influencing the thermohaline circulation. J Phys Oceanogr 37:2789–2808

Sévellec F, Fedorov AV (2013) The leading, interdecadal eigenmode of the Atlantic meridional overturning circulation in a realistic ocean model. J Clim 26:2160–2183

Sévellec F, Fedorov AV (2015) Optimal excitation of AMOC decadal variability: links to the subpolar ocean. Prog Oceanogr 132:287–304

Sévellec F, Fedorov AV (2017) Predictability and decadal variability of the North Atlantic ocean state evaluated from a realistic ocean model. J Clim 30:477–498

Sévellec F, Sinha B (2017) Predictability of decadal Atlantic Meridional overturning circulation variations. Oxford Research Encyclopedia of Climate Science (submitted)

Strogatz SH (1994) Nonlinear dynamics and chaos with applications to physics, biology, chemistry and engineering. Advanced book program, Perseus book, p 498

Tantet A, van der Burgt FR, Dijkstra HA (2015) An early warning indicator for atmospheric blocking events using transfer operators. Chaos 25(036):406

Taylor KE, Stouffer RJ, Meehl GA (2012) An overview of CMIP5 and the experiment design. Bull Am Meteorol Soc 93:485–498

Teng H, Branstator G, Meehl GH (2011) Predictability of the Atlantic overturning circulation and associated surface patterns in two CCSM3 climate change ensemble experiments. J Clim 24:6054–6076

Tziperman E, Ioannou PJ (2002) Transient growth and optimal excitation of thermohaline variability. J Phys Oceanogr 32:3427–3435

Weaver AT, Vialard J, Anderson DLT (2003) Three- and four-dimensional variational assimilation with a general circulation model of the tropical Pacific Ocean. Part 1: formulation, internal diagnostics and consistency checks. Mon Weather Rev 131:1360–1378

Wood S (2010) Autonomous underwater gliders. Underwater vehicles. Tech. Rep, Florida Institute of Technology

Wunsch C (2010) Observational network design for climate. In: OceanObs2009 plenary papers, 1. doi:10.5270/OceanObs09.pp.41

Zanna L (2012) Forecast skill and predictability of observed Atlantic sea surface temperatures. J Clim 25:5047–5056

Zanna L, Tziperman E (2008) Optimal surface excitation of the thermohaline circulation. J Phys Oceanogr 38:1820–1830

Zhou F, Mu M (2011) The impact of verification area design on tropical cyclone targeted observations based on the CNOP method. Adv Atmos Sci 28:997–1010

Acknowledgements

This research was supported by the Natural and Environmental Research Council UK (SMURPHS, NE/N005767/1 to FS and SSD and DYNAMOC, NE/M005127/1 to AG), FS received support from DECLIC and Meso-Var-Clim projects funded through the French CNRS/INSO/LEFE program, and HAD received support from the Netherlands Center for Earth System Science (NESSC).

Author information

Authors and Affiliations

Corresponding author

Appendix: LOP computation

Appendix: LOP computation

Here, we summarize the calculation of the Linear Optimal Perturbation. The discussion closely follows a series of studies on similar topics (see Sévellec et al. 2007; Sévellec and Fedorov 2017, for instance).

To find the optimal perturbation associated to a metric \(\langle{\boldsymbol{F}}\) under the constraint of the LOP falling within the initial condition uncertainty (i.e., a global size constraint or an average local variance constraint), we define the Lagrangian function as:

where \(t_i\) is the initial time (when the optimal initial perturbation is applied), t is the maximization time (when the cost function reaches its maximum value), and \(\gamma\) is a Lagrange multiplier. Furthermore, \(\epsilon\) and \({{\mathsf {{S}}}}\) are a parameter and a norm, respectively, associated with the normalization constraint:

where \(\alpha\) and \(\beta\) are the thermal expansion and haline contraction coefficients, respectively; and dv and V are the unit and total ocean volumes (note that \({{\mathsf {{S}}}}\) is invertible since it represents a norm). That is, \(\epsilon\) measures the magnitude of the initial perturbation and restricts the perturbation to be within a globally average initial uncertainty and is set to \(\epsilon\)/\(\alpha\) = 1 mK. The goal here is to maximize the cost function subject to this normalization constraint.

Linear optimal perturbations of the MVT metrics. a The MVT response to LOP is computed for delay ranging from 0 to 40 years. b–e Four examples of LOP for delay of 2, 5, 10 and 20 years, as indicated by the vertical lines in a: b-e1 0–2056 m average temperature, b-e2 2056–4001 m average temperature, b-e3 0–2056 m average salinity, b-e4 2056–4001 m average salinity. For comparison all LOPs are normalized by \(\sqrt{\left\langle {{\varvec{u}}^\mathrm{opt}(t_i)|{{\mathsf {{S}}}}|{\varvec{u}}^\mathrm{opt}(t_i)} \right\rangle }\)/\(\alpha\) = 1 mK

As Fig. 7 but for the MHT metric

As Fig. 7 but for the SST metric

As Fig. 7 but for the OHC metric

From expression (20) and the optimization condition \(\delta \mathcal {L}\)= 0 the optimal initial perturbations are computed as

Hence we can bound the impact of the initial disturbances as:

Examples of LOPs and their predicted impact are displayed in Figs. 7, 8, 9, and 10, using MVT, MHT, SST, and OHC for their cost functions, respectively. They have been studied in detail by Sévellec and Fedorov (2017). Here, we summarize their relevant behaviours for this study. Depending on the cost function, LOPs are dramatically different. For OHC and SST, LOPs are basin-scale anomalies acting most efficiently on interannual to decadal time scales (Figs. 9, 10); for MVT and MHT, together with their basin-scale signature LOPs have also a small spatial-scale signature and act most efficiently instantaneously (Figs 7, 8). This can be rationalized by the intrinsic characteristics of the metrics. Whereas, MVT and MHT are virtually equivalent to East–West density differences (that can be induced by both small and large spatial-scale disturbances), OHC and SST are basin-scale spatial-averages of temperature field (filtering-out their sensitivity to small spatial-scale disturbances). All the LOPs have important signature in the deep ocean (included below 2000 m).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sévellec, F., Dijkstra, H.A., Drijfhout, S.S. et al. Dynamical attribution of oceanic prediction uncertainty in the North Atlantic: application to the design of optimal monitoring systems. Clim Dyn 51, 1517–1535 (2018). https://doi.org/10.1007/s00382-017-3969-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00382-017-3969-2